Workstation

Windows 11 Workstation VM asking for encryption password that you did not explicitly set

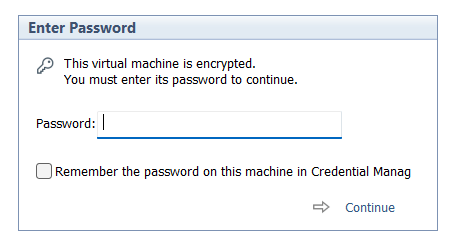

I had created a Windows 11 VM on Workstation 25H2 and then moved it to a new deployment of Workstation. When I powered it on, the VM prompted me for a password (Fig-1) because it was encrypted. In this post I’ll cover why this happened and how I got around it.

Note: Disabling TPM/Secure Boot is not recommended for any system, and bypassing security leaves systems open to attack. If you are curious about VMware system hardening, check out this great video by Bob Plankers.

(Fig-1)

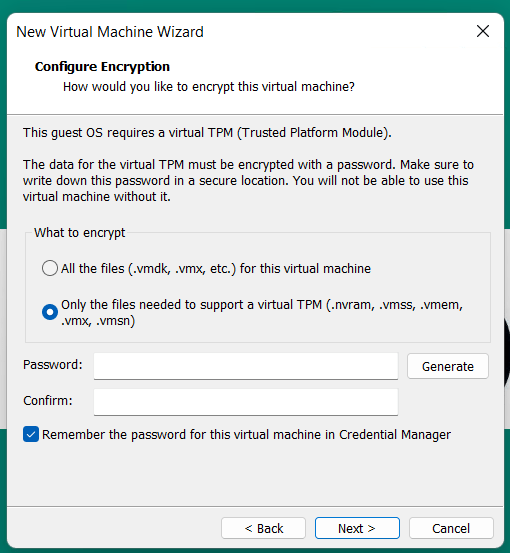

Why did this happen? As of VMware Workstation 17, a VM must be encrypted to use a TPM 2.0 device, which Windows 11 requires. When you create a new Windows 11 x64 VM, the New VM Wizard (Fig-2) asks you to set an encryption password or have one auto-generated. This lets the VM meet the Windows 11 TPM/Secure Boot requirements.

(Fig-2)

I didn’t set a password, so where is the auto-generated one kept? If you let VMware auto-generate the password, it is likely stored in your host machine’s credential manager. On Windows, open Windows Credential Manager (search for “Credential Manager” in the Start menu) and look for an entry related to VMware, such as “VMware Workstation”.

I don’t have access to the PC where the auto-generated password was kept, so how did I get around this? I edited the VM’s VMX configuration file, commented out the following entries, and then added the VM back into Workstation. Note: this removes the vTPM device from the virtual hardware and is not recommended.

- `# vmx.encryptionType`

- `# encryptedVM.guid`

- `# vtpm.ekCSR`

- `# vtpm.ekCRT`

- `# vtpm.present`

- `# encryption.keySafe`

- `# encryption.data`
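The edit above is easy to do by hand in a text editor. For a repeatable version, here is a minimal Python sketch, assuming the VM is powered off and removed from Workstation first, and that you keep the untouched `.bak` copy it writes. `comment_out_keys` and `patch_vmx` are hypothetical helpers, not part of any VMware tool; they only prefix `# ` to lines whose key matches the list above, and treating VMX keys case-insensitively is an assumption.

```python
from pathlib import Path

# The encryption/vTPM keys listed above.
ENCRYPTION_KEYS = (
    "vmx.encryptionType",
    "encryptedVM.guid",
    "vtpm.ekCSR",
    "vtpm.ekCRT",
    "vtpm.present",
    "encryption.keySafe",
    "encryption.data",
)


def comment_out_keys(vmx_text, keys=ENCRYPTION_KEYS):
    """Return the VMX text with every line for one of `keys` prefixed by '# '.

    Keys are compared case-insensitively (an assumption about VMX parsing).
    Lines that are already commented start with '#', so they never match
    and running this twice changes nothing.
    """
    wanted = {k.lower() for k in keys}
    out = []
    for line in vmx_text.splitlines():
        key = line.split("=", 1)[0].strip().lower()
        out.append("# " + line if key in wanted else line)
    return "\n".join(out) + "\n"


def patch_vmx(path):
    """Save a .bak copy of the .vmx file, then rewrite it with the keys commented out."""
    vmx = Path(path)
    original = vmx.read_text(encoding="utf-8")
    vmx.with_name(vmx.name + ".bak").write_text(original, encoding="utf-8")
    vmx.write_text(comment_out_keys(original), encoding="utf-8")
```

Usage would be something like `patch_vmx(r"D:\VMs\Win11\Win11.vmx")` (a hypothetical path), followed by File > Open in Workstation to add the VM back.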

How could I avoid this going forward? There are two options.

Option 1 – When creating the VM, set and record the password.

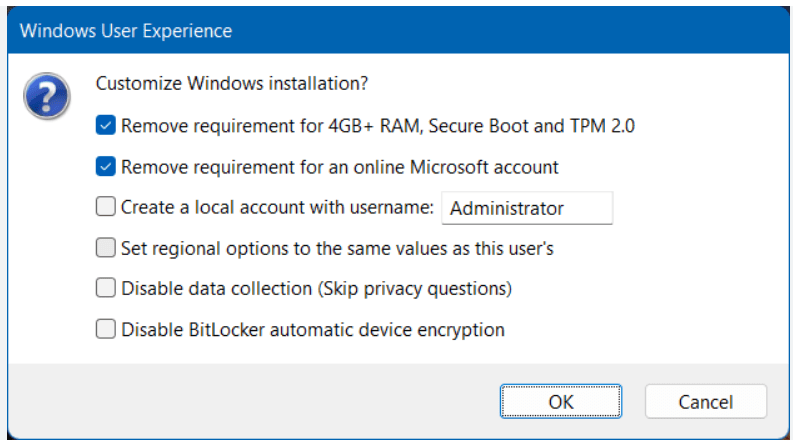

Option 2 – To avoid this altogether, use Rufus to create Windows 11 installation media that doesn’t require TPM/Secure Boot, then build a VM without those features.

- Use Rufus to create a bootable Windows 11 USB drive. When prompted, choose the option that removes the Secure Boot and TPM 2.0 requirements.

- Once the USB drive is created, create a new Windows 11 x64 VM in Workstation.

- For the creation options, choose Typical > I will install the operating system later > Windows 11 x64 for the OS > choose a name/location > note the encryption password > Finish

- When the VM has been created, edit its settings > remove the Trusted Platform Module > then go to Options > Access Control > Remove Encryption > enter the password > OK

- Now attach the Rufus USB drive to the VM and boot from it.

- From there install Windows 11.

Wrapping this up: bypassing security let me access my VM again. However, it leaves the VM more vulnerable to attack. In the end, I re-enabled security on this VM and properly recorded its password.

VMware Workstation Gen 9: BOM2 P2 Windows 11 Install and setup

**Urgent Note:** The Gigabyte mobo in BOM2 was initially working well in my deployment. However, shortly after I completed this post, the mobo failed. I was able to return it, but the cost of a replacement had doubled. I’m currently looking for a different mobo and will post about it soon.

For the Gen 9 BOM2 project, I have opted for a clean installation of Windows 11 to ensure a baseline of stability and performance. This transition necessitates a full reconfiguration of both the operating system and my primary Workstation environment. In this post, I will outline the specific workflow and configuration steps I followed during the setup. Please note that this is not intended to be an exhaustive guide, but rather a technical log of my personal implementation process.

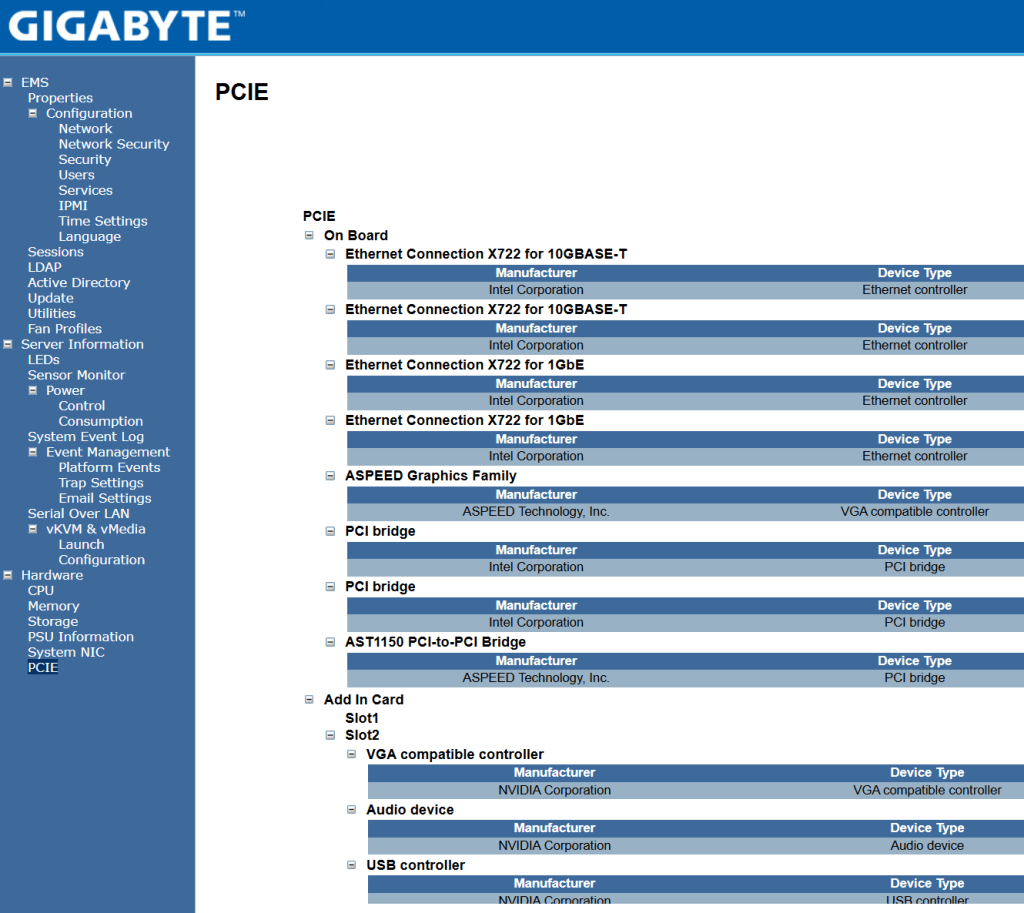

After backing up my VMs and ensuring they are not encrypted, the first thing I do is install the new hardware and confirm the motherboard recognizes all of it. Quite a few items are carried over from BOM1, plus several new ones, so it’s important that they are all recognized before Windows 11 is installed.

The Gigabyte mobo has a web-based Embedded Management Software tool that lets me confirm all hardware is recognized. After logging in, I find the Hardware section the most useful; its PCIe section is the most detailed and lets me confirm my devices.

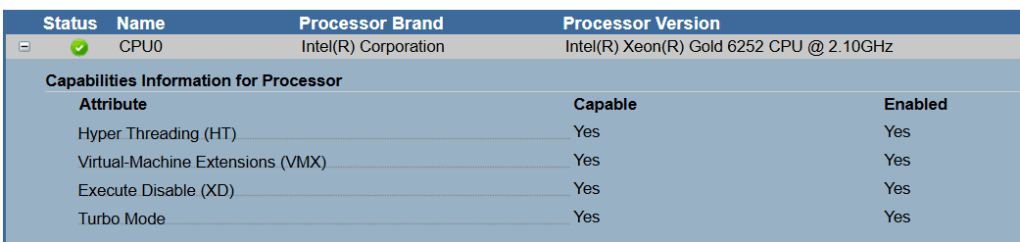

Next, I validate that Virtual Machine Extensions (VMX) are enabled in the CPU settings. Workstation requires this.

Once all the hardware is confirmed, I create my Windows 11 boot USB using Rufus and boot from it. For more information on this process, see my past video on creating it.

Next, I install Windows 11, and once it’s complete I install the following drivers and updates.

- Install Intel Chipset drivers

- Install Intel NIC Drivers

- Run Windows updates

- Optionally, I update the Nvidia Video Drivers

At this point all the correct drivers should be installed. I validate this in Device Manager by ensuring all devices have been recognized.

I then go into Disk Management and ensure all the drives have the same drive letters they had in BOM1.

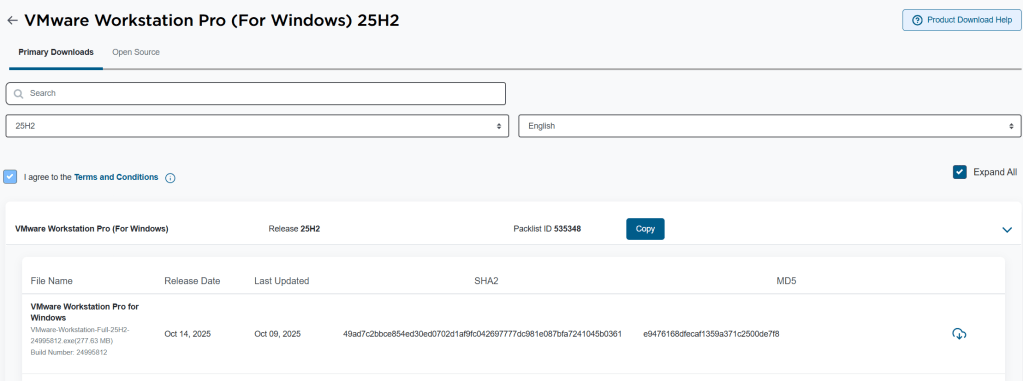

Workstation Pro is now free and users can download it at the Broadcom support portal. You can find it there under FREE Downloads.

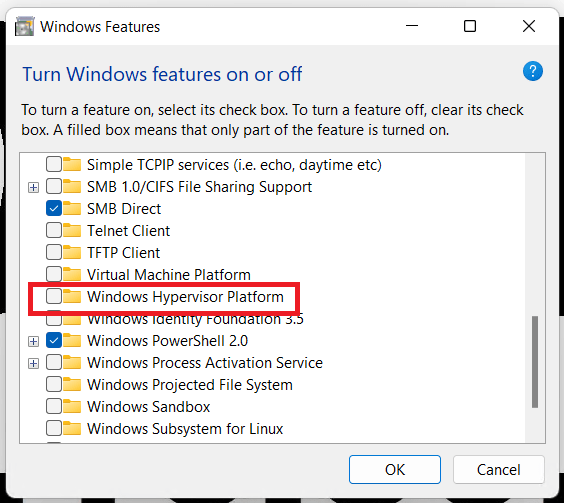

Before I install Workstation, I validate that Windows Hyper-V is not enabled. In Windows Features, I ensure that Hyper-V and Windows Hypervisor Platform are NOT checked.
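If you prefer to check from the command line instead of the Windows Features dialog, here is a minimal Python sketch. It assumes the DISM feature names `Microsoft-Hyper-V-All` (Hyper-V) and `HypervisorPlatform` (Windows Hypervisor Platform) and that DISM prints an English `State : ...` line; confirm both on your build. The query needs an elevated prompt, and the helper names are hypothetical.

```python
import subprocess

# Assumed DISM feature names for the two checkboxes mentioned above.
HYPERV_FEATURES = ("Microsoft-Hyper-V-All", "HypervisorPlatform")


def parse_feature_state(dism_output):
    """Return the value of the 'State : ...' line in DISM output, or 'Unknown'."""
    for line in dism_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "State":
            return value.strip()
    return "Unknown"


def feature_state(name):
    """Query one optional feature with DISM (Windows only, elevated prompt)."""
    result = subprocess.run(
        ["dism", "/online", "/get-featureinfo", f"/featurename:{name}"],
        capture_output=True, text=True, check=True,
    )
    return parse_feature_state(result.stdout)


def enabled_features(names=HYPERV_FEATURES):
    """List features that are not fully disabled, i.e. the ones to uncheck first."""
    return [name for name in names if feature_state(name) != "Disabled"]
```

An empty list from `enabled_features()` would mean both features are already off; a state such as "Disable Pending" is deliberately reported, since it still needs a reboot.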

Once that’s confirmed, I install Workstation 25H2. For more information on how to install Workstation 25H2, see my blog.

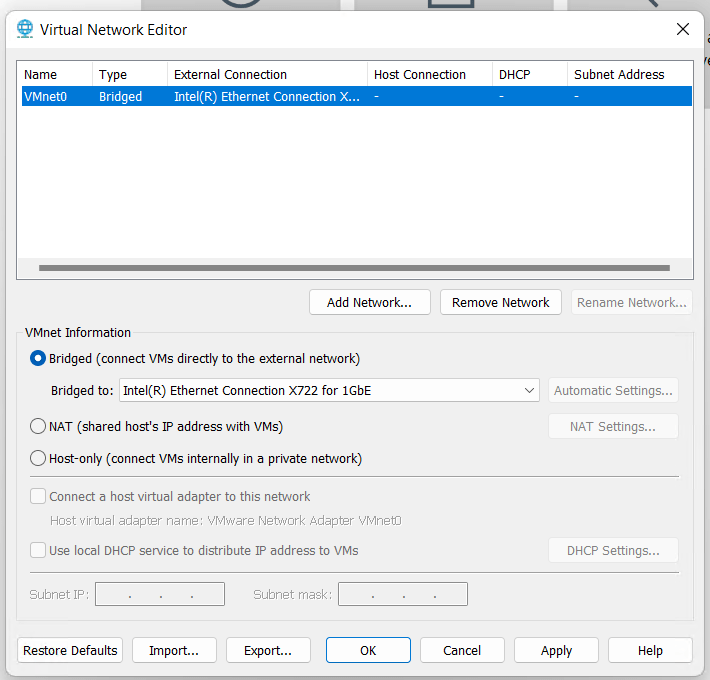

After Workstation has finished installing, I open it and go to Edit > Virtual Network Editor. I delete the other VMnets and set VMnet0 to bridge to the correct network adapter.

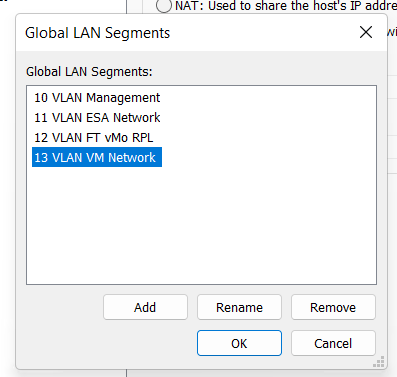

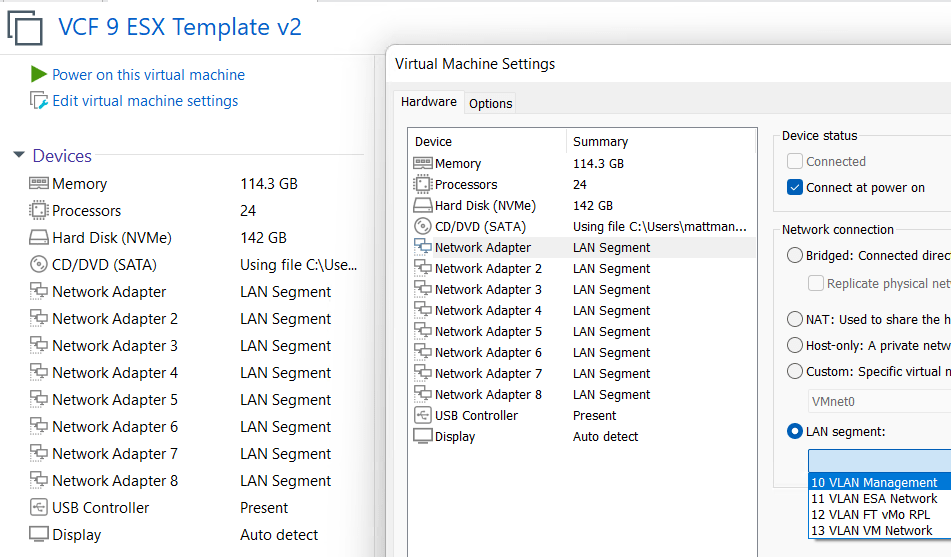

Next, I create a simple VM and add all the LAN Segments. For more information on this process, see my post on LAN Segments.

One at a time, I add each of my VMs back and ensure their LAN Segments are assigned correctly.

This is what I love about Workstation: I was able to recover my entire VCF 9 environment and move it to a new system quite quickly. In my next post, I’ll cover how I set up Windows 11 for better performance.

VMware Workstation Gen 9: BOM2 P1 Motherboard upgrade

**Urgent Note:** The Gigabyte mobo in BOM2 was initially working well in my deployment. However, shortly after I completed this post, the mobo failed. I was able to return it, but the cost of a replacement had doubled. I’m currently looking for a different mobo and will post about it soon.

To take the next step in deploying a VCF 9 Simple stack with VCF Automation, I’m going to need to make some updates to my Workstation Home Lab. BOM1 simply doesn’t have enough RAM, and I’m a bit concerned about VCF Automation being CPU hungry. In this blog post I’ll cover some of the products I chose for BOM2.

Although my ASRock Rack motherboard (BOM1) was performing well, it was constrained by available memory capacity. I had additional 32 GB DDR4 modules on hand, but all RAM slots were already populated. I considered upgrading to higher-capacity DIMMs; however, the cost was prohibitive. Ultimately, replacing the motherboard proved to be a more cost-effective solution, allowing me to leverage the memory I already owned.

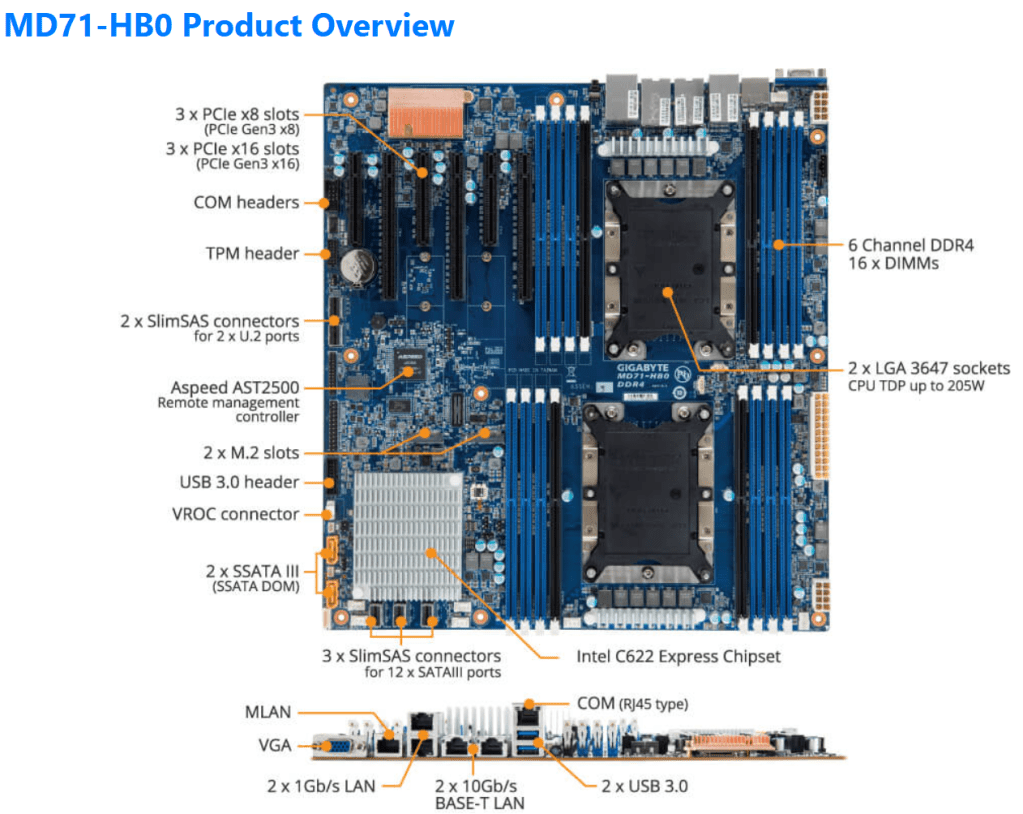

The mobo I chose was the Gigabyte MD71-HB0. It was rather affordable, but it lacks PCIe bifurcation, a feature I needed to run two NVMe disks from one PCIe slot. To overcome this, I chose the RIITOP M.2 NVMe SSD to PCI-e 3.1 card. These cards essentially emulate a bifurcated PCIe slot, allowing two NVMe disks in a single PCIe slot.

The table below outlines the changes planned for BOM2. Few products from the original configuration went unused, and after migrating components, the updated build will provide more than enough resources to meet my VCF 9 compute and RAM requirements.

Pro Tip: When assembling new hardware, I take a methodical, incremental approach. I install and validate one component at a time, which makes troubleshooting far easier if an issue arises. I typically start with the CPUs and a minimal amount of RAM, then scale up to the full memory configuration, followed by the video card, add-in cards, and then storage. It’s a practical application of the old adage: don’t bite off more than you can chew—or in this case, compute.

| KEEP from BOM1 | Added to create BOM2 | UNUSED |
|---|---|---|
| Case: Phanteks Enthoo Pro series PH-ES614PC_BK Black Steel | Mobo: Gigabyte MD71-HB0 | Mobo: ASRock Rack EPC621D8A |
| CPU: 1 x Xeon Gold ES 6252 (ES means Engineering Sample), 24 pCores | CPU: 1 x Xeon Gold ES 6252 (ES means Engineering Sample), new net total 48 pCores | NVMe Adapter: 3 x Supermicro PCI-E Add-On Card for up to two NVMe SSDs |
| Cooler: 1 x Noctua NH-D9 DX-3647 4U | Cooler: 1 x Noctua NH-D9 DX-3647 4U | 10GbE NIC: ASUS XG-C100C 10G Network Adapter |
| RAM: 384GB (4 x 64GB Samsung M393A8G40MB2-CVFBY, 4 x 32GB Micron MTA36ASF4G72PZ-2G9E2) | RAM: 8 x 32GB Micron MTA36ASF4G72PZ-2G9E2, new net total 640GB | |
| NVMe: 2 x 1TB NVMe (Win 11 Boot Disk and Workstation VMs) | NVMe Adapter: 3 x RIITOP M.2 NVMe SSD to PCI-e 3.1 | |
| NVMe: 6 x Sabrent 2TB ROCKET NVMe PCIe (Workstation VMs) | Disk Cables: 2 x Slimline SAS 4.0 SFF-8654 | |
| HDD: 1 x Seagate IronWolf Pro 18TB | | |
| SSD: 1 x 3.84TB Intel D3-4510 (Workstation VMs) | | |
| Video Card: GIGABYTE GeForce GTX 1650 SUPER | | |
| Power Supply: Antec NeoECO Gold ZEN 700W | | |
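As a quick sanity check of the totals in the table, the RAM and core arithmetic works out as follows. The module counts and sizes come straight from the table, and 24 cores per CPU is the figure the table gives for the Xeon Gold 6252 ES; nothing else is assumed.

```python
# (count, size in GB) pairs taken from the BOM table above.
BOM1_RAM = [(4, 64), (4, 32)]    # 4 x 64GB Samsung + 4 x 32GB Micron, kept
BOM2_ADDED_RAM = [(8, 32)]       # 8 x 32GB Micron, added for BOM2
CORES_PER_CPU = 24               # Xeon Gold 6252 ES, per the table


def total_gb(modules):
    """Sum the capacity of a list of (count, size_gb) pairs."""
    return sum(count * size for count, size in modules)


bom1_total = total_gb(BOM1_RAM)                                     # 384
bom2_total = bom1_total + total_gb(BOM2_ADDED_RAM)                  # 640
bom2_dimms = sum(count for count, _ in BOM1_RAM + BOM2_ADDED_RAM)   # 16
bom2_cores = 2 * CORES_PER_CPU                                      # 48

print(f"BOM1: {bom1_total}GB, BOM2: {bom2_total}GB in {bom2_dimms} DIMMs, {bom2_cores} pCores")
```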

PCIe Slot Placement:

For the best performance, PCIe slot placement is really important. Things to consider are the speed and size of the devices and how the data will flow. Typically, if data has to flow between CPUs or through the C622 chipset, some latency is introduced, though it is minor. A larger video card, like the GTX 1650 SUPER, needs a PCIe slot that supports its length and doesn’t interfere with onboard connectors or RAM modules.

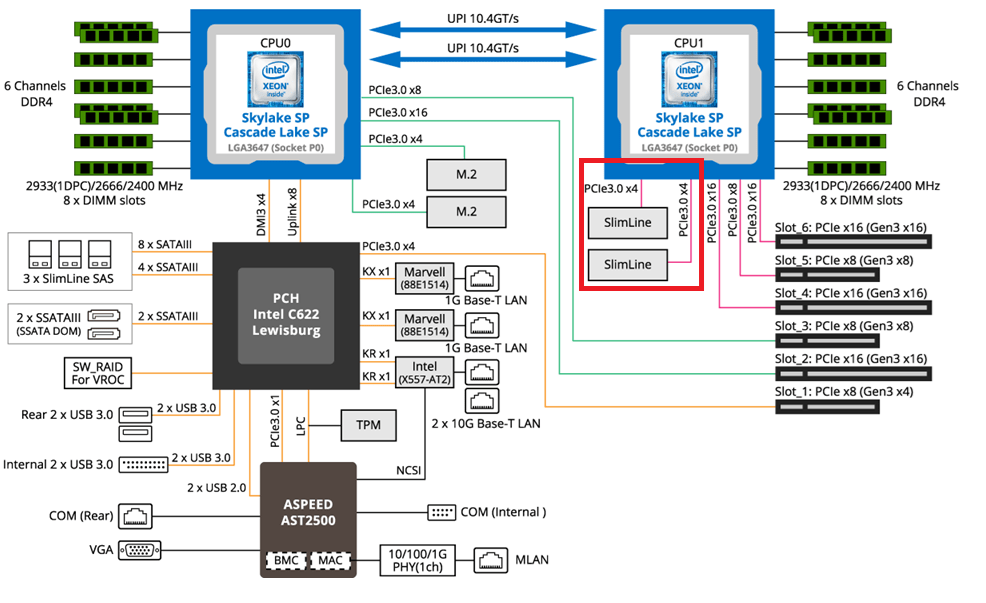

Using Fig-1 below, here is how I laid out my devices.

- Slot 2: video card. The card is two slots wide and covers Slot 1, the slowest PCIe slot.

- Slot 3: open

- Slots 4, 5, and 6: the RIITOP cards with the dual NVMe disks

- Slimline 1 (connected to CPU 1): my two SATA drives. These ports are typically for U.2 drives, but they also work with SATA drives.

Why this PCIe layout? By isolating all my primary disks on CPU1, their data neither crosses between CPUs nor passes through the C622 chipset. My two remaining NVMe disks are attached to CPU0. They have little impact on my VCF environment, as one boots the system and the other hosts non-critical VCF VMs.

Other Thoughts:

- I did look for other mobos, workstations, and servers but most were really expensive. The upgrades I had to choose from were a bit constrained due to the products I had on hand (DDR4 RAM and the Xeon 6252 LGA-3647 CPUs). This narrowed what I could select from.

- Adding the RIITOP cards added quite a bit of expense to this deployment, so look for mobos that support bifurcation and match your needs. Even so, this combination plus the additional parts cost less than half as much as simply upgrading the RAM modules.

- The Gigabyte mobo requires 2 CPUs if you want to use all the PCIe slots.

- Updating the Gigabyte firmware and BMC was a bit wonky. I’ve seen and blogged about these mobo issues before, hopefully their newer products have improved.

- The layout (Fig-1) of the Gigabyte mobo included support for SlimLine U.2 connectors. These will come in handy if I deploy my U.2 Optane Disks.

(Fig-1)

Now the fun starts: in the next posts I’ll reinstall Windows 11, performance tune it, and get my VCF 9 Workstation VMs operational.

VMware Workstation Gen 8: Environment Revitalization

In my last blog post, I shared my journey of upgrading to Workstation 17.6.4 (build-24832109) and making sure I could start up my Workstation VMs. In this installment, we dive deeper into getting the environment ready and performing a backup.

Keep in mind my Gen 8 Workstation has been powered down for almost a year, so there are some things I have to do to get it ready. This post is mostly informational; if you already have a stable environment, you can skip these sections. However, you may find value in these steps if you are trying to revitalize an environment that has been shut down for a long period of time.

Before we get started, a little background.

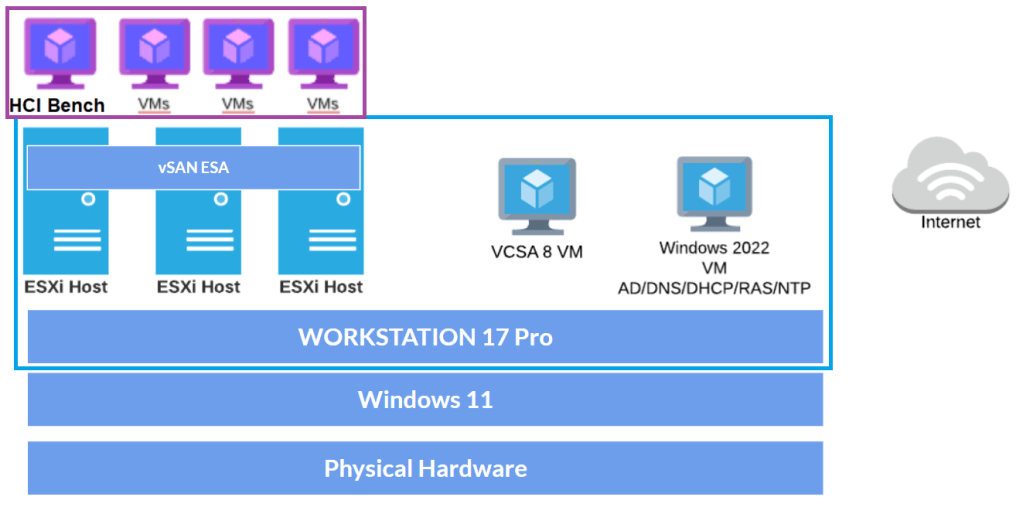

This revitalization follows my designs that were published on my Workstation Home Lab YouTube series. That series focused on building a nested home lab using Workstation 17 and vSphere 8. Nesting with Workstation can evoke comparisons to the movie Inception, where everything is multi-layered. Below is a brief overview of my Workstation layout, aimed at ensuring we all understand which layer we are at.

- Layer 1 – Physical Layer:

- The physical hardware I use to support this VMware Workstation environment is a supercharged computer with lots of RAM, CPU, and high-speed drives. More information here.

- Base OS is Windows 11

- VMware Workstation build is 17.6.4 build-24832109

- Layer 2 – Workstation VMs: (Blue Box in diagram)

- My key VMs run directly on Workstation.

- These VMs are: Win2022 Server, VCSA 8u2, and 3 x ESXi 8u2 Hosts

- The Win2022 Server has the following services: AD, DNS, DHCP, and RAS

- These VMs are currently suspended.

- Layer 3 – Workload VMs: (Purple box)

- The 3 nested ESXi hosts run several VMs

Let’s get started!

Challenges:

1) Changes to License keys.

The vExpert license keys for my vSphere environment have expired. Those keys were based on vSphere 8.0u2 and were only good for one year. Since the release of vSphere 8.0u2b, subscription keys are required, which means I’ll have to upgrade vSphere to apply my new license keys.

TIP: As a Broadcom VMware employee, I’m not eligible for VMUG or vExpert keys, but if you are interested in the process, check out the great write-up by Daniel Kerr.

2) Root Password is incorrect.

My root password into VCSA is not working and will need to be corrected.

3) VCSA Machine Certs need to be renewed.

There are several certificates that are expired and will need to be renewed. This is blocking me from being able to log on to the VCSA management console.

4) Time Sync needs to be updated.

I’ve changed location, so the time zone needs to be updated, along with NTP.

Here are the steps I took to resume my vSphere Environment.

The beauty of working with Workstation is the ability to back up and/or snapshot Workstation VMs as files and restore them when things fail. I took many snapshots and restored this lab a few times as I attempted to restart it. Restarting this lab was a bit of a learning process, as it took a few attempts to find everything that needed attention. Additionally, some of the processes you would follow in the real world didn’t apply here. So if you’re a bit concerned by some of the steps below, trust me: I tried the correct way first and it simply didn’t work out.

1) Start up Workstation VM AD222:

At this point – I have only resumed AD222.

The other VMs rely on the Windows 2022 VM’s services. First, I need to get this system up and validate that all of its services are operational.

- I used the Server Manager Dashboard as a quick way to see if everything is working properly.

- From this dashboard I can see that my services are working. When I checked the red areas, the only thing flagged was a non-issue: the Google updater was stopped.

- Run and Install Windows Updates

- Network Time Checks (NTP)

- All my VMs get their time from this AD server, so it being correct is important.

- I ensure the local time on the server is correct. From CLI I type in ‘w32tm /tz’ and confirm the time zone is correct.

- Using the ‘net time’ command I confirm the local date/time matches the GUI clock in the Windows server.

- Using ‘w32tm /query /status’ I confirm that time is syncing properly

- Note: My time ‘Source’ is noted as ‘Local CMOS Clock’. This is okay for my private Workstation environment. Had this been production, we would have wanted a better time source.
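The time checks above are quick to run by hand. As a small illustration, the Python sketch below parses the `Key: Value` lines that `w32tm /query /status` prints and flags the ‘Local CMOS Clock’ source mentioned in the note. It assumes English output labels; `parse_w32tm_status`, `query_w32tm_status`, and `uses_local_clock` are hypothetical helper names, and the query only works when run on the Windows server itself.

```python
import subprocess


def parse_w32tm_status(output):
    """Turn the 'Key: Value' lines printed by `w32tm /query /status` into a dict.

    Only the first ':' splits a line, so values that contain clock times
    (for example '10:00:00 AM') stay intact.
    """
    status = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            status[key.strip()] = value.strip()
    return status


def query_w32tm_status():
    """Run w32tm on the Windows server and parse its output."""
    result = subprocess.run(
        ["w32tm", "/query", "/status"],
        capture_output=True, text=True, check=True,
    )
    return parse_w32tm_status(result.stdout)


def uses_local_clock(status):
    """True when the reported time source is the server's own CMOS clock."""
    return status.get("Source", "").startswith("Local CMOS Clock")
```

On a lab server like AD222, `uses_local_clock(query_w32tm_status())` returning True simply confirms the note above; in production you would want it to be False.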

2) Fix VCSA223 Server Root Password:

At this point, AD222 is powered on and I have resumed only VCSA223.

Though I was initially able to access VCSA via the vSphere Client, I eventually determined I was unable to log in to the VCSA appliance via DCUI, SSH, or management GUI. The root password was incorrect and needed to be reset.

To fix the password issue, I needed to gracefully shut down the VCSA appliance and follow KB 322247. In Workstation, I simply right-clicked the VCSA appliance > Power > Shut Down Guest.

3) Cannot access the VCSA GUI: Error 503 Service Not Available.

After fixing the VCSA password I was now able to access it via the SSH and DCUI consoles. However, I was unable to bring up the vSphere Client or the VCSA Management GUI. The management GUI simply stated ‘503 service not available’.

To resolve this issue, I used the following KBs:

- 344201 Verify and resolve expired vCenter Server certificates using the command line interface

- Used this KB to help determine which certificates needed attention.

- Found an expired machine certificate.

- 385107 vCert – Scripted vCenter expired certificate replacement

- Following this KB, I copied vCert to the VCSA appliance using WinSCP.

- I used the WinSCP adjustment in KB 326317 to help transfer the file.

- After I completed the install section I chose option 6 and reset all the certificates.

- Next I rebooted the VCSA appliance.

- After the reboot I was now able to access the VCSA vSphere Client and Management GUI.
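The KBs above do the real certificate work on the appliance. To illustrate only the date arithmetic behind an expired-certificate finding, here is a Python sketch that takes a certificate’s `notAfter` value (for example, the text after `notAfter=` in the output of `openssl x509 -noout -enddate`) and reports how many days remain. The helper names and certificate labels are hypothetical, and the 30-day warning window is an arbitrary assumption.

```python
import ssl
import time
from typing import Dict, List, Optional

SECONDS_PER_DAY = 86400


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days left before a certificate's notAfter time; negative once it has expired.

    `not_after` must use the OpenSSL-style format understood by
    ssl.cert_time_to_seconds, for example "Jan  5 09:34:43 2018 GMT".
    """
    expires = ssl.cert_time_to_seconds(not_after)
    if now is None:
        now = time.time()
    return (expires - now) / SECONDS_PER_DAY


def needs_renewal(certs: Dict[str, str], warn_days: float = 30,
                  now: Optional[float] = None) -> List[str]:
    """Names of certificates that are expired or expire within `warn_days`."""
    return sorted(name for name, not_after in certs.items()
                  if days_until_expiry(not_after, now) < warn_days)
```

A negative result from `days_until_expiry` corresponds to the expired machine certificate found with KB 344201.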

4) VCSA Management GUI Updates

- I accessed the VCSA Management GUI and validated/updated its NTP settings.

- Next I mounted the most recent VCSA ISO and updated the appliance to 8.0.3.24853646

5) Updating ESXi

- At this point only my AD and VCSA servers have been resumed. My ESXi hosts are still suspended.

- To start the update from 8.0.2ub to 8.0.3ue, I chose to resume and then immediately shut down all 3 ESXi hosts. This step may seem a bit harsh, but no matter how gracefully I tried to resume these VMs, I ran into issues.

- While shut down I mounted VMware-VMvisor-Installer-8.0U3e-24677879.x86_64.ISO and booted/upgraded each ESXi host.

6) License keys in VCSA

Now that everything was powered on, I was able to log in to the vSphere Client. The first thing I noticed was that the VMware keys (VCSA, vSAN, ESXi) had all expired.

I updated the license keys in this order:

- First – Update the VCSA License Key

- Second – Update the vSAN License Key

- Third – Update the ESXi Host License Key

7) Restarting vSAN

- When I shut down or suspend my Workstation Home lab, I always shut down my Workload VMs and do a proper shutdown of vSAN.

- After I confirmed all my hosts were licensed and connected properly, I simply went into the cluster > configure > vSAN Services.

8) Back up VMs

Now that my environment is working properly, it’s time to do a proper shutdown, remove all snapshots, and then take a backup of my Workstation VMs.

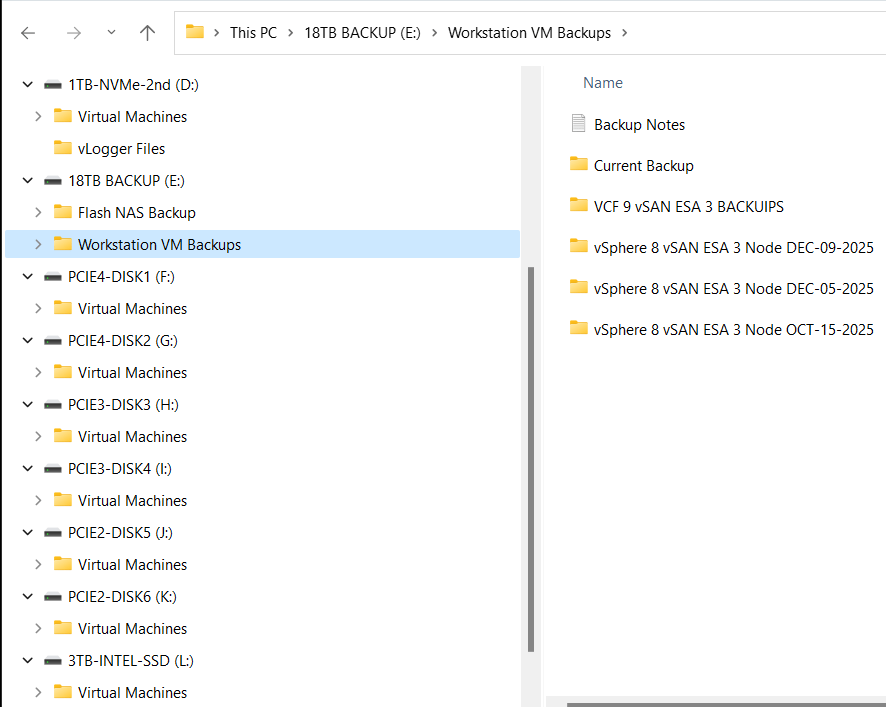

With Workstation, a simple Windows file copy from source to target is all that is needed. In my case, I have a large HDD where I store my backups. In Windows, I simply right-click the Workstation VMs folder and choose Copy. I then go to the target location, right-click, and choose Paste.

TIP: I keep track of my backups and notes with a simple notepad. This way I don’t forget their state.
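The copy-and-paste backup can also be scripted. The Python sketch below is one way to do it, assuming the VMs are already shut down (it refuses to copy if it finds `.lck` lock files, which Workstation keeps while a VM is open) and that a dated folder name suits your backup drive. In the spirit of the notepad tip, it also appends a line to a plain-text notes file. `backup_vm_folder` and `backup-notes.txt` are hypothetical names.

```python
import shutil
from datetime import date
from pathlib import Path


def backup_vm_folder(source, backup_root, note, today=None):
    """Copy `source` to `backup_root/<name>-<YYYY-MM-DD>` and log a one-line note."""
    today = today or date.today()
    src = Path(source)
    # Workstation holds *.lck lock files/folders while a VM is open; treat any as "still running".
    locks = list(src.rglob("*.lck"))
    if locks:
        raise RuntimeError(f"VM appears to be running, found lock: {locks[0]}")
    target = Path(backup_root) / f"{src.name}-{today.isoformat()}"
    # copytree creates missing parent folders and refuses to overwrite an
    # existing target, so an earlier backup from the same day is never clobbered.
    shutil.copytree(src, target)
    with open(Path(backup_root) / "backup-notes.txt", "a", encoding="utf-8") as log:
        log.write(f"{today.isoformat()}\t{target.name}\t{note}\n")
    return target
```

Something like `backup_vm_folder(r"D:\Workstation VMs", r"E:\Backups", "after Gen 8 revival")` (hypothetical paths) would produce a dated copy plus a matching line in the notes file.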

And that’s it: after being down for over a year, my Gen 8 Workstation Home Lab is now fully functional and backed up. I’ll continue to use it for vSphere 8 testing as I build out a new VCF 9 environment.

Thanks for reading, and please feel free to leave any questions or comments.