
VMware Workstation Gen 9: BOM2 P2 Windows 11 Install and setup

**Urgent Note:** The Gigabyte mobo in BOM2 was initially working well in my deployment. However, shortly after I completed this post, the mobo failed. I was able to return it, but the cost of a replacement had doubled. I’m currently looking for a different mobo and will post about it soon.

For the Gen 9 BOM2 project, I have opted for a clean installation of Windows 11 to ensure a baseline of stability and performance. This transition necessitates a full reconfiguration of both the operating system and my primary Workstation environment. In this post, I will outline the specific workflow and configuration steps I followed during the setup. Please note that this is not intended to be an exhaustive guide, but rather a technical log of my personal implementation process.

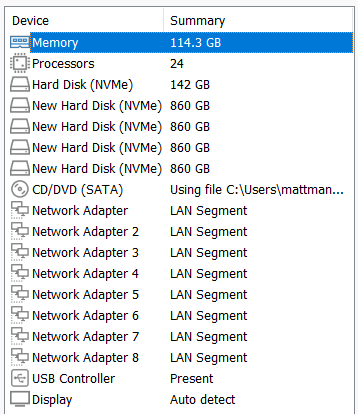

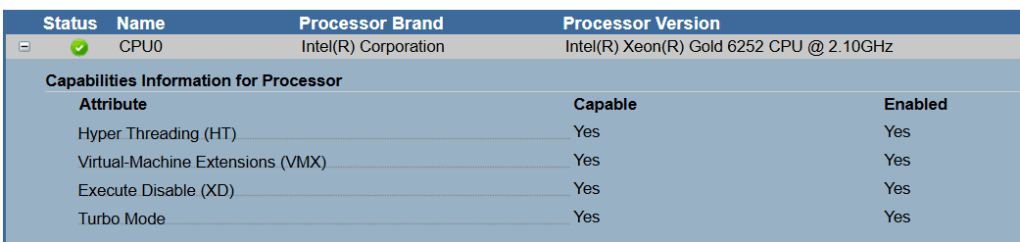

After backing up and ensuring my VMs are not encrypted, the first thing I do is install the new hardware and ensure all of it is recognized by the motherboard. Quite a few items are being carried over from BOM1, plus several new ones, so it’s important that they are all recognized before Windows 11 is installed.

The Gigabyte mobo has a web-based Embedded Management Software tool that allows me to ensure all hardware is recognized. After logging in, I find the Hardware section the most useful. Its PCIe section seems to be the most detailed, and it allows me to confirm my devices.

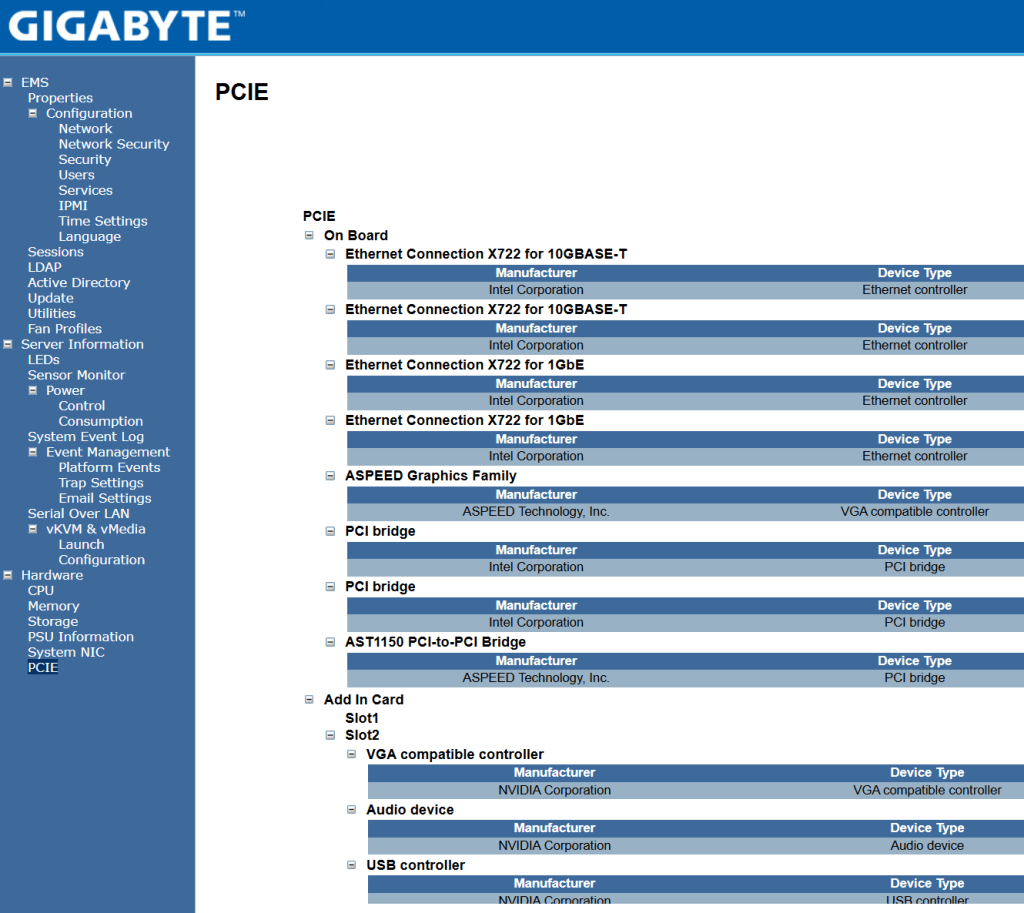

Next, I validate that Virtual Machine Extensions (VMX, Intel VT-x) are enabled in the CPU settings. This is a requirement for Workstation.
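Once Windows is installed, you can double-check this setting from the OS instead of rebooting into the BIOS. The Python sketch below is a minimal example, assuming the Win32 `IsProcessorFeaturePresent` flag `PF_VIRT_FIRMWARE_ENABLED` (value 21 in winnt.h) is a good enough signal; if a Windows hypervisor is already running, the result may not reflect the BIOS setting, so treat it as a hint rather than proof.

```python
import ctypes
import sys

# Assumption: 21 is PF_VIRT_FIRMWARE_ENABLED in winnt.h ("virtualization is
# enabled in the firmware and made available by the operating system").
PF_VIRT_FIRMWARE_ENABLED = 21


def vmx_enabled_in_firmware():
    """Return True/False on Windows, or None on any other platform."""
    if sys.platform != "win32":
        return None  # ctypes.windll only exists on Windows
    kernel32 = ctypes.windll.kernel32
    return bool(kernel32.IsProcessorFeaturePresent(PF_VIRT_FIRMWARE_ENABLED))


if __name__ == "__main__":
    state = vmx_enabled_in_firmware()
    if state is None:
        print("Not running on Windows; check the CPU settings in the BIOS/BMC instead.")
    else:
        print(f"VMX enabled in firmware: {state}")
```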

Once all the hardware is confirmed, I create my Windows 11 boot USB using Rufus and boot from it. For more information on this process, see my earlier video on creating it.

Next, I install Windows 11, and after it’s complete, I install the following drivers and updates:

- Install Intel Chipset drivers

- Install Intel NIC Drivers

- Run Windows updates

- Optionally, update the NVIDIA video drivers

At this point, all the correct drivers should be installed. I validate this by going into Device Manager and ensuring all devices have been recognized.

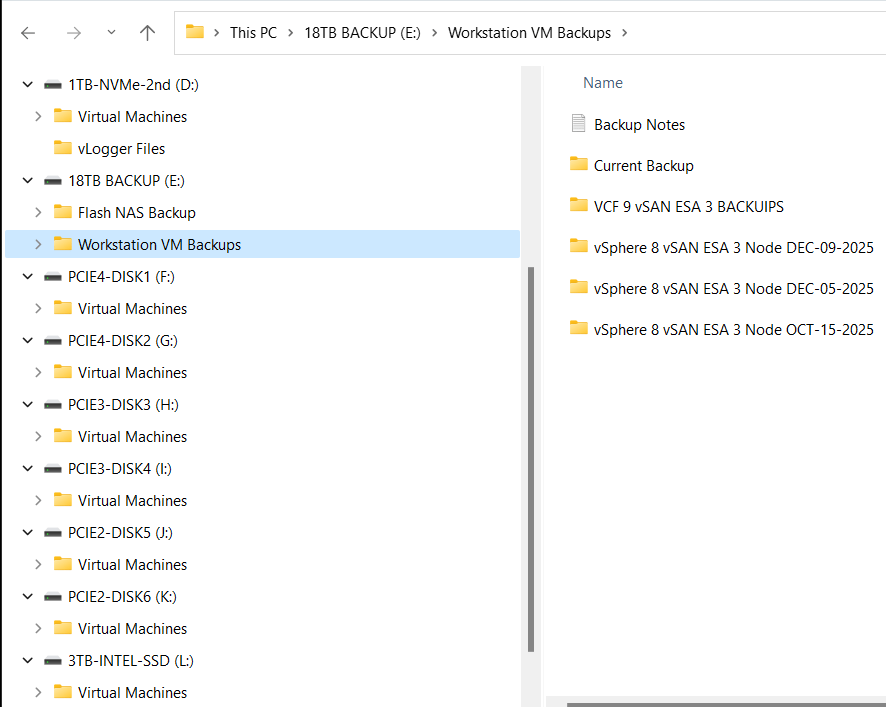

I then go into Disk Management and ensure all the drives have the same drive letters as they did in BOM1.
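If you rebuild often, the drive-letter check can be made repeatable. This is a minimal sketch that only tests whether each letter's root directory exists; the `expected` list in the main block is hypothetical, so replace it with the letters your BOM1 system actually used.

```python
import os
import string


def mounted_letters(exists=os.path.exists):
    """Drive letters whose root directory (for example 'D:\\') currently exists."""
    return [letter for letter in string.ascii_uppercase if exists(f"{letter}:\\")]


def compare_letters(expected, current):
    """Return (present, missing) for the expected drive letters."""
    present = [letter for letter in expected if letter in current]
    missing = [letter for letter in expected if letter not in current]
    return present, missing


if __name__ == "__main__":
    # Hypothetical BOM1 layout; replace with your own drive letters.
    expected = ["C", "D", "E", "F"]
    present, missing = compare_letters(expected, mounted_letters())
    print("present:", present, "missing:", missing)
```

Any letter reported as missing is one to fix in Disk Management before pointing Workstation at the old VM folders.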

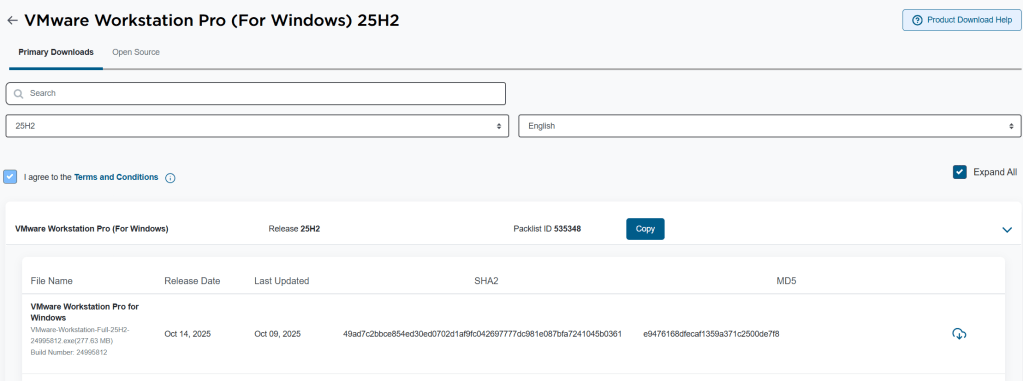

Workstation Pro is now free, and users can download it from the Broadcom support portal under FREE Downloads.

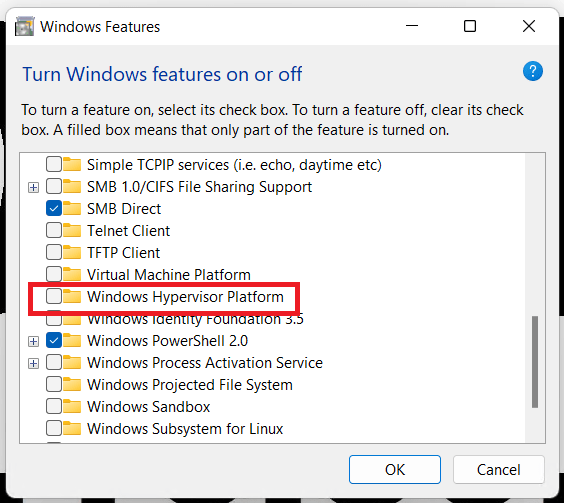

Before I install Workstation, I validate that Windows Hyper-V is not enabled. In Windows Features, I ensure that Hyper-V and Windows Hypervisor Platform are NOT checked.
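The same check can be scripted with DISM. This sketch assumes DISM's English output (a `State : Disabled` line) and the feature names `Microsoft-Hyper-V-All` and `HypervisorPlatform`; confirm the names with `dism /online /get-features` on your system, and run it from an elevated prompt, since DISM requires administrator rights.

```python
import subprocess

# Assumed DISM feature names for "Hyper-V" and "Windows Hypervisor Platform".
FEATURES = ["Microsoft-Hyper-V-All", "HypervisorPlatform"]


def parse_dism_state(output):
    """Return the value of the 'State :' line in DISM /Get-FeatureInfo output."""
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "State":
            return value.strip()
    return None  # no State line (feature unknown or localized output)


def feature_state(name):
    """Run DISM for one optional feature and return its State value."""
    result = subprocess.run(
        ["dism", "/online", "/get-featureinfo", f"/featurename:{name}"],
        capture_output=True,
        text=True,
        check=False,
    )
    return parse_dism_state(result.stdout)
```

For example, `[feature_state(f) for f in FEATURES]` should return `["Disabled", "Disabled"]` before Workstation is installed.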

Once confirmed, I install Workstation 25H2. For more information on how to install Workstation 25H2, see my blog.

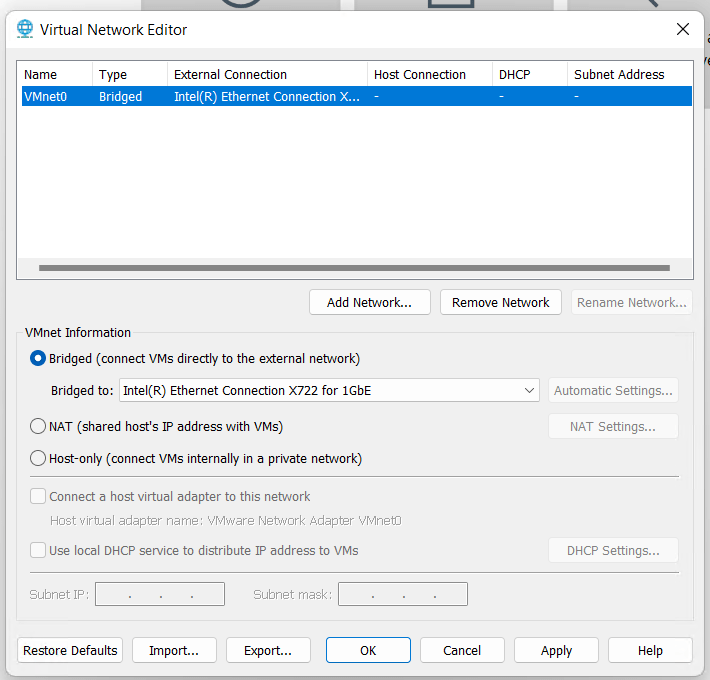

After Workstation has completed its installation, I open it and go to Edit > Virtual Network Editor. I delete the other VMnets and adjust VMnet0 (the bridged network) to bridge to the correct network adapter.

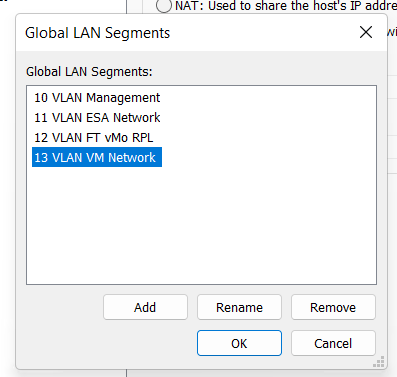

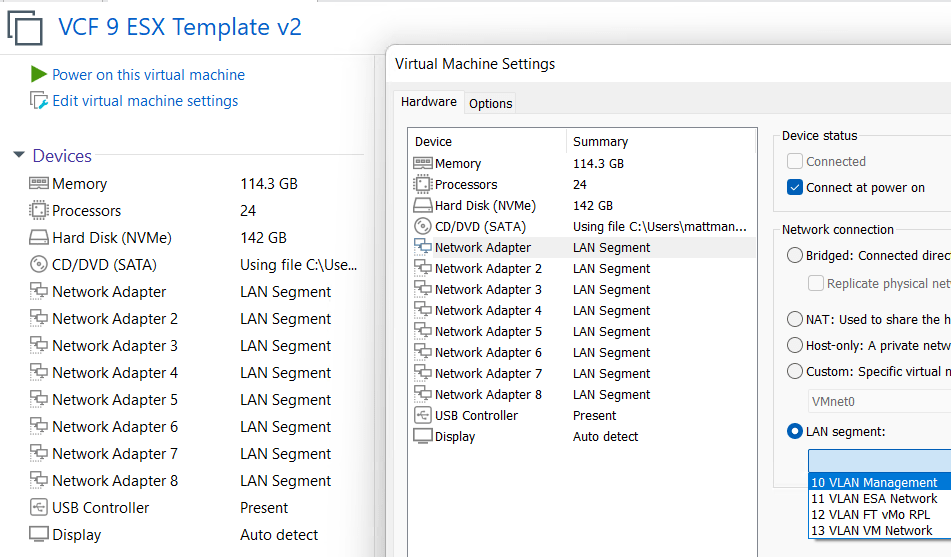

Next, I create a simple VM and add all the LAN Segments. For more information on this process, see my post on LAN Segments.

One at a time I add in each of my VMs and ensure their LAN Segments are aligned properly.
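To double-check segment assignments without opening every VM's settings, you could read the .vmx files directly. This sketch assumes Workstation records a LAN Segment adapter as `ethernetN.connectionType = "pvn"` with the segment ID in `ethernetN.pvnID`; it compares segment IDs, not the friendly names shown in the UI, and it only reads the files.

```python
import re
import sys
from pathlib import Path

# Matches lines such as: ethernet0.pvnID = "52 1a ..."
LINE = re.compile(r'^\s*(ethernet\d+)\.(\w+)\s*=\s*"(.*)"\s*$', re.IGNORECASE)


def lan_segments(vmx_text):
    """Map each ethernetN adapter to its LAN Segment pvnID, or None if not a segment."""
    adapters = {}
    for line in vmx_text.splitlines():
        match = LINE.match(line)
        if not match:
            continue
        nic, key, value = match.group(1).lower(), match.group(2).lower(), match.group(3)
        adapters.setdefault(nic, {})[key] = value
    return {
        nic: opts.get("pvnid") if opts.get("connectiontype", "").lower() == "pvn" else None
        for nic, opts in sorted(adapters.items())
    }


if __name__ == "__main__":
    # Usage: python lan_segments.py path/to/vm1.vmx path/to/vm2.vmx
    for vmx in sys.argv[1:]:
        print(vmx, lan_segments(Path(vmx).read_text(errors="replace")))
```

Adapters on the same segment across VMs should show identical IDs.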

This is what I love about Workstation: I was able to recover my entire VCF 9 environment and move it to a new system quite quickly. In my next post, I’ll cover how I set up Windows 11 for better performance.

VMware Workstation Gen 9: BOM2 P1 Motherboard upgrade


To take the next step in deploying a VCF 9 Simple stack with VCF Automation, I’m going to need to make some updates to my Workstation Home Lab. BOM1 simply doesn’t have enough RAM, and I’m a bit concerned about VCF Automation being CPU hungry. In this blog post I’ll cover some of the products I chose for BOM2.

Although my ASRock Rack motherboard (BOM1) was performing well, it was constrained by available memory capacity. I had additional 32 GB DDR4 modules on hand, but all RAM slots were already populated. I considered upgrading to higher-capacity DIMMs; however, the cost was prohibitive. Ultimately, replacing the motherboard proved to be a more cost-effective solution, allowing me to leverage the memory I already owned.

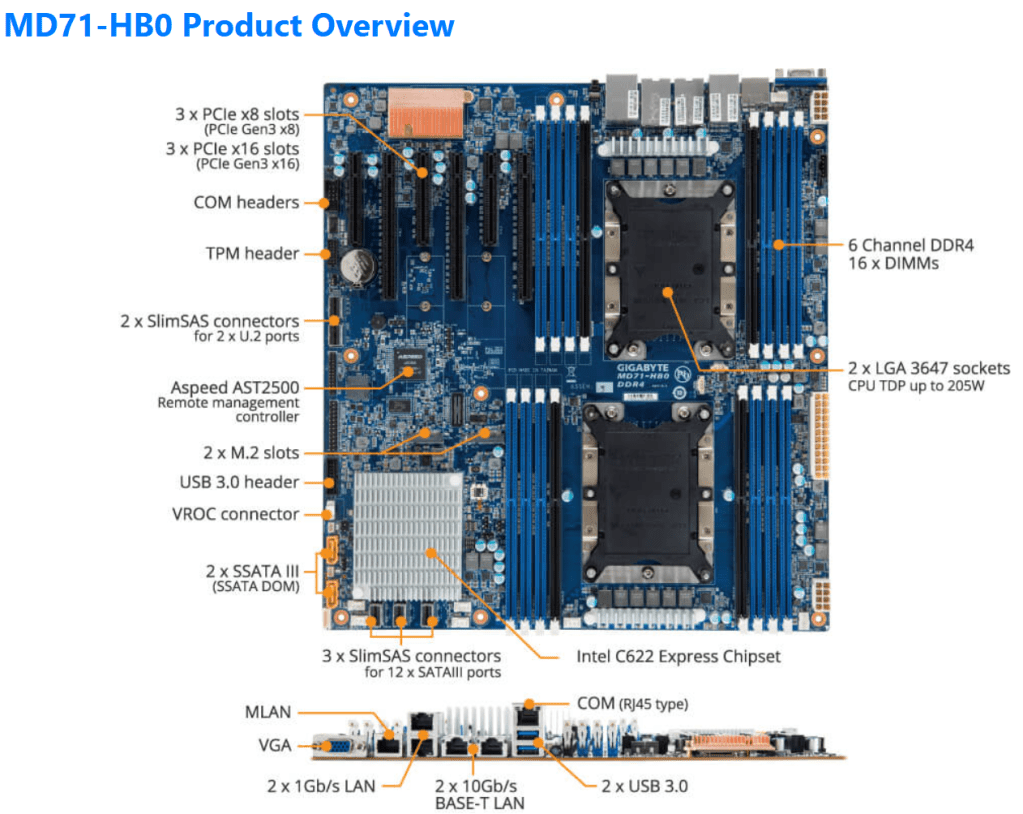

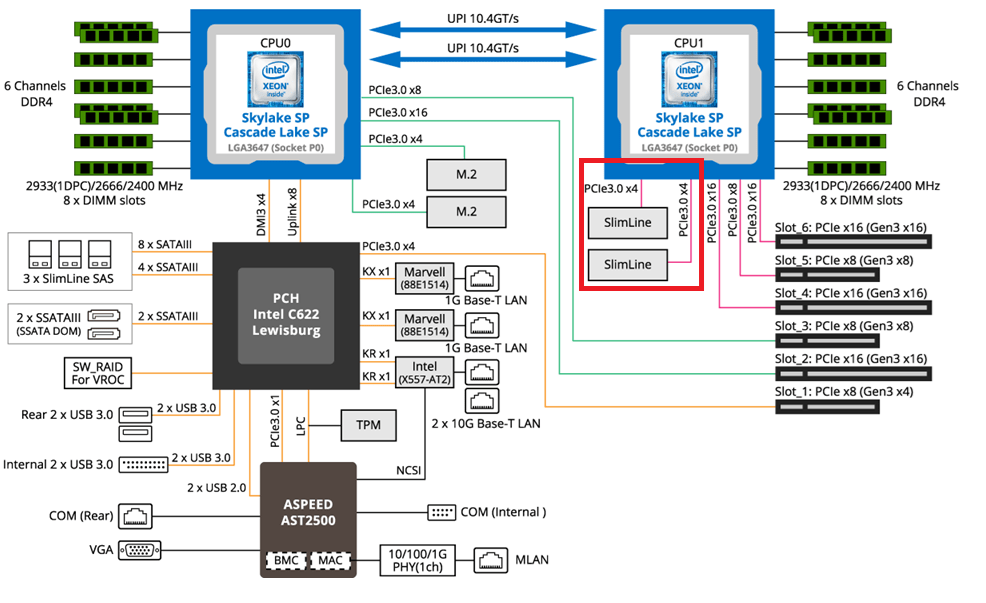

The mobo I chose was the Gigabyte MD71-HB0. It was rather affordable, but it lacks PCIe bifurcation, a feature I need to run dual NVMe disks in one PCIe slot. To overcome this, I chose the RIITOP M.2 NVMe SSD to PCI-e 3.1 adapter. These cards essentially emulate a bifurcated PCIe slot, which allows dual NVMe disks in a single PCIe slot.

The table below outlines the changes planned for BOM2. Very few products from the original configuration went unused, and after migrating components, the updated build will provide more than sufficient resources to meet my VCF 9 compute/RAM requirements.

Pro Tip: When assembling new hardware, I take a methodical, incremental approach. I install and validate one component at a time, which makes troubleshooting far easier if an issue arises. I typically start with the CPUs and a minimal amount of RAM, then scale up to the full memory configuration, followed by the video card, add-in cards, and then storage. It’s a practical application of the old adage: don’t bite off more than you can chew—or in this case, compute.

| KEEP from BOM1 | Added to create BOM2 | UNUSED |
|---|---|---|
| Case: Phanteks Enthoo Pro series PH-ES614PC_BK Black Steel | Mobo: Gigabyte MD71-HB0 | Mobo: ASRock Rack EPC621D8A |
| CPU: 1 x Xeon Gold ES 6252 (ES means Engineering Samples) 24 pCores | CPU: 1 x Xeon Gold ES 6252 (ES means Engineering Samples), new net total 48 pCores | NVMe Adapter: 3 x Supermicro PCI-E Add-On Card for up to two NVMe SSDs |
| Cooler: 1 x Noctua NH-D9 DX-3647 4U | Cooler: 1 x Noctua NH-D9 DX-3647 4U | 10GbE NIC: ASUS XG-C100C 10G Network Adapter |
| RAM: 384GB (4 x 64GB Samsung M393A8G40MB2-CVFBY, 4 x 32GB Micron MTA36ASF4G72PZ-2G9E2) | RAM: 8 x 32GB Micron MTA36ASF4G72PZ-2G9E2, new net total 640GB | |
| NVMe: 2 x 1TB NVMe (Win 11 Boot Disk and Workstation VMs) | NVMe Adapter: 3 x RIITOP M.2 NVMe SSD to PCI-e 3.1 | |
| NVMe: 6 x Sabrent 2TB ROCKET NVMe PCIe (Workstation VMs) | Disk Cables: 2 x Slimline SAS 4.0 SFF-8654 | |
| HDD: 1 x Seagate IronWolf Pro 18TB | | |
| SSD: 1 x 3.84TB Intel D3-S4510 (Workstation VMs) | | |
| Video Card: GIGABYTE GeForce GTX 1650 SUPER | | |
| Power Supply: Antec NeoECO Gold ZEN 700W | | |
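The RAM and core totals in the table are simple arithmetic. A few lines of Python reproduce them from the module counts listed in the table; nothing here is measured, it only re-adds the table.

```python
# Module counts and sizes (GB) copied from the BOM table.
BOM1_RAM = [(4, 64), (4, 32)]   # 4 x 64GB Samsung, 4 x 32GB Micron
BOM2_ADDED_RAM = [(8, 32)]      # 8 x 32GB Micron added for BOM2
CORES_PER_CPU = 24              # Xeon Gold 6252 ES


def total_gb(modules):
    """Sum count * size over (count, size_gb) pairs."""
    return sum(count * size for count, size in modules)


bom1_gb = total_gb(BOM1_RAM)
bom2_gb = bom1_gb + total_gb(BOM2_ADDED_RAM)
dimms = sum(count for count, _ in BOM1_RAM + BOM2_ADDED_RAM)
print(f"BOM1: {bom1_gb} GB | BOM2: {bom2_gb} GB across {dimms} DIMMs, {2 * CORES_PER_CPU} pCores")
# BOM1: 384 GB | BOM2: 640 GB across 16 DIMMs, 48 pCores
```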

PCIe Slot Placement:

For the best performance, PCIe slot placement is really important. Things to consider include the speed and size of each device and how its data will flow. Typically, if data has to flow between CPUs or through the C622 chipset, some latency is introduced, though it is minor. A larger video card, like the GTX 1650 SUPER, needs a PCIe slot that supports its length and doesn’t interfere with onboard connectors or RAM modules.

Using Fig-1 below, here is how I laid out my devices.

- Slot 2: Video card. The card is 2 slots wide and covers Slot 1, the slowest PCIe slot.

- Slot 3: Open

- Slots 4, 5, and 6: RIITOP cards with the dual NVMe disks

- Slimline 1 (connected to CPU 1): my 2 SATA drives. These ports are typically for U.2 drives, but they also work with SATA drives.

Why this PCIe layout? By isolating all my primary disks on CPU1, I avoid crossing between CPUs and going through the C622 chipset. My 2 x 1TB NVMe disks are attached to CPU0. They have little impact on my VCF environment, as one boots the system and the other hosts less important VCF VMs.

Other Thoughts:

- I did look for other mobos, workstations, and servers but most were really expensive. The upgrades I had to choose from were a bit constrained due to the products I had on hand (DDR4 RAM and the Xeon 6252 LGA-3647 CPUs). This narrowed what I could select from.

- Adding the RIITOP cards added quite a bit of expense to this deployment, so look for mobos that support bifurcation and match your needs. Even so, this combination plus the additional parts cost less than half as much as upgrading the RAM modules alone.

- The Gigabyte mobo requires 2 CPUs if you want to use all the PCIe slots.

- Updating the Gigabyte firmware and BMC was a bit wonky. I’ve seen and blogged about these mobo issues before, hopefully their newer products have improved.

- The layout (Fig-1) of the Gigabyte mobo included support for SlimLine U.2 connectors. These will come in handy if I deploy my U.2 Optane Disks.

(Fig-1)

Now the fun starts: in the next posts, I’ll reinstall Windows 11, performance-tune it, and get my VCF 9 Workstation VMs operational.

VMware Workstation Gen 9: FAQs

I compiled a list of frequently asked questions (FAQs) about my Gen 9 Workstation build. I’ll be updating it from time to time, but do feel free to reach out if you have additional questions.

Last Update: 02/04/2026

General FAQs

Why Generation 9? Starting with my Gen 7 build, the Gen number aligns to the version of vSphere it was designed for. So, Gen 9 = VCF 9. It also helps my readers track the Generations that interest them the most.

Why are you running Workstation vs. dedicated ESX servers? I’m pivoting my home lab strategy. I’ve moved from a complex multi-server setup to a streamlined, single-host configuration using VMware Workstation. Managing multiple hosts, though it gives real-world experience, wasn’t meeting my needs when it came to rolling back from a crash or testing different software versions. With Workstation, I can run multiple labs at once and use simple backups plus Workstation’s snapshot manager to recreate labs quite quickly. I find Workstation more adaptable, and it makes my lab time about learning rather than maintenance.

What are your goals with Gen 9? To develop and build a platform that can run the VCF 9 product stack for home lab use. See Gen 9 Part 1 for more information on goals.

Where can I find your Gen 9 Workstation Build series? All of my most popular content, including the Gen 9 Workstation builds can be found under Best of VMX.

What version of Workstation are you using? Currently, VMware Workstation 25H2. This may change over time; see my Home Lab BOM for more details.

How performant is running VCF 9 on Workstation? In my testing, I’ve had adequate success with a simple VCF install on BOM1. Clicking through the various applications didn’t seem to lag. I plan to expand to a full VCF install under BOM2 and will do some performance testing soon.

What core services are needed to support this VCF Deployment? Core services are supplied via Windows Server. They include AD, DNS, NTP, RAS, and DHCP, with DNS, NTP, and RAS being the most important.
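Since NTP is one of the most important services, it helps to confirm the Windows server actually answers NTP queries before starting a deployment. Below is a minimal SNTP client sketch using only the Python standard library; it sends one client-mode request and reads the server's transmit timestamp (bytes 40 to 47 of the reply). The server address 10.0.10.230 used in the example is an assumption based on the VLAN 10 addressing in Part 5.

```python
import socket
import struct

NTP_EPOCH_OFFSET = 2208988800  # seconds from 1900-01-01 (NTP era) to 1970-01-01 (Unix)


def build_request():
    """48-byte SNTP client request: LI=0, version 3, mode 3 (client)."""
    return b"\x1b" + bytes(47)


def parse_transmit_time(packet):
    """Return the server's transmit timestamp (bytes 40-47) as Unix time."""
    if len(packet) < 48:
        raise ValueError("NTP reply shorter than 48 bytes")
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def query(server, port=123, timeout=2.0):
    """Send one SNTP request over UDP and return the server's time as Unix time."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_request(), (server, port))
        reply, _ = sock.recvfrom(512)
    return parse_transmit_time(reply)
```

For example, `query("10.0.10.230") - time.time()` gives a rough clock offset in seconds (it ignores network round-trip time). A timeout usually means the NTP service or the Windows firewall is not letting UDP 123 through.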

BOM FAQs

Where can I find your Bill of Materials (BOM)? See my Home Lab BOM page.

Why 2 BOMs for Gen 9? Initially, I started with the hardware I had; this became BOM1. It worked perfectly for a simple VCF install. Eventually, I needed to expand my RAM to support the entire VCF stack. I had 32GB DDR4 modules on hand, but the BOM1 motherboard was fully populated. It was less expensive to buy a motherboard that had enough RAM slots, plus I could add a 2nd CPU. This upgrade became BOM2. Additionally, it gives my readers some ideas of different configurations that might work for them.

What can I run on BOM1? I have successfully deployed a simple VCF deployment, but I don’t recommend running VCF Automation on this BOM. See the Best of VMX section for a 9 part series.

What VCF 9 products are running in BOM1? Initial components include: VCSA, VCF Operations, VCF Collector, NSX Manager, Fleet Manager, and SDDC Manager all running on the 3 x Nested ESX Hosts.

What are your plans for BOM2? Currently, under development but I would like to see if I could push the full VCF stack to it.

What can I run on BOM2? Under development, updates soon.

Are you running both BOM configurations? No, I’m only running one at a time. Currently, I’m running BOM2.

Do I really need this much hardware? No, you don’t. The parts listed on my BOM are just how I did it. I used some parts I had on hand and some I bought used. My recommendation is to use what you have and upgrade when you need to.

What should I do to help with performance? Invest in high-speed disks, CPU cores, and RAM. I highly recommend lots of properly deployed NVMe disks for your nested ESX hosts.

What do I need for multiple NVMe drives? If you plan to put multiple NVMe drives in a single PCIe slot, you’ll need a motherboard that supports bifurcation OR an NVMe adapter that supports it. Not all NVMe adapters are the same, so do your research before buying.

VMware Workstation Gen 9: Part 9 Shutting down and starting up the environment

Deploying the VCF 9 environment onto Workstation was a great learning process. However, I use my server for other purposes and rarely run it 24/7. After the initial deployment, my first task is shutting down the environment, backing it up, and then starting it up again. In this blog post, I’ll document how I accomplish this.

NOTE: Users should license their VCF 9 environment before performing the steps below. If not, the last step, vSAN Shutdown, will cause an error. There is a simple workaround.

How to shutdown my VCF Environment.

My main reference for the VCF 9 shutdown procedures is the VCF 9 documentation on techdocs.broadcom.com. The section “Shutdown and Startup of VMware Cloud Foundation” is well detailed, and I have placed its main URL in the REF URLs below. For my environment, I need to focus on shutting down my Management Domain, as it also houses my Workload VMs.

Here is the order in which I shutdown my environment. This may change over time as I add other components.

| Shutdown Order | SDDC Component |
|---|---|
| 1 – Not needed, not deployed yet | VCF Automation |
| 2 – Not needed, not deployed yet | VCF Operations for Networks |
| 3 – From vcsa234, locate a VCF Operations collector appliance (opscollectorappliance). – Right-click the appliance and select Power > Shut down Guest OS. – In the confirmation dialog box, click Yes. | VCF Operations collector |
| 4 – Not needed, not deployed yet | VCF Operations for logs |
| 5 – Not needed, not deployed yet | VCF Identity Broker |
| 6 – From vcsa234, in the VMs and Templates inventory, locate the VCF Operations fleet management appliance (fleetmgmtappliance.nested.local). – Right-click the VCF Operations fleet management appliance and select Power > Shut down Guest OS. – In the confirmation dialog box, click Yes. | VCF Operations fleet management |
| 7 – You shut down VCF Operations by first taking the cluster offline and then shutting down the appliances of the VCF Operations cluster. – Log in to the VCF Operations administration UI at the https://vcops.nested.local/admin URL as the admin local user. – Take the VCF Operations cluster offline. On the System status page, click Take cluster offline. – In the Take cluster offline dialog box, provide the reason for the shutdown and click OK. Wait for the Cluster status to read Offline. This operation might take about an hour to complete. (With no data, mine took <10 mins.) – Log in to vCenter for the management domain at https://vcsa234.nested.local/ui as a user with the Administrator role. – After reading Broadcom KB 341964, I determined my next step is simply to shut down the vcops appliance. – In the VMs and Templates inventory, locate the VCF Operations appliance. – Right-click the appliance and select Power > Shut down Guest OS. – In the confirmation dialog box, click Yes. This operation takes several minutes to complete. | VCF Operations |
| 8 – Not Needed, not deployed yet | VMware Live Site Recovery for the management domain |
| 9 – Not Needed, not deployed yet | NSX Edge nodes |
| 10 – I continue shutting down the NSX infrastructure in the management domain and a workload domain by shutting down the one-node NSX Manager by using the vSphere Client. – Log in to vCenter for the management domain at https://vcsa234.nested.local/ui as a user with the Administrator role. – Identify the vCenter instance that runs NSX Manager. – In the VMs and Templates inventory, locate an NSX Manager (nsxmgr.nested.local) appliance. – Right-click the NSX Manager appliance and select Power > Shut down Guest OS. – In the confirmation dialog box, click Yes. – This operation takes several minutes to complete. | NSX Manager |
| 11 – Shut down the SDDC Manager appliance in the management domain by using the vSphere Client. – Log in to vCenter for the management domain at https://vcsa234.nested.local/ui as a user with the Administrator role. – In the VMs and templates inventory, expand the management domain vCenter Server tree and expand the management domain data center. – Expand the Management VMs folder. – Right-click the SDDC Manager appliance (SDDCMGR108.nested.local) and click Power > Shut down Guest OS. – In the confirmation dialog box, click Yes. This operation takes several minutes to complete. | SDDC Manager |
| 12 – You use the vSAN shutdown cluster wizard in the vSphere Client to gracefully shut down the vSAN clusters in a management domain. The wizard shuts down the vSAN storage and the ESX hosts added to the cluster. – Identify the cluster that hosts the management vCenter for this management domain. – This cluster must be shut down last. – Log in to vCenter for the management domain at https://vcsa234.nested.local/ui as a user with the Administrator role. – For a vSAN cluster, verify the vSAN health and resynchronization status. – In the Hosts and Clusters inventory, select the cluster and click the Monitor tab. – In the left pane, navigate to vSAN Skyline health and verify the status of each vSAN health check category. – In the left pane, under vSAN Resyncing objects, verify that all synchronization tasks are complete. – Shut down the vSAN cluster. In the inventory, right-click the vSAN cluster and select vSAN Shutdown cluster. – In the Shutdown Cluster wizard, verify that all pre-checks are green and click Next. Review the vCenter Server notice and click Next. – If vCenter is running on the selected cluster, note the orchestration host details. Connection to vCenter is lost because the vSAN shutdown cluster wizard shuts it down. The shutdown operation is complete after all ESXi hosts are stopped. – Enter a reason for performing the shutdown, and click Shutdown. | Shut Down vSAN and the ESX Hosts in the Management Domain OR Manually Shut Down and Restart the vSAN Cluster. If vSAN fails to shut down due to a license issue, go to the vSAN Cluster > Configure > Services and choose ‘Resume Shutdown’ (Fig-3). |
| Next, the ESX hosts power off, and then I can do a graceful shutdown of my Windows server AD230. In Workstation, simply right-click this VM > Power > Shut Down Guest. Once all Workstation VMs are powered off, I can run a backup or exit Workstation and power off my server. | Power off AD230 |

(Fig-3)
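The shutdown order above is exactly the reverse of the startup order in the restart table that follows, so both can be derived from one list. This minimal sketch uses component names from the tables; `DEPLOYED` reflects what the tables mark as deployed today, and AD230 stays outside the list because it is the last VM off and the first VM on.

```python
# Shutdown order from the table (first entry shuts down first).
SHUTDOWN_ORDER = [
    "VCF Automation",
    "VCF Operations for Networks",
    "VCF Operations collector",
    "VCF Operations for logs",
    "VCF Identity Broker",
    "VCF Operations fleet management",
    "VCF Operations",
    "VMware Live Site Recovery",
    "NSX Edge nodes",
    "NSX Manager",
    "SDDC Manager",
    "vSAN and ESX hosts",
]

# Everything else is "not deployed yet" in this lab.
DEPLOYED = {
    "VCF Operations collector",
    "VCF Operations fleet management",
    "VCF Operations",
    "NSX Manager",
    "SDDC Manager",
    "vSAN and ESX hosts",
}


def shutdown_plan(deployed):
    """Deployed components in shutdown order (AD230 goes off after this list)."""
    return [component for component in SHUTDOWN_ORDER if component in deployed]


def startup_plan(deployed):
    """Startup walks the same list backwards (AD230 comes up before this list)."""
    return list(reversed(shutdown_plan(deployed)))


for step, component in enumerate(shutdown_plan(DEPLOYED), 1):
    print(f"shutdown {step}: {component}")
```

Keeping a single ordered list means that when a new component such as VCF Automation is deployed, adding it to `DEPLOYED` updates both plans at once.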

How to restart my VCF Environment.

| Startup Order | SDDC Component |
|---|---|
| PRE-STEP: – Power on my Workstation server and start Workstation. – In Workstation, power on my AD230 VM and verify all the core services (AD, DNS, NTP, and RAS) are working. Start up the VCF Cluster: 1 – One at a time, power on each ESX host. – vCenter is started automatically. Wait until vCenter is running and the vSphere Client is available again. – Log in to vCenter at https://vcsa234.nested.local/ui as a user with the Administrator role. – Restart the vSAN cluster. In the Hosts and Clusters inventory, right-click the vSAN cluster and select vSAN Restart cluster. – In the Restart Cluster dialog box, click Restart. – Choose the vSAN cluster > Configure > vSAN > Services to see the vSAN Services page. This will display information about the restart process. – After the cluster has been restarted, check the vSAN health service and resynchronization status, and resolve any outstanding issues. Select the cluster and click the Monitor tab. – In the left pane, under vSAN > Resyncing objects, verify that all synchronization tasks are complete. – In the left pane, navigate to vSAN Skyline health and verify the status of each vSAN health check category. | Start vSAN and the ESX Hosts in the Management Domain OR Start ESX Hosts with NFS or Fibre Channel Storage in the Management Domain |
| 2 – From vcsa234, locate the sddcmgr108 appliance. – In the VMs and templates inventory, right-click the SDDC Manager appliance > Power > Power On. – Wait for this VM to boot. Check it by going to https://sddcmgr108.nested.local – As it’s getting ready, you may see “VMware Cloud Foundation is initializing…” – Eventually you’ll be prompted by the SDDC Manager page. – Exit this page. | SDDC Manager |
| 3 – From vcsa234, locate the nsxmgr VM, then right-click and select Power > Power on. – This operation takes several minutes to complete, until the NSX Manager cluster becomes fully operational again and its user interface is accessible. – Log in to NSX Manager for the management domain at https://nsxmgr.nested.local as admin. – Verify the system status of the NSX Manager cluster. – On the main navigation bar, click System. – In the left pane, navigate to Configuration > Appliances. – On the Appliances page, verify that the NSX Manager cluster has a Stable status and all NSX Manager nodes are available. Notes: – Give it time. – You may see the Cluster status go from Unavailable > Degraded; ultimately, you want it to show Available. – In the Node, under Service Status, you can click on the # next to Degraded. This will pop up the Appliance details and show you which items are degraded. – If you click on Alarms, you can see which alarms might need to be addressed. | NSX Manager |
| 4 – Not Needed, not deployed yet | NSX Edge |
| 5 – Not Needed, not deployed yet | VMware Live Site Recovery |
| 6 – From vcsa234, locate the vcfops.nested.local appliance. – Following the order described in Broadcom KB 341964, for my environment I simply right-click the appliance and select Power > Power On. – Log in to the VCF Operations administration UI at the https://vcfops.nested.local/admin URL as the admin local user. – On the System status page, click Bring Cluster Online. This operation might take about an hour to complete. Notes: – Cluster Status may read ‘Going Online’ and then finally ‘Online’. – Node Status may start to appear, eventually showing ‘Running’ and ‘Online’. – Mine took <15 mins to come Online. | VCF Operations |
| 7 – From vcsa234, locate the VCF Operations fleet management appliance (fleetmgmtappliance.nested.local). Right-click the VCF Operations fleet management appliance and select Power > Power On. In the confirmation dialog box, click Yes. | VCF Operations fleet management |
| 8 – Not Needed, not deployed yet | VCF Identity Broker |
| 9 – Not Needed, not deployed yet | VCF Operations for logs |
| 10 – From vcsa234, locate a VCF Operations collector appliance (opscollectorappliance). Right-click the VCF Operations collector appliance and select Power > Power On. In the confirmation dialog box, click Yes. | VCF Operations collector |
| 11 – Not Needed, not deployed yet | VCF Operations for Networks |
| 12 – Not Needed, not deployed yet | VCF Automation |
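Much of the startup sequence is waiting: for SDDC Manager to answer, for NSX Manager to report a stable cluster. A polling helper can take over the refreshing. This is a sketch with the Python standard library; `nsx_cluster_stable()` assumes the NSX Manager API `GET /api/v1/cluster/status` returns `detailed_cluster_status.overall_status`, so verify that field against the NSX API reference for your version. The relaxed TLS context is for a lab with self-signed certificates only.

```python
import json
import ssl
import time
import urllib.error
import urllib.request


def wait_until(check, timeout=1800, interval=30, sleep=time.sleep, clock=time.monotonic):
    """Call check() every `interval` seconds until it returns True or `timeout` expires."""
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)


def lab_ssl_context():
    """Lab only: accept the appliances' self-signed certificates."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be disabled before CERT_NONE is allowed
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def url_responds(url, context=None):
    """True once the web server answers at all, even with an HTTP error status."""
    try:
        with urllib.request.urlopen(url, timeout=10, context=context):
            return True
    except urllib.error.HTTPError:
        return True  # e.g. 503 while services start: the VM is up but not ready
    except OSError:
        return False  # URLError, refused connection, or timeout: not reachable yet


def nsx_cluster_stable(body):
    """Assumed response shape of GET /api/v1/cluster/status on NSX Manager."""
    status = json.loads(body).get("detailed_cluster_status", {}).get("overall_status")
    return status == "STABLE"
```

For example, `wait_until(lambda: url_responds("https://sddcmgr108.nested.local", lab_ssl_context()))` waits up to 30 minutes for SDDC Manager to answer. Answering is not the same as ready (the “initializing” page comes first), so still confirm in the UI. For NSX Manager, you would fetch `https://nsxmgr.nested.local/api/v1/cluster/status` as admin and pass the body to `nsx_cluster_stable()`.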

REF:

VMware Workstation Gen 9: Part 7 Deploying VCF 9.0.1

Now that I have set up a VCF 9 Offline Depot and downloaded the installation media, it’s time to move on to installing VCF 9 on my Workstation environment. In this blog, I’ll document the steps I took to complete this.

PRE-Steps

1) One of the more important steps is making sure I back up my environment and delete any VM snapshots. This way, my environment is ready for deployment.

2) Make sure your Windows 11 PC power plan is set to High Performance and does not put the computer to sleep.

3) Next, since my hosts are brand new, they need their self-signed certificates regenerated. See the following articles:

- VCF Installer fails to add hosts during deployment due to hostname mismatch with subject alternative name

- Regenerate the Self-Signed Certificate on ESX Hosts

4) I didn’t set up all of the DNS names ahead of time; I prefer to create them as I go through the VCF Installer. However, I test all my current DNS settings and test the newly entered ones as I go.
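Testing DNS “as I go” can be reduced to one repeatable check: every planned FQDN should resolve forward to its IP, and every IP should resolve back to the same FQDN. This sketch uses the system resolver through Python's `socket` module, so it assumes the machine running it uses AD230 as its DNS server. The name-to-IP pairs you feed it come from your Planning and Resource Workbook; none are assumed here.

```python
import socket


def check_dns(planned, forward=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Return a list of problems for each planned {fqdn: ip} pair (empty list = all good)."""
    problems = []
    for fqdn, ip in planned.items():
        try:
            got_ip = forward(fqdn)
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            problems.append(f"{fqdn}: forward lookup failed ({exc})")
            continue
        if got_ip != ip:
            problems.append(f"{fqdn}: resolves to {got_ip}, expected {ip}")
        try:
            got_name = reverse(ip)[0]  # gethostbyaddr returns (name, aliases, addresses)
        except OSError as exc:  # socket.herror is a subclass of OSError
            problems.append(f"{ip}: reverse lookup failed ({exc})")
            continue
        if got_name.lower().rstrip(".") != fqdn.lower():
            problems.append(f"{ip}: reverse lookup gives {got_name}, expected {fqdn}")
    return problems
```

Usage: `check_dns({"vcsa234.nested.local": "<planned IP>"})` with your own entries; print the returned list and fix each item in DNS before moving to the next wizard page. Missing reverse (PTR) records are a common cause of validation warnings.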

5) Review the Planning and Resource Workbook.

6) Ensure the NTP Service is running on each of your hosts.

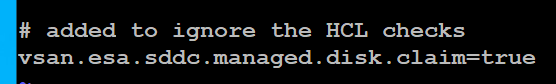

7) The VCF Installer 9.0.1 has some extra features to allow non-vSAN-certified disks to pass the validation section. However, nested hosts will fail the HCL checks. Simply add the line below to /etc/vmware/vcf/domainmanager/application-prod.properties and then restart the SDDC Domain Manager service with the command: systemctl restart domainmanager

This allows me to acknowledge the errors and move the deployment forward.
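If you redeploy the installer often, appending the property by hand risks duplicate lines. The sketch below appends a line only if an identical line is not already present, and keeps a `.bak` copy first. It assumes Python 3 is available on the appliance (otherwise run the same logic wherever you edit the file). The property itself is whatever line the step above calls for; the placeholder in the usage note is not a real property.

```python
import shutil
from pathlib import Path

# Path from the step above; editing it requires root on the VCF Installer appliance.
PROPS = Path("/etc/vmware/vcf/domainmanager/application-prod.properties")


def ensure_line(path, line, backup=True):
    """Append `line` unless an identical line exists. Returns True if the file changed."""
    path = Path(path)
    text = path.read_text() if path.exists() else ""
    if line in text.splitlines():
        return False  # already present, so running this again is harmless
    if backup and path.exists():
        shutil.copy2(path, path.with_name(path.name + ".bak"))
    if text and not text.endswith("\n"):
        text += "\n"  # make sure the new property starts on its own line
    path.write_text(text + line + "\n")
    return True
```

Usage: `ensure_line(PROPS, "<property line from the step above>")`, then `systemctl restart domainmanager` as in the original step.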

Installing VCF 9 with the VCF Installer

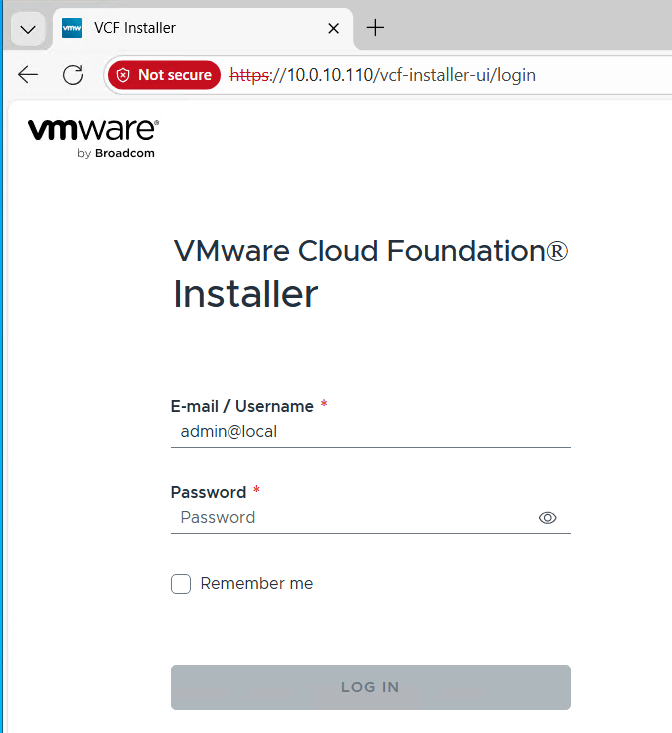

I log into the VCF Installer.

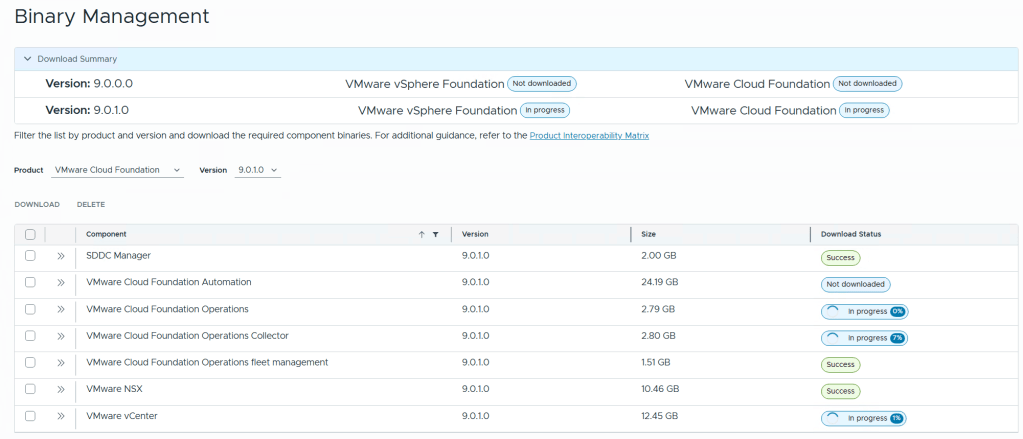

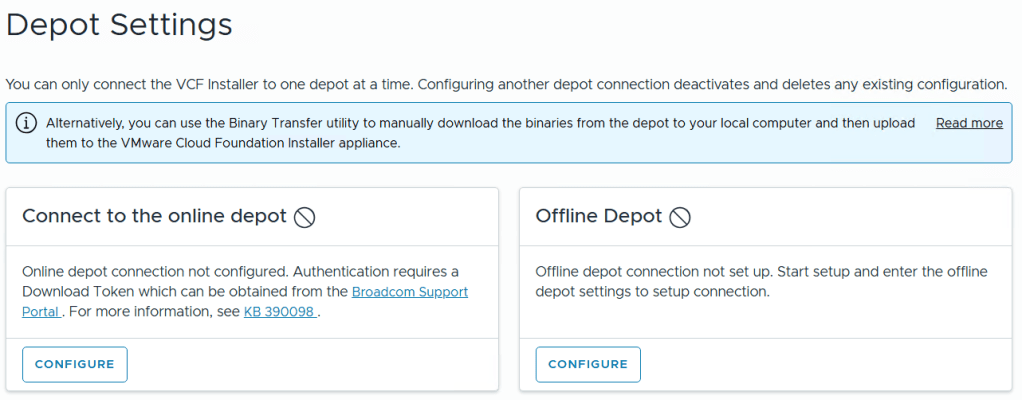

I click on ‘Depot Settings and Binary Management’

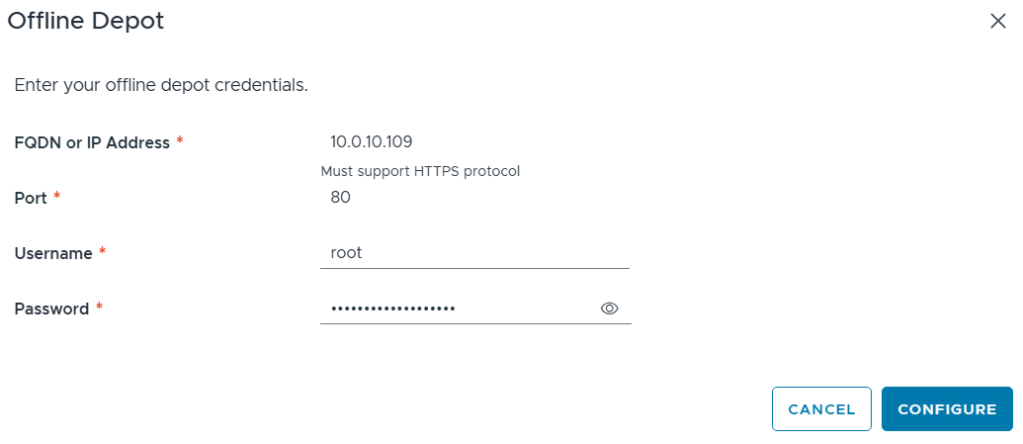

I click on ‘Configure’ under Offline Depot and then click Configure.

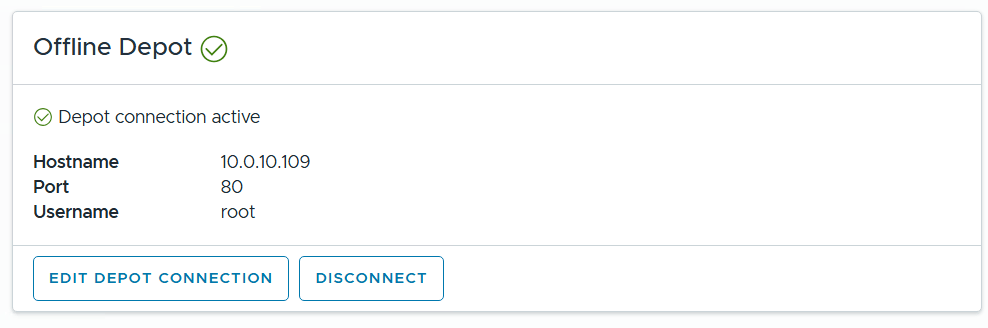

I confirm the Offline Depot connection is active.

I choose ‘9.0.1.0’ next to Version, select everything except VMware Cloud Automation, then click Download.

Allow the downloads to complete.

All selected components should state “Success” and the Download Summary for VCF should state “Partially Downloaded” when they are finished.

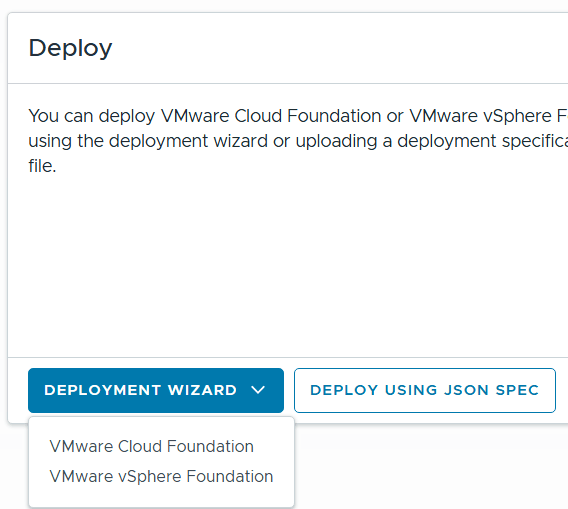

Click return home and choose VCF under Deployment Wizard.

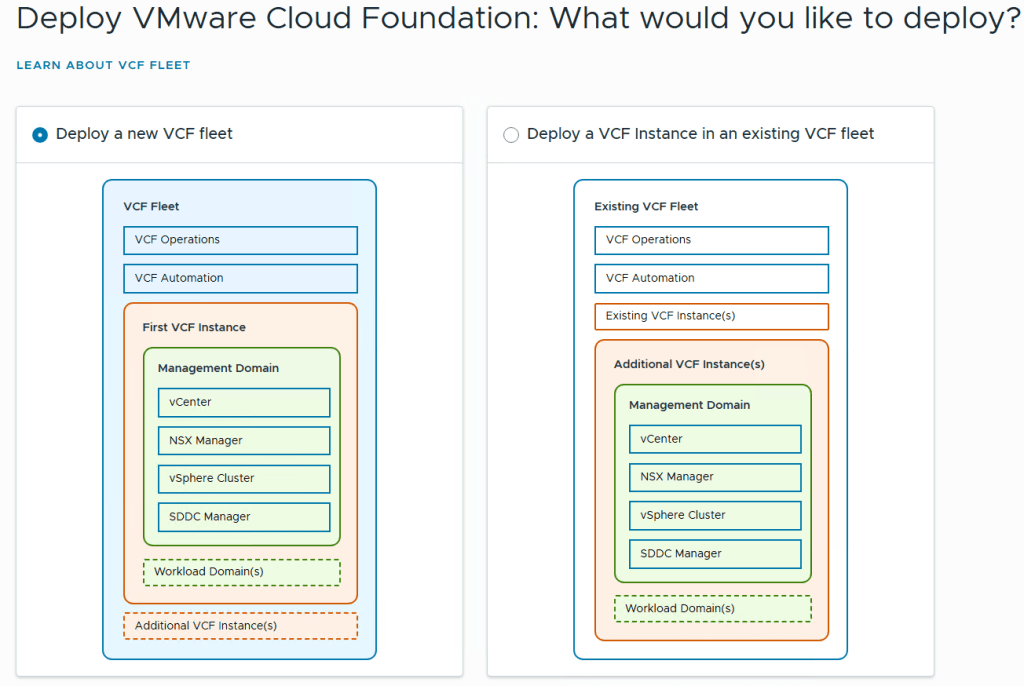

This is my first deployment so I’ll choose ‘Deploy a new VCF Fleet’

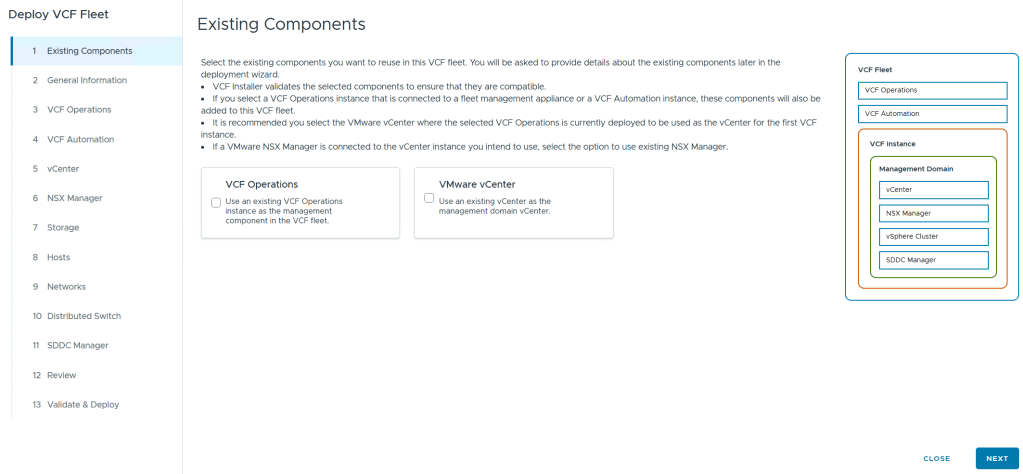

The Deploy VCF Fleet Wizard starts and I’ll input all the information for my deployment.

For Existing Components I simply choose next as I don’t have any.

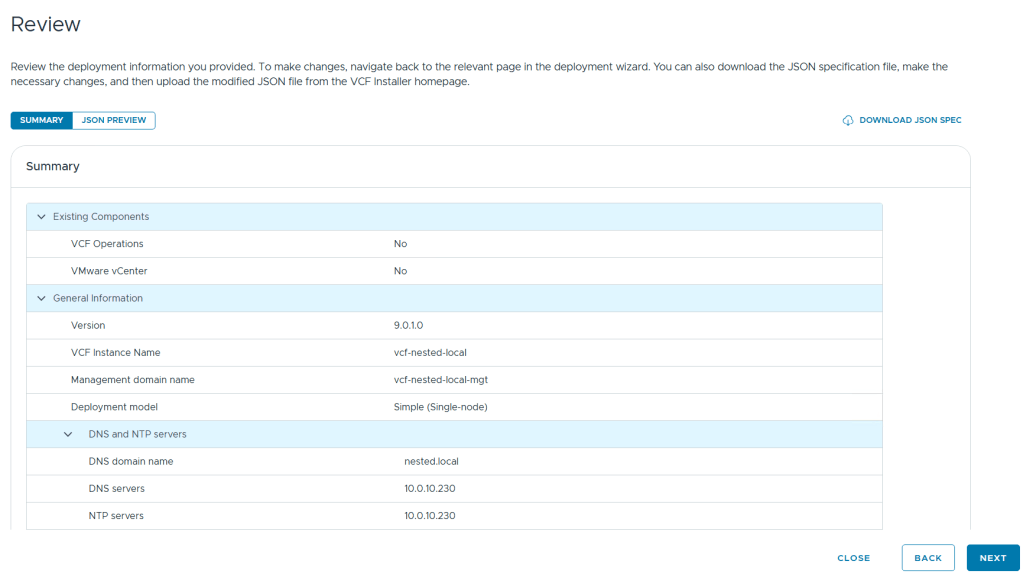

I filled in the information about my environment, chose Simple deployment, and clicked Next.

I filled out the VCF Operations information and created their DNS records. Once complete I clicked on next.

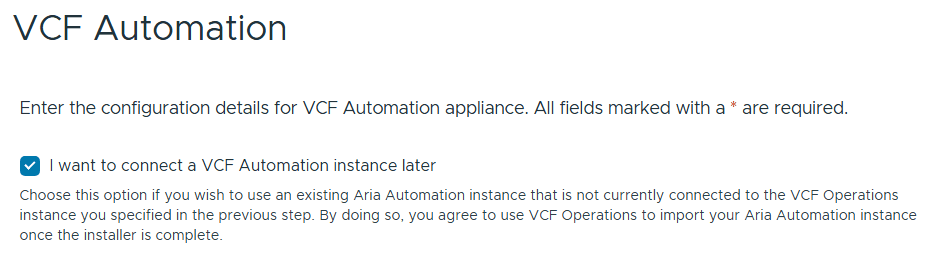

I chose “I want to connect a VCF Automation instance later” and clicked Next.

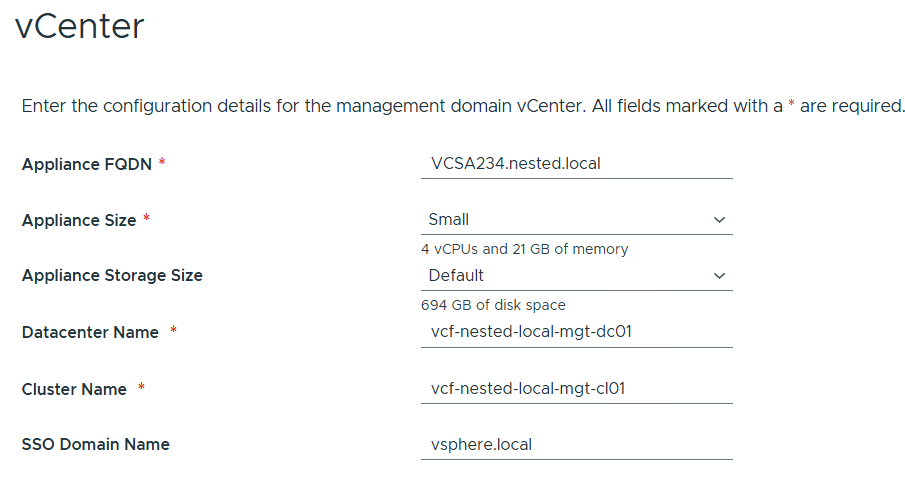

Filled out the information for vCenter

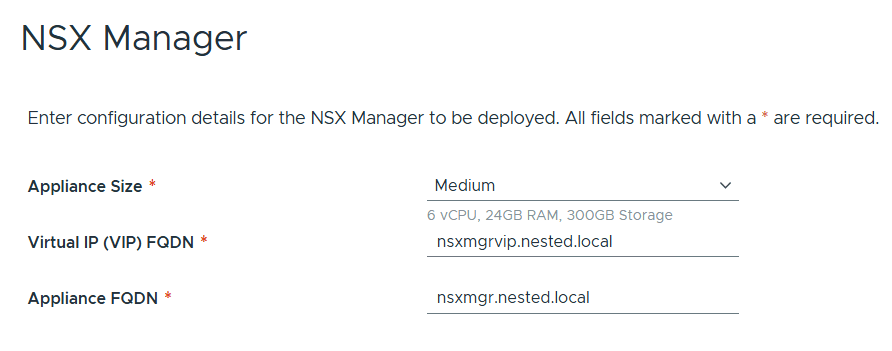

Entered the details for NSX Manager.

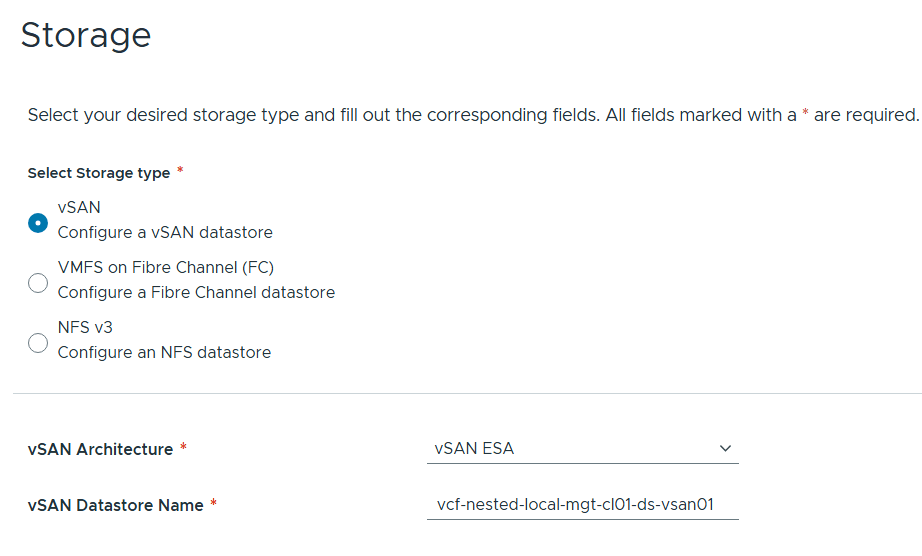

Left the storage items as default.

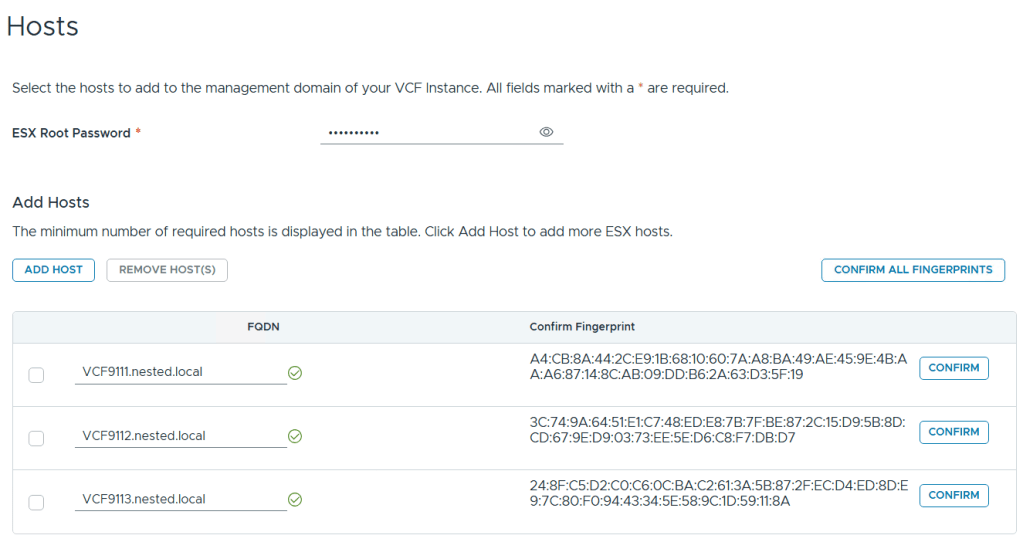

Added in my 3 x ESX 9 Hosts, confirmed all fingerprints, and clicked on next.

Note: if you skipped the prerequisite for the self-signed host certificates, you may want to go back and complete it before proceeding with this step.

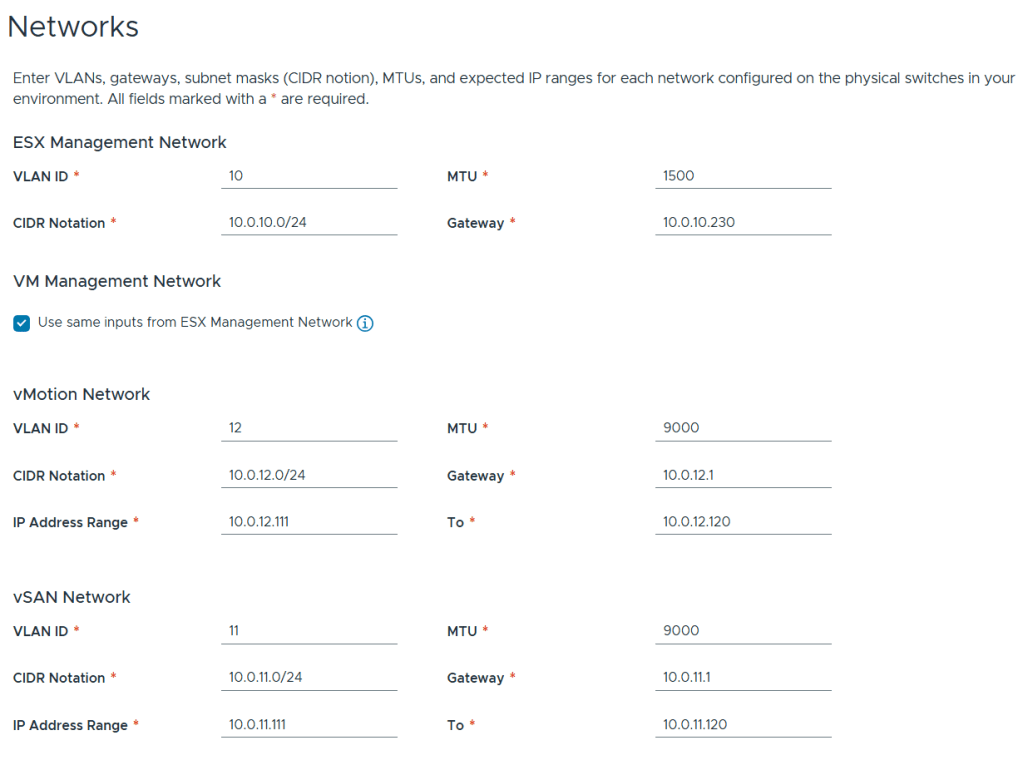

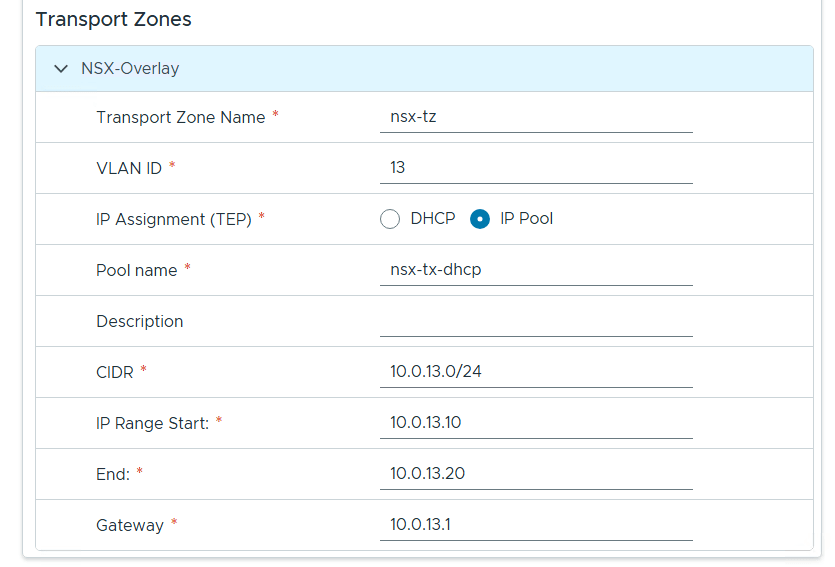

Filled out the network information based on our VLAN plan.

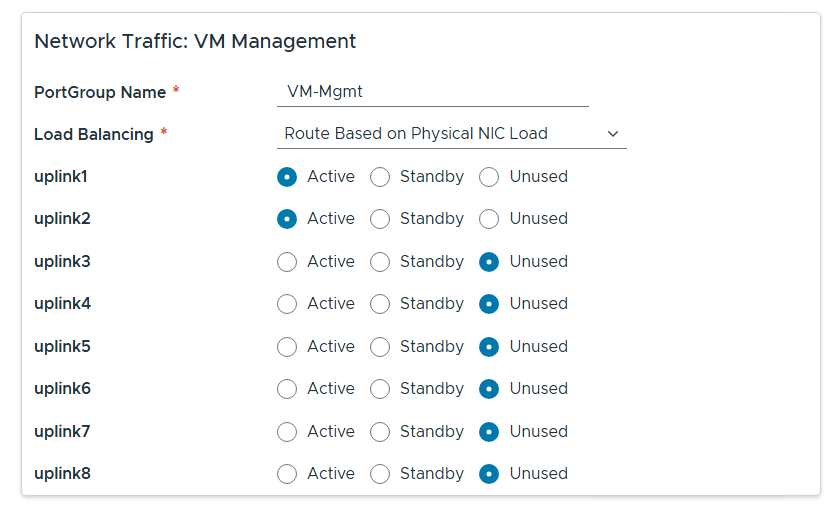

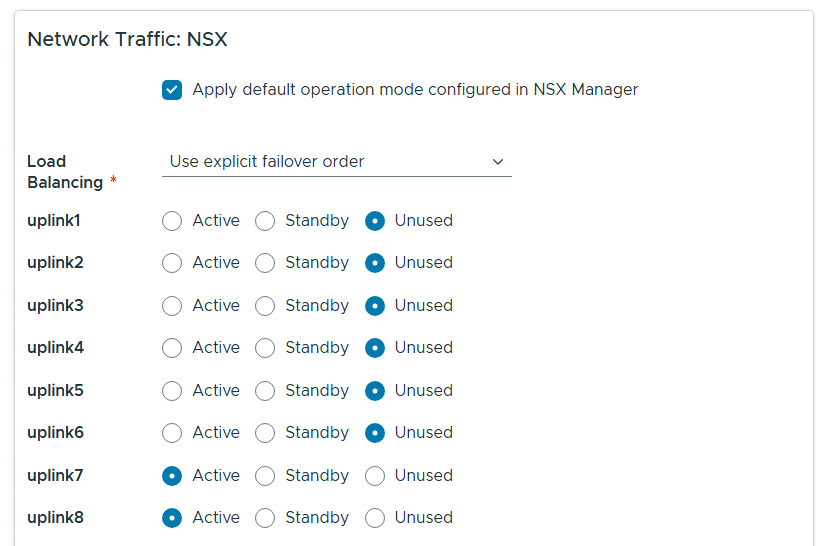

For Distributed Switch, I clicked Select for Custom Switch Configuration, set the MTU to 9000 and 8 uplinks, chose all services, then scrolled down.

I renamed each port group, chose the following network adapters and their networks, updated the NSX settings, then chose Next.

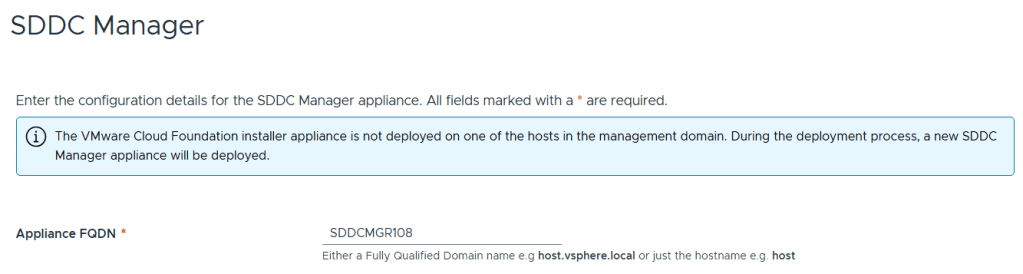

Entered the name of the new SDDC Manager and updated its name in DNS, then clicked Next.

Reviewed the deployment information and chose next.

TIP: Download this information as a JSON spec; it can save you a lot of typing if you have to deploy again.

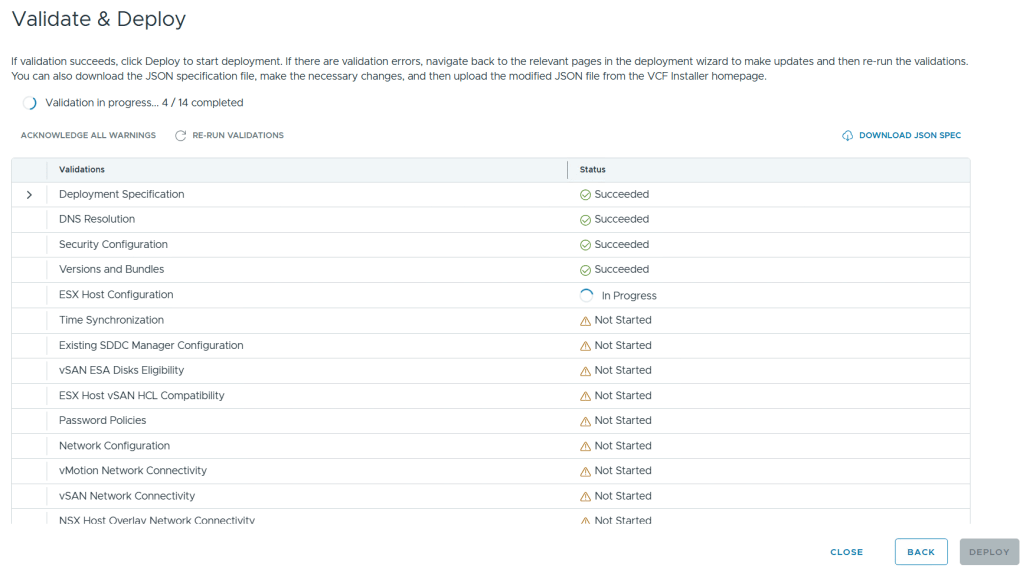

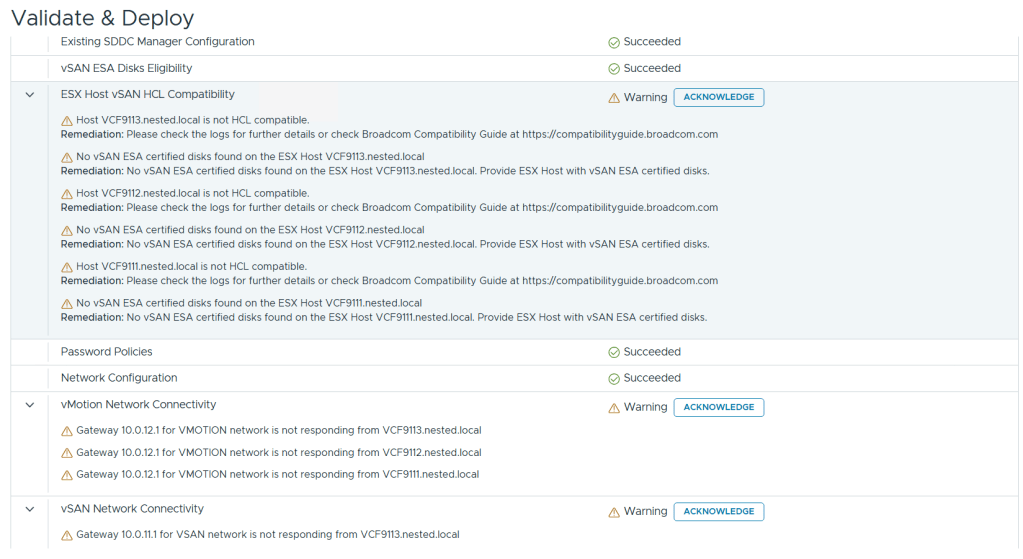

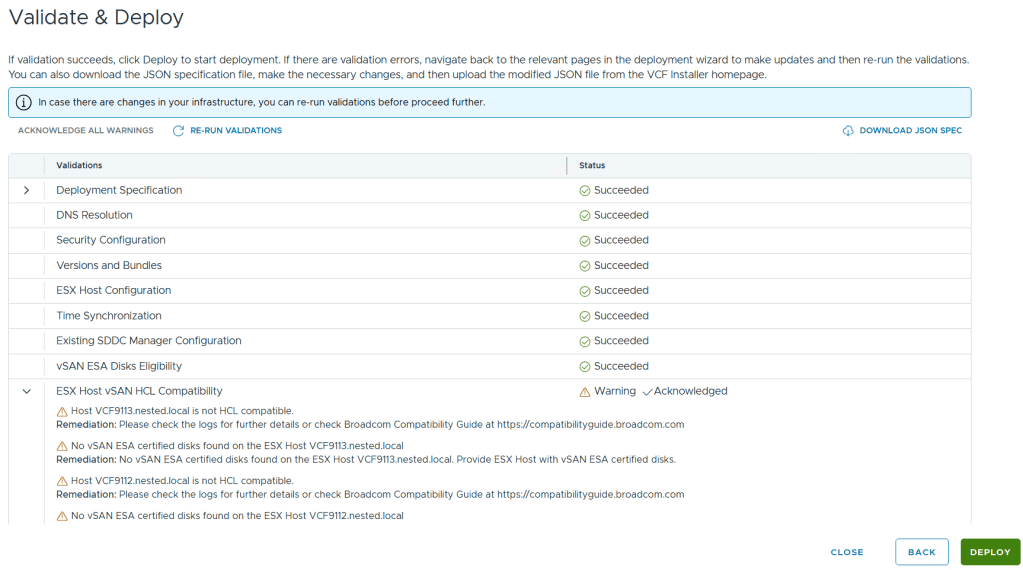

Allow it to validate the deployment information.

I reviewed the validation warnings, clicked “Acknowledge all Warnings” at the top, and clicked ‘DEPLOY’ to move to the next step.

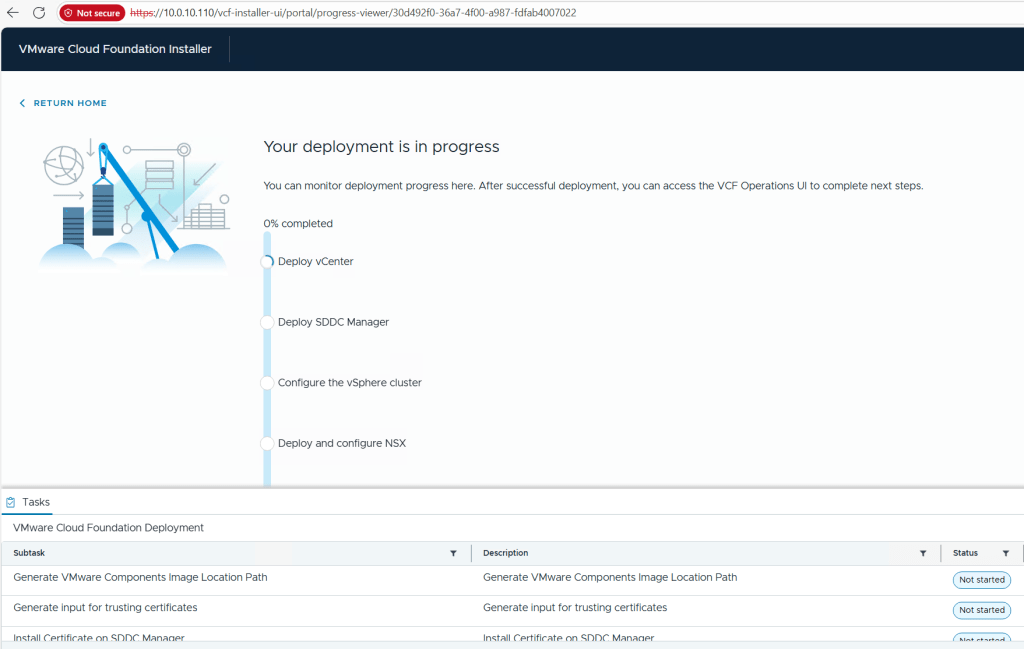

Allow the deployment to complete.

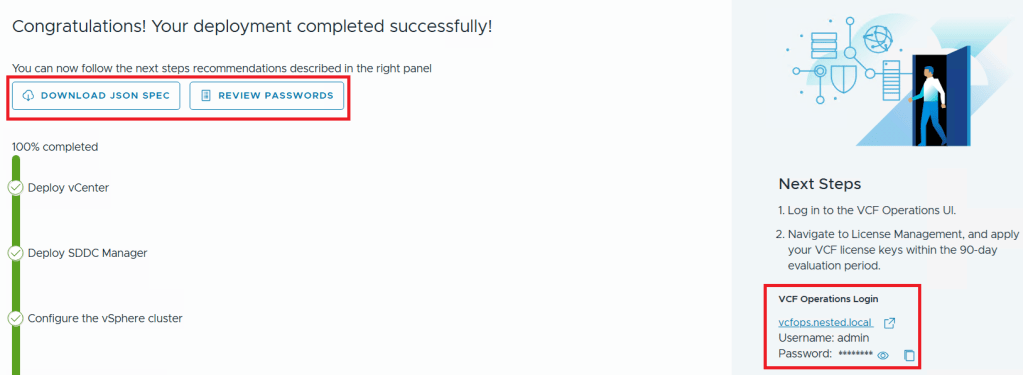

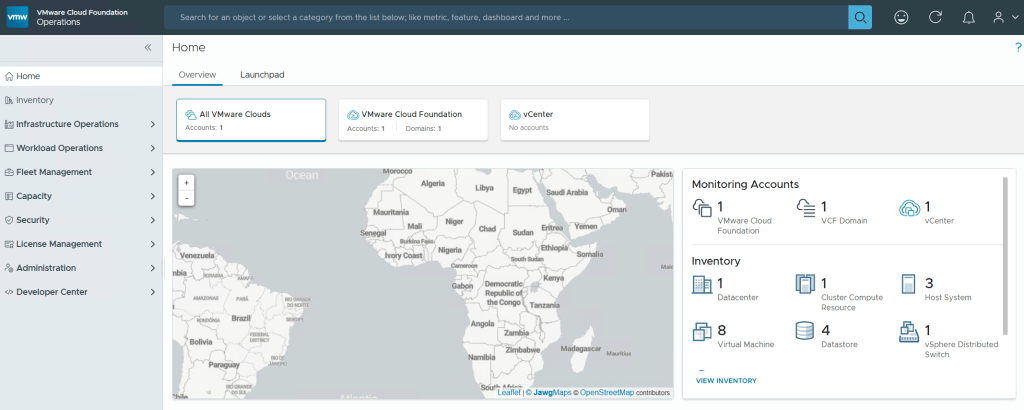

Once completed, I download the JSON spec, review and document the passwords (Fig-1), and then log into VCF Operations (Fig-2).

(Fig-1)

(Fig-2)

Now that I have a VCF 9.0.1 deployment complete I can move on to Day N tasks. Thanks for reading and reach out if you have any questions.

VMware Workstation Gen 9: Part 6 VCF Offline Depot

To deploy VCF 9 the VCF Installer needs access to the VCF installation media or binaries. This is done by enabling Depot Options in the VCF Installer. For users to move to the next part, they will need to complete this step using resources available to them. In this blog article I’m going to supply some resources to help users perform these functions.

Why only supply resources? When it comes to downloading and accessing VCF 9 installation media, Broadcom/VMware employees like me are not granted the same access as users. I have an internal process to access the installation media. These processes are not publicly available, nor would they be helpful to users. This is why I’m supplying information and resources to help users through this step.

What are the Depot choices in the VCF Installer?

Users have 2 options: 1) connect to an online depot or 2) use an offline depot.

What are the requirements for the 2 Depot options?

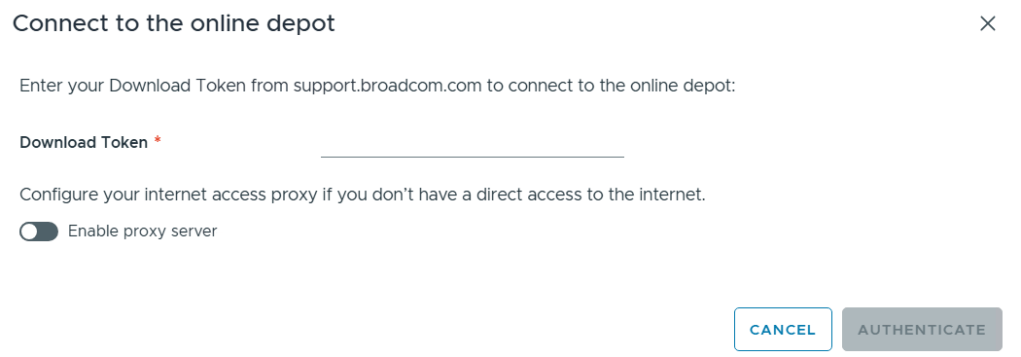

1) Connect to an online depot – Users need to have an entitled support.broadcom.com account and a download token. Once their token is authenticated, they can download.

See these URLs for more information:

2) Offline Depot – This option may be more common for users building out Home labs.

See these URLs for more information:

- Set Up an Offline Depot Web Server for VMware Cloud Foundation

- Set Up an Offline Depot Web Server for VMware Cloud Foundation << Use this method if you want to set up HTTPS on Photon OS.

- How to deploy VVF/VCF 9.0 using VMUG Advantage & VCP-VCF Certification Entitlement

- Setting up a VCF 9.0 Offline Depot

I’ll be using the Offline Depot method to download my binaries and in the next part I’ll be deploying VCF 9.0.1.

VMware Workstation Gen 9: Part 5 Deploying the VCF Installer with VLANs

The VCF Installer (aka SDDC Manager Appliance) is the appliance that will allow me to deploy VCF onto my newly created ESX hosts. The VCF Installer can be deployed onto an ESX host or directly on Workstation. There are a couple of challenges with this deployment in my home lab, and in this blog post I’ll cover how I overcame them. It should be noted that the modifications below are strictly for my home lab use.

Challenge 1: VLAN Support

By default, the VCF Installer doesn’t support VLANs. It’s a funny quandary, as VCF 9 requires VLANs. Most production environments will allow you to deploy the VCF Installer and route to a vSphere environment. However, in my Workstation home lab I use LAN Segments, which are local to Workstation. To overcome this issue, I’ll need to add VLAN support to the VCF Installer.

Challenge 2: Size Requirements

The installer takes up a massive 400+ GB of disk space, 16GB of RAM, and 4 vCPUs. The current configuration of my ESX hosts doesn’t have a datastore large enough to deploy it to, plus vSAN is not set up. To overcome this issue, I’ll need to deploy it as a Workstation VM and attach it to the correct LAN Segment.

In the steps below I’ll show you how I added a VLAN to the VCF Installer, deployed it directly on Workstation, and ensured it’s communicating with my ESX Hosts.

Deploy the VCF Installer

Download the VCF Installer OVA and place the file in a location where Workstation can access it.

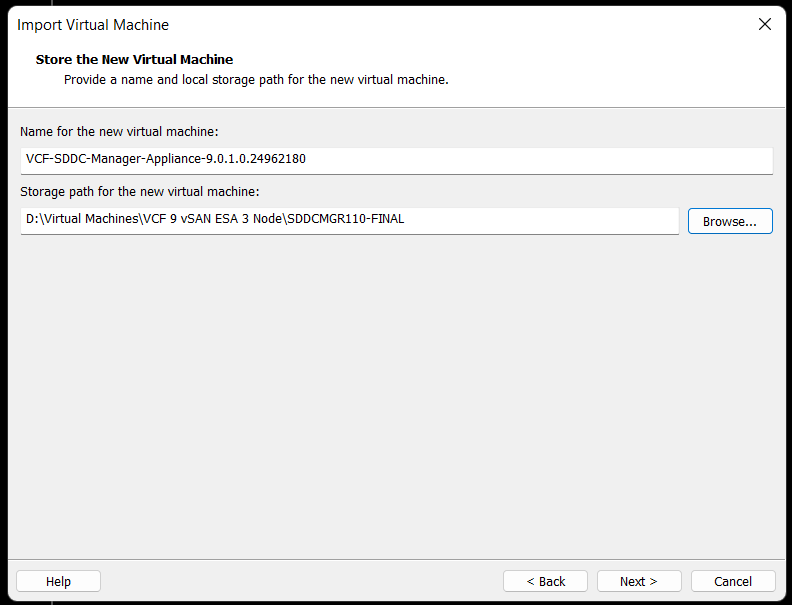

In Workstation click on File > Open. Choose the location of your OVA file and click open.

Check the Accept box > Next

Choose your location for the VCF Installer Appliance to be deployed. Additionally, you can change the name of the VM. Then click Next.

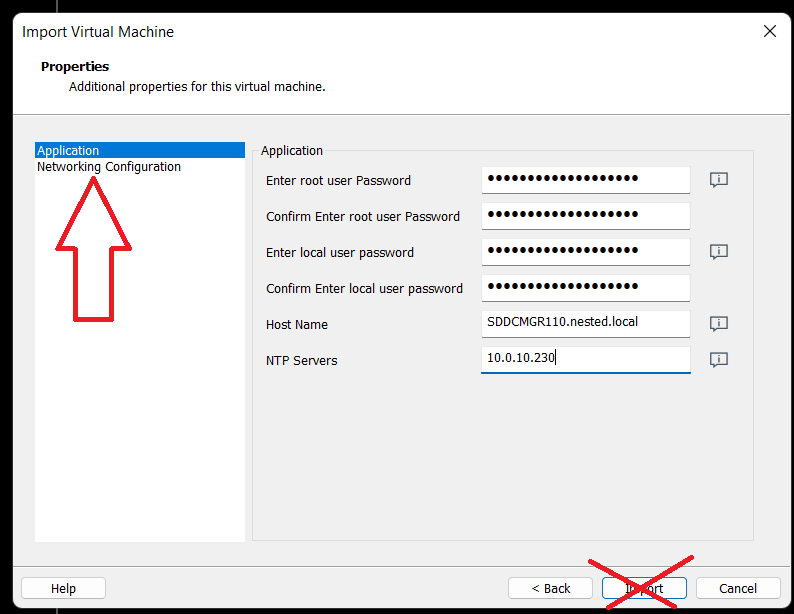

Fill in the passwords, hostname, and NTP Server. Do not click on Import at this time. Click on ‘Network Configuration’.

Enter the network configuration and click Import.

Allow the import to complete.

Allow the VM to boot.

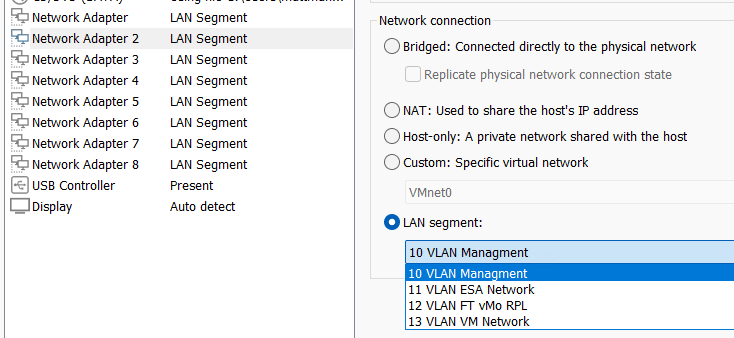

Change the VCF Installer network adapter settings to match the correct LAN Segment. In this case I chose 10 VLAN Management.

Set up a network adapter with VLAN support for the VCF Installer.

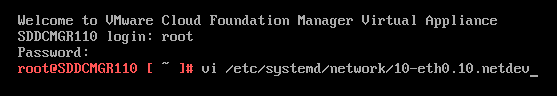

1) Login as root and create the following file.

vi /etc/systemd/network/10-eth0.10.netdev

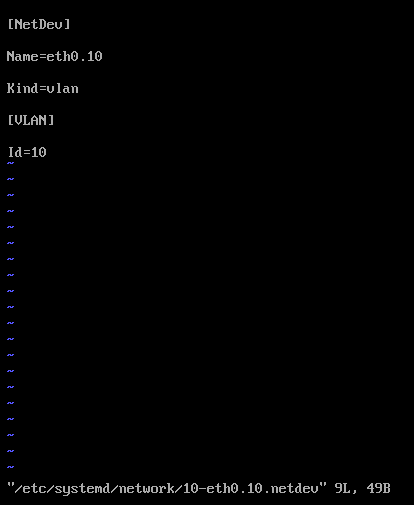

Press Insert, then add the following:

[NetDev]

Name=eth0.10

Kind=vlan

[VLAN]

Id=10

Press Escape, type :wq! and press Enter to save.
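As an alternative to typing the file in vi, the same `.netdev` content can be produced by a small shell function and redirected into place. This is a minimal sketch, assuming a POSIX shell on the appliance; the function name `vlan_netdev` is hypothetical, and it only prints the file so you can review it before writing it.

```shell
#!/bin/sh
# Hypothetical helper: print a systemd-networkd .netdev definition for an
# 802.1Q VLAN sub-interface named <parent>.<vlan-id>.
vlan_netdev() {
  local parent="$1" vid="$2"
  # The VLAN ID must be a number in the range 1-4094
  case "$vid" in
    ''|*[!0-9]*) echo "invalid VLAN id: $vid" >&2; return 1 ;;
  esac
  if [ "$vid" -lt 1 ] || [ "$vid" -gt 4094 ]; then
    echo "invalid VLAN id: $vid" >&2
    return 1
  fi
  cat <<EOF
[NetDev]
Name=${parent}.${vid}
Kind=vlan

[VLAN]
Id=${vid}
EOF
}
```

For example, `vlan_netdev eth0 10 > /etc/systemd/network/10-eth0.10.netdev` would write the same content as the file above.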

2) Create the following file.

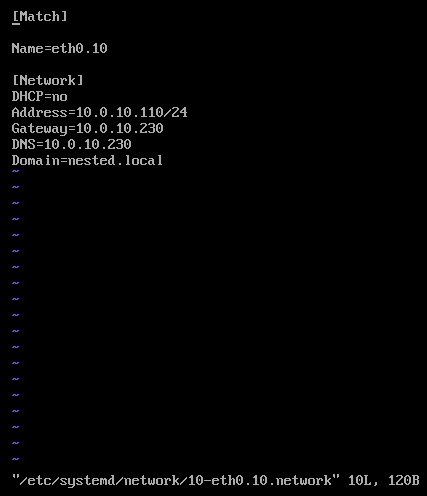

vi /etc/systemd/network/10-eth0.10.network

Press Insert and add the following:

[Match]

Name=eth0.10

[Network]

DHCP=no

Address=10.0.10.110/24

Gateway=10.0.10.230

DNS=10.0.10.230

Domain=nested.local

Press Escape, type :wq! and press Enter to save.
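The same approach works for the VLAN interface's `.network` file. A minimal sketch, assuming a POSIX shell; `vlan_network` is a hypothetical helper, and the values in the usage line are the ones from my lab.

```shell
#!/bin/sh
# Hypothetical helper: print a systemd-networkd .network file that gives a
# VLAN interface a static IPv4 address, gateway, DNS server, and domain.
vlan_network() {
  local ifname="$1" cidr="$2" gateway="$3" dns="$4" domain="$5"
  # Require an address with a prefix length, e.g. 10.0.10.110/24
  case "$cidr" in
    */*) ;;
    *) echo "address must include a prefix length: $cidr" >&2; return 1 ;;
  esac
  cat <<EOF
[Match]
Name=${ifname}

[Network]
DHCP=no
Address=${cidr}
Gateway=${gateway}
DNS=${dns}
Domain=${domain}
EOF
}
```

Usage: `vlan_network eth0.10 10.0.10.110/24 10.0.10.230 10.0.10.230 nested.local > /etc/systemd/network/10-eth0.10.network`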

3) Modify the original network file

vi /etc/systemd/network/10-eth0.network

Press Insert, remove the static IP address configuration, and change the configuration as follows:

[Match]

Name=eth0

[Network]

VLAN=eth0.10

Press Escape, type :wq! and press Enter to save.
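For the parent interface, the file only needs a `[Match]` section and one `VLAN=` line per sub-interface. Below is a hypothetical `parent_network` helper sketching this under the same POSIX-shell assumption; it accepts several VLAN names because systemd-networkd allows `VLAN=` to be repeated, which would make it easy to tag additional VLANs on eth0 later.

```shell
#!/bin/sh
# Hypothetical helper: print the parent interface's .network file.
# The parent keeps no IP address of its own; it only carries the listed
# VLAN sub-interfaces (one VLAN= line per sub-interface).
parent_network() {
  local parent="$1" vlan
  shift
  if [ "$#" -eq 0 ]; then
    echo "usage: parent_network <parent> <vlan-ifname>..." >&2
    return 1
  fi
  printf '[Match]\nName=%s\n\n[Network]\n' "$parent"
  for vlan in "$@"; do
    printf 'VLAN=%s\n' "$vlan"
  done
}
```

Usage: `parent_network eth0 eth0.10 > /etc/systemd/network/10-eth0.network`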

4) Update the permissions of the new and modified network files

chmod 644 /etc/systemd/network/10-eth0.10.netdev

chmod 644 /etc/systemd/network/10-eth0.10.network

chmod 644 /etc/systemd/network/10-eth0.network
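To confirm this step took effect, a small check can compare each file's permission bits against 644. This sketch assumes `stat -c '%a'` (GNU coreutils or BusyBox style) is available on the appliance; `check_mode_644` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: report any of the given files whose permission bits
# are not 644 (owner read/write, group and others read-only).
# Returns non-zero if a file is missing or has different permissions.
check_mode_644() {
  local rc=0 f mode
  for f in "$@"; do
    if [ ! -e "$f" ]; then
      echo "missing: $f"
      rc=1
      continue
    fi
    mode=$(stat -c '%a' "$f")   # octal permission bits, e.g. 644
    if [ "$mode" != "644" ]; then
      echo "wrong mode $mode: $f"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_mode_644 /etc/systemd/network/10-eth0.10.netdev /etc/systemd/network/10-eth0.10.network /etc/systemd/network/10-eth0.network && echo "permissions OK"`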

5) Restart the systemd-networkd service or reboot the VM.

systemctl restart systemd-networkd

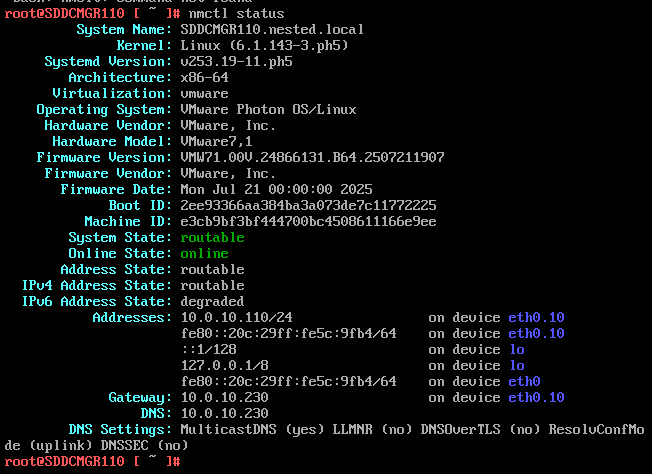

6) Check the network status of the newly created network eth0.10

nmctl status

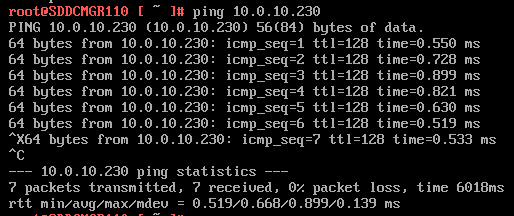
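`nmctl status` gives the overall picture. If you also want to confirm the VLAN tag itself, the detailed output of `ip -d link show eth0.10` includes a line such as `vlan protocol 802.1Q id 10`. The hypothetical helper below reads that output on stdin and succeeds only when the ID matches; it is a minimal sketch assuming iproute2's usual detail format.

```shell
#!/bin/sh
# Hypothetical helper: read `ip -d link show <ifname>` output on stdin and
# succeed only if it describes an 802.1Q VLAN with the expected ID.
# iproute2 prints a detail line such as:
#   vlan protocol 802.1Q id 10 <REORDER_HDR> ...
vlan_id_matches() {
  local want="$1"
  awk -v want="$want" '
    $1 == "vlan" && $2 == "protocol" && $4 == "id" { found = ($5 == want) }
    END { exit !found }
  '
}
```

Usage: `ip -d link show eth0.10 | vlan_id_matches 10 && echo "eth0.10 is tagged with VLAN 10"`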

7) Run a ping test from the VCF Installer appliance, and try an SSH session from another device on the same VLAN. In my case, I pinged 10.0.10.230.

Note – The firewall needs to be adjusted to allow other devices to ping the VCF Installer appliance.

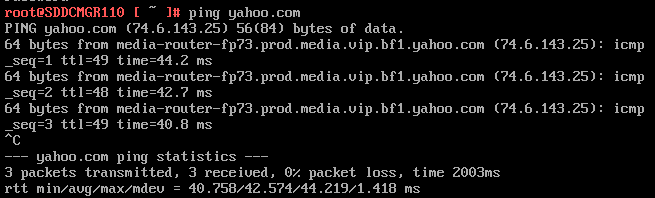

Next, I ping an internet location to confirm that this appliance can route to the internet.

8) Allow SSH access to the VCF Installer Appliance

Follow this BLOG to allow SSH Access.

From the Windows AD server or another device on the same network, use PuTTY to open an SSH session to the VCF Installer appliance.

Adjust the VCF Installer Firewall to allow inbound traffic to the new adapter

Note – Might be a good time to make a snapshot of this VM.

1) From SSH, check the firewall rules for the VCF Installer with the following command.

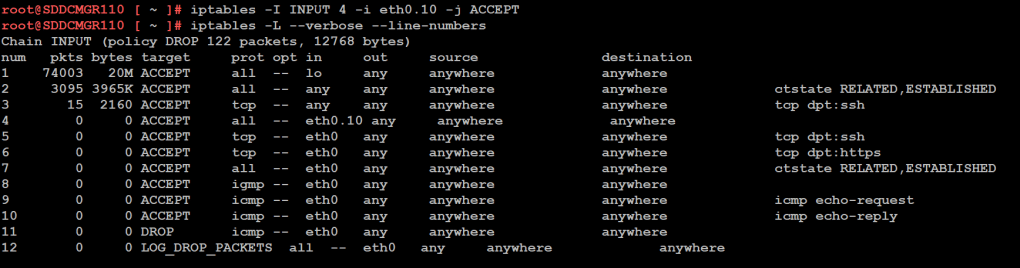

iptables -L --verbose --line-numbers

From this output I can see that eth0 is set up to allow access to https, ping, and other services. However, there are no rules for the eth0.10 adapter. I’ll need to adjust the firewall to allow this traffic.

Next, I insert a new rule allowing all traffic on eth0.10 and check the rule list.

iptables -I INPUT 4 -i eth0.10 -j ACCEPT

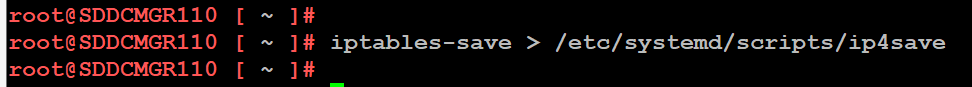
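Running the `iptables -I` command a second time would add a duplicate rule. Below is a sketch of an idempotent version, assuming an iptables build that supports `-C` (check), which exits 0 when a matching rule already exists. The helper `allow_interface` and the `IPTABLES` override variable are hypothetical; the override exists only so the logic can be dry-run with a stub.

```shell
#!/bin/sh
# Hypothetical helper: insert "accept everything arriving on <ifname>" at a
# given INPUT chain position, but only if an identical rule is not already
# present (iptables -C exits 0 when the rule exists).
allow_interface() {
  local ifname="$1" position="${2:-1}" ipt="${IPTABLES:-iptables}"
  if "$ipt" -C INPUT -i "$ifname" -j ACCEPT 2>/dev/null; then
    echo "rule for $ifname already present"
    return 0
  fi
  "$ipt" -I INPUT "$position" -i "$ifname" -j ACCEPT
}
```

Usage: `allow_interface eth0.10 4` performs the same insert as above the first time and does nothing on later runs.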

The firewall rules are not persistent, so changes made this way are lost on restart. To make the current firewall rules persist, I need to save them.

Save Config Commands
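As a general sketch of saving the running ruleset: `iptables-save` writes the rules in a format `iptables-restore` can load. The hypothetical `save_rules` helper below saves to a file you name and keeps a backup of the previous copy. Which file the appliance's firewall service restores from at boot is an assumption you must verify on the appliance itself (for example, by inspecting its iptables service unit) before relying on this; `IPTABLES_SAVE` is a hypothetical override for dry runs.

```shell
#!/bin/sh
# Hypothetical helper: save the current IPv4 ruleset to <target-file>,
# keeping a timestamped backup of whatever was there before.
save_rules() {
  local target="$1" save="${IPTABLES_SAVE:-iptables-save}" tmp
  if [ -z "$target" ]; then
    echo "usage: save_rules <target-file>" >&2
    return 1
  fi
  tmp="${target}.tmp.$$"
  # Write to a temp file first so a failed save never truncates the target
  "$save" > "$tmp" || { rm -f "$tmp"; return 1; }
  if [ -e "$target" ]; then
    cp -p "$target" "${target}.$(date +%Y%m%d%H%M%S).bak"
  fi
  mv "$tmp" "$target"
}
```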

Restart the appliance and make sure you can now access the VCF Installer web page. I also run the ping test again just to be sure.

Now that I have the VCF Installer installed and working on VLANs, I’m ready to deploy the VCF Offline Depot tool into my environment, and in my next blog post I’ll do just that.

VMware Workstation Gen 9 Part 4 ESX Host Deployment and initial configuration

Now that I have created 3 ESX hosts from templates, it is time to install ESX. To do this, I simply power on the hosts and follow the prompts. The only requirement at this point is that my Windows Server and Core Services are up and functional. In this blog post we’ll complete the installation of ESX.

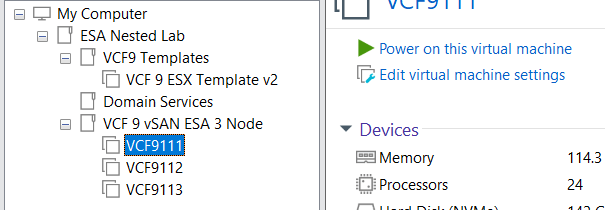

Choose a host then click on “Power on this virtual machine”.

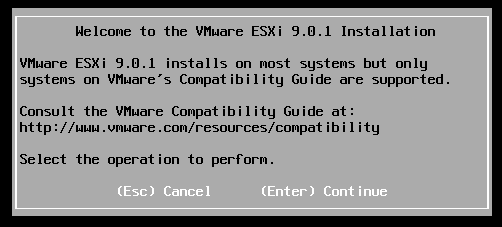

The host should boot to the ESX ISO I chose when I created my template.

Press Enter to continue.

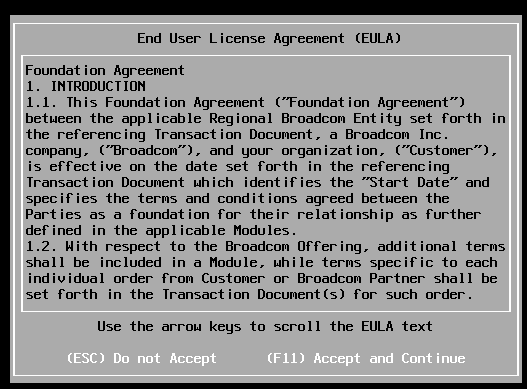

Press F11 to accept and continue.

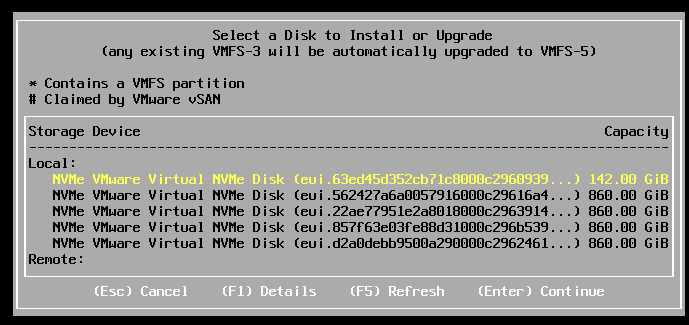

If the correct boot disk is selected, press Enter to continue.

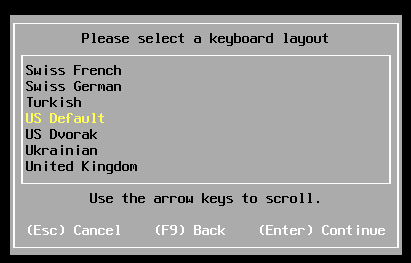

Pressed Enter to accept the default US keyboard layout.

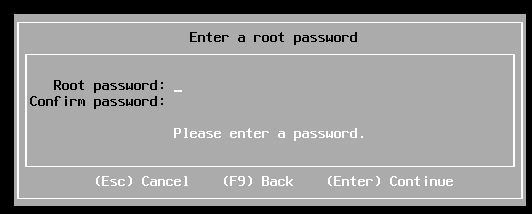

Entered a root password and pressed enter.

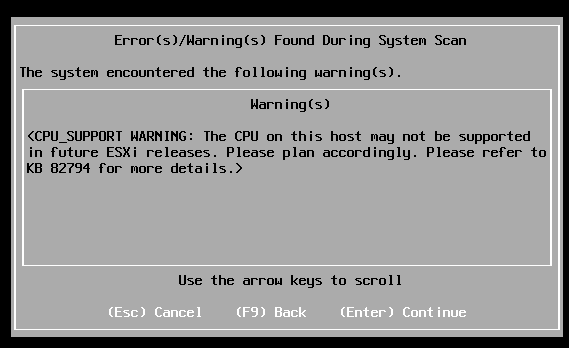

Pressed Enter at the CPU support warning.

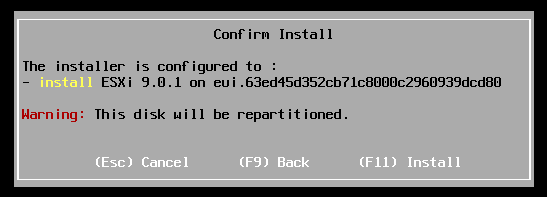

Pressed F11 to install.

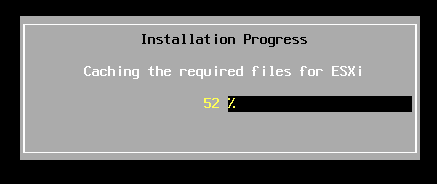

Allowed ESX to install.

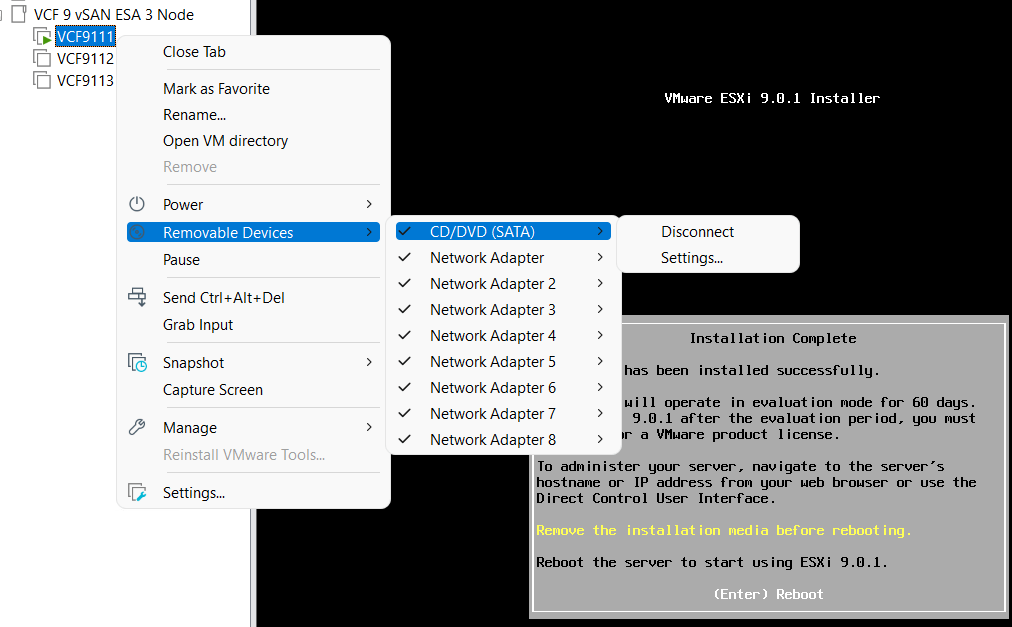

Disconnected the media and pressed Enter to reboot.

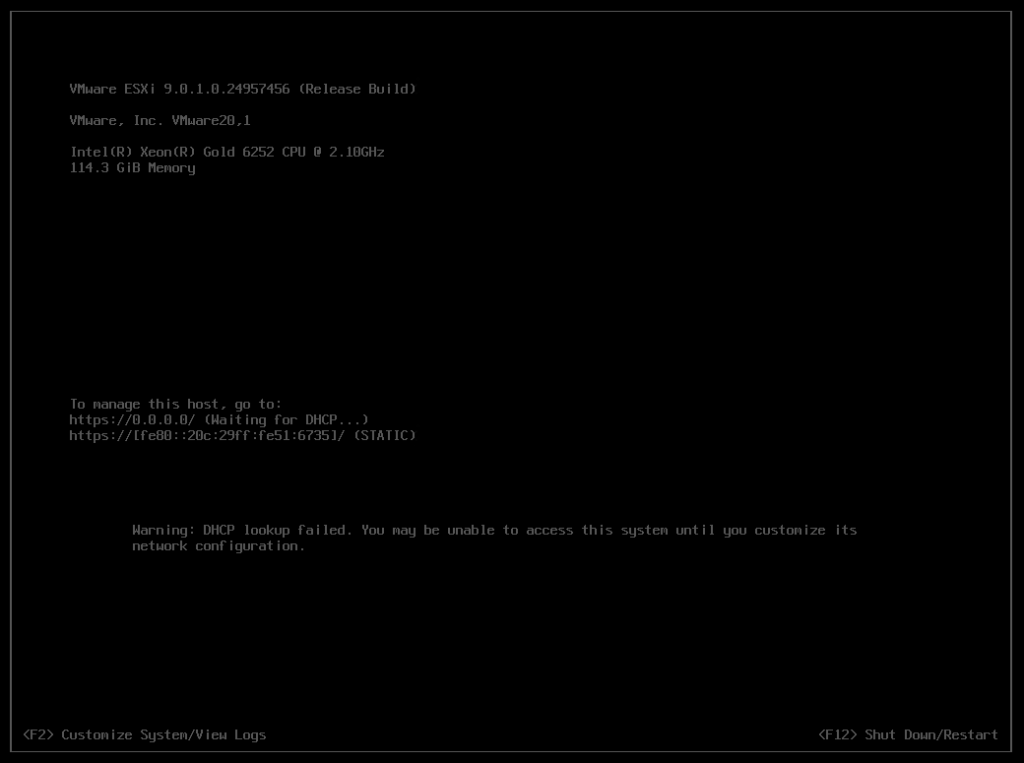

Once rebooted, I pressed F2 to customize the system and logged in with my root password.

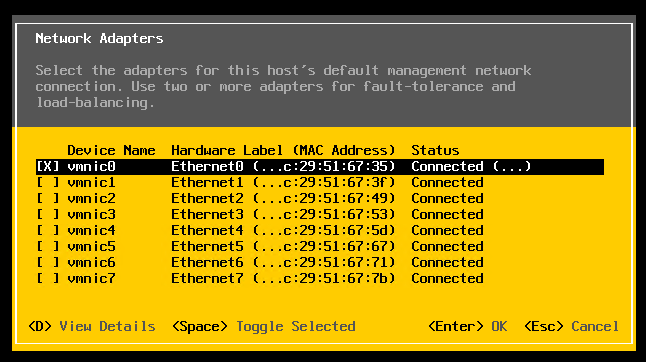

Chose Configure Management Network > Network Adapters, validated that vmnic0 is selected, then pressed Escape.

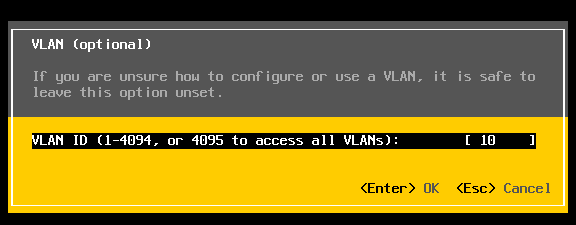

Chose VLAN (optional) > entered 10 for my VLAN > pressed Enter to exit.

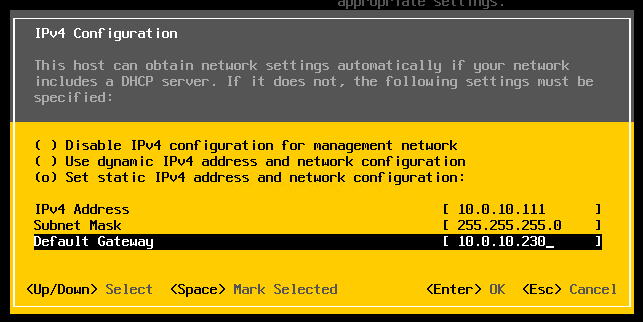

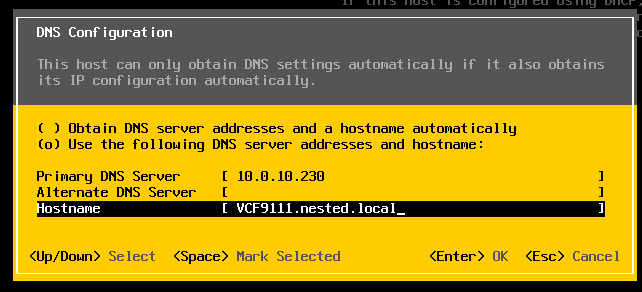

Chose IPv4 Configuration, entered the following for the VCF9111 host, and pressed Enter.

Chose DNS Configuration and entered the following.

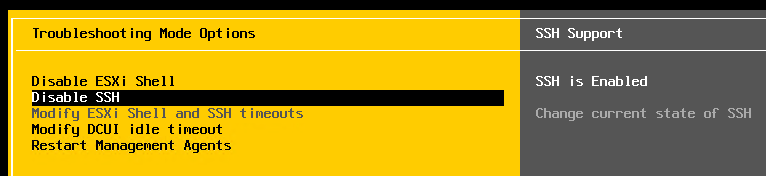

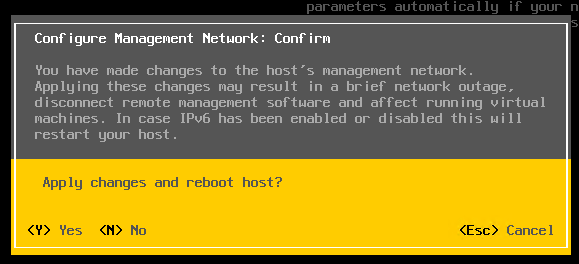

Press Escape to go to the main screen and press Y to restart the management network. Choose Troubleshooting Options, arrow down to “Enable ESXi Shell” and press Enter, then do the same for “Enable SSH”. Both should now be enabled.

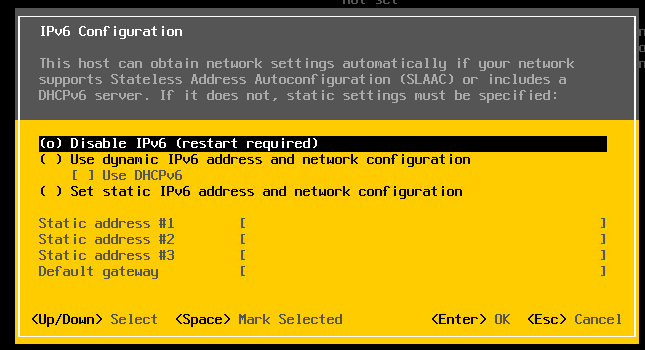

Press Escape and choose Configure Management Network. Next, choose IPv6 Configuration, choose “Disable IPv6”, and press Enter.

Press Escape; when the host prompts you to reboot, press Y.

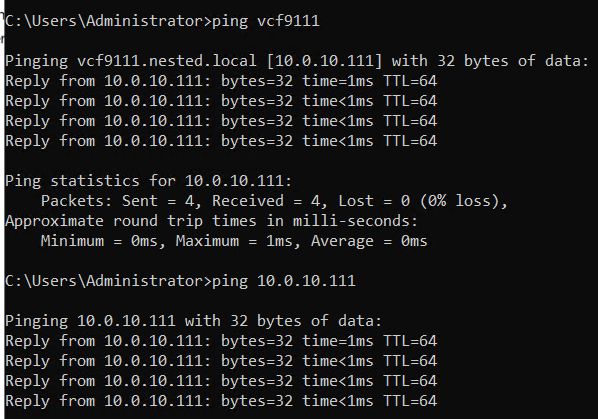
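If you prefer typing commands into the ESXi Shell at the host console over navigating the DCUI, the steps above map roughly onto the `esxcli` and `vim-cmd` commands printed by the hypothetical helper below. This is a sketch, not the method used in this post: it only prints the commands for review. The example values (hostname `vcf9111.nested.local`, IP `10.0.10.111`) are assumptions; the gateway and DNS server reuse 10.0.10.230 from my VCF Installer configuration. The ESXi Shell must already be enabled, and the commands should be run at the console rather than over SSH, since changing vmk0's address drops an SSH session.

```shell
#!/bin/sh
# Hypothetical helper: print esxcli/vim-cmd commands that roughly mirror the
# DCUI steps above (VLAN, static IPv4, DNS, ESXi Shell/SSH, IPv6 off).
# It only prints; nothing is changed on the host.
esx_mgmt_commands() {
  if [ "$#" -ne 7 ]; then
    echo "usage: esx_mgmt_commands <fqdn> <ip> <netmask> <gateway> <dns> <domain> <vlan>" >&2
    return 1
  fi
  local fqdn="$1" ip="$2" netmask="$3" gateway="$4" dns="$5" domain="$6" vlan="$7"
  cat <<EOF
esxcli network vswitch standard portgroup set -p "Management Network" -v ${vlan}
esxcli network ip interface ipv4 set -i vmk0 -t static -I ${ip} -N ${netmask}
esxcli network ip route ipv4 add -n default -g ${gateway}
esxcli network ip dns server add -s ${dns}
esxcli network ip dns search add -d ${domain}
esxcli system hostname set --fqdn=${fqdn}
vim-cmd hostsvc/enable_esx_shell
vim-cmd hostsvc/start_esx_shell
vim-cmd hostsvc/enable_ssh
vim-cmd hostsvc/start_ssh
esxcli network ip set --ipv6-enabled=false
EOF
}
```

Usage: `esx_mgmt_commands vcf9111.nested.local 10.0.10.111 255.255.255.0 10.0.10.230 10.0.10.230 nested.local 10`. As with the DCUI, disabling IPv6 only takes effect after a reboot.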

Test connectivity

From the AD server simply ping the VCF9111 host. This test ensures DNS is working properly and the LAN Segment is passing VLAN10.

From here I repeat this process for the other 2 hosts, assigning each a unique IP address.

Next up: deploying the VCF Installer with VLANs.

VMware Workstation Gen 9: Part 2 Using Workstation Templates

Workstation templates are a quick and easy way to create VMs with common settings. My nested VCF 9 ESX hosts have enough in common that they benefit from template deployments. In this blog post I’ll show you how I use Workstation templates to quickly deploy these hosts and their hardware layout.

My nested ESX hosts have a lot of settings. Between RAM, CPU, disk, and networking, there are tons of clicks per host, which makes the process prone to mistakes. The LAN Segments, as an example, entail 8 clicks per network adapter; with 8 network adapters per host, that’s 192 clicks (8 × 8 × 3) to set up my 3 ESX hosts. Templates cover about 95% of all the settings; the only caveat is the disk deployment. Each host has a unique disk deployment, which I cover below.

There are two things I do before creating my VM templates: 1) set up my VM folder structure, and 2) set up LAN Segments.

VM folder Structure

The 3 x nested ESX hosts in my VCF 9 cluster will be using vSAN ESA. Each nested ESX host will have 5 virtual NVMe disks (a 142GB boot disk and 4 x 860GB disks for vSAN). These virtual NVMe disks will be placed on 2 physical 2TB NVMe disks. At the physical Windows 11 layer, I created folders for the 5 virtual NVMe disks of each host: on physical disk 1, I created BOOT, ESA DISK 1, and ESA DISK 2 folders, and on physical disk 2, I created ESA DISK 3 and ESA DISK 4 folders. I have found this keeps my VMs’ disks more organized and running efficiently. Later in this post we’ll create these disks and place them into their folders.

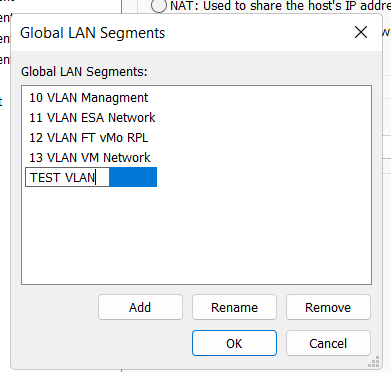
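To see how the folder split distributes space, here is the arithmetic using the sizes above. These are the maximum (provisioned) sizes; Workstation disks that are not pre-allocated only consume physical space as data is written.

```latex
\begin{aligned}
\text{Physical disk 1 (BOOT, ESA DISK 1, ESA DISK 2):}\quad & 142 + 2 \times 860 = 1862\ \text{GB per host} \\
\text{Physical disk 2 (ESA DISK 3, ESA DISK 4):}\quad & 2 \times 860 = 1720\ \text{GB per host} \\
\text{Total per host:}\quad & 1862 + 1720 = 3582\ \text{GB} \\
\text{Three hosts:}\quad & 3 \times 3582 = 10746\ \text{GB}
\end{aligned}
```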

Setup LAN Segments

Prior to creating a Workstation VM template, I need to create my LAN Segments. Workstation LAN Segments allow VLAN traffic to pass, and VLANs are a requirement of VCF 9. Using any Workstation VM, choose a network adapter > LAN Segments > LAN Segments button. The “Global LAN Segments” window appears; click Add, name your LAN Segment, and click OK when you are done.

For my use case I need to make 4 LAN Segments to support the network configuration for my VCF 9 deployment.

Pro-Tip: These are Global LAN Segments, which makes them universally available: once created, every VM can select and use them. Create these first, before you create your ESX VMs or templates.

Create your ESX Workstation Template

To save time and create all my ESX hosts with similar settings I used a Workstation Template.

NOTE: The screenshot to the right shows the final configuration.

1) I created an ESX 9 VM in Workstation:

- Click on File > New Virtual Machine

- Chose Custom

- For Hardware I chose Workstation 25H2

- Chose my Installer disc (iso) for VCF 9

- Chose my directory and gave it a name of VCF9 ESX Template

- Chose 1 Processor with 24 Cores (Matches my underlying hardware)

- 117GB of RAM > Next

- Use NAT on the networking > Next

- Paravirtualized SCSI > Next

- NVMe for the Disk type > Next

- Create a new Virtual Disk > Next

- 142GB for Disk Size > Store as a Single File > Next

- Confirm the correct Directory > Next

- Click on the Customize Hardware button

- Add in 8 NICs > Close

- Make sure Power on this VM after creation is NOT checked > Finish

- Go back into VM Settings and align your network adapters to your LAN Segments:

  - NIC 1 and 2 > 10 VLAN Management

  - NIC 3 and 4 > 11 VLAN ESA Network

  - NIC 5 and 6 > 12 VLAN FT vMo RPL

  - NIC 7 and 8 > 13 VLAN VM Network
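After building the template, one way to double-check a few of these settings is to read them back from the VM's `.vmx` file. This is a minimal sketch, assuming a Bash-compatible shell on the Windows host (Git Bash or WSL, for example) and the usual `.vmx` key names `numvcpus`, `cpuid.coresPerSocket`, `memsize` (in MB), and `ethernetN.present`; the helper names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers: summarize a few template settings from a .vmx file,
# which stores one `key = "value"` pair per line.
vmx_get() {
  # Print the value of key $2 in file $1, with quotes and any trailing CR removed
  awk -F' = ' -v k="$2" '$1 == k { v = $2; gsub(/"/, "", v); sub(/\r$/, "", v); print v; exit }' "$1"
}

vmx_summary() {
  local vmx="$1" mem nics
  mem=$(vmx_get "$vmx" memsize)
  # Count network adapters marked present (case-insensitive TRUE)
  nics=$(grep -ciE '^ethernet[0-9]+\.present = "true"' "$vmx")
  echo "vCPUs: $(vmx_get "$vmx" numvcpus)"
  echo "Cores per socket: $(vmx_get "$vmx" cpuid.coresPerSocket)"
  echo "Memory (GB): $(( ${mem:-0} / 1024 ))"
  echo "Network adapters present: $nics"
}
```

For this template you would expect 24 vCPUs, 24 cores per socket, and 117 GB of memory (117 × 1024 = 119808 MB), with an adapter count that matches your LAN Segment mapping.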

Note: You might have noticed we didn’t add the vSAN disks in this deployment, we’ll create them manually below.

2) Next we’ll turn this VM into a Template

Go to VM Settings > Options > Advanced > check “Use this virtual machine as a linked clone template” and click OK.

Next, take a snapshot of the VM: right-click the VM > Snapshot > Take Snapshot. In the description I entered “Initial hardware configuration.”

Deploy the ESX Template

I’ll need to create 3 ESX hosts based on the ESX template. I’ll use my template to create these VMs, and then I’ll add their unique hard drives.

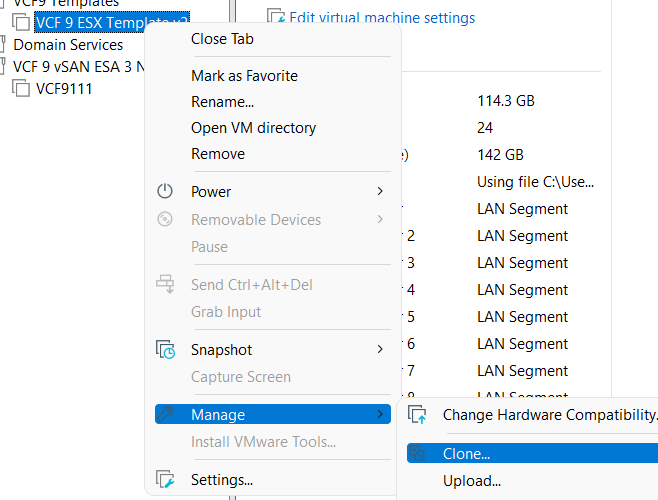

Right click on the ESX Template > Manage > Clone

Click Next > Choose “The current state of the VM” > Choose “Create a full clone”

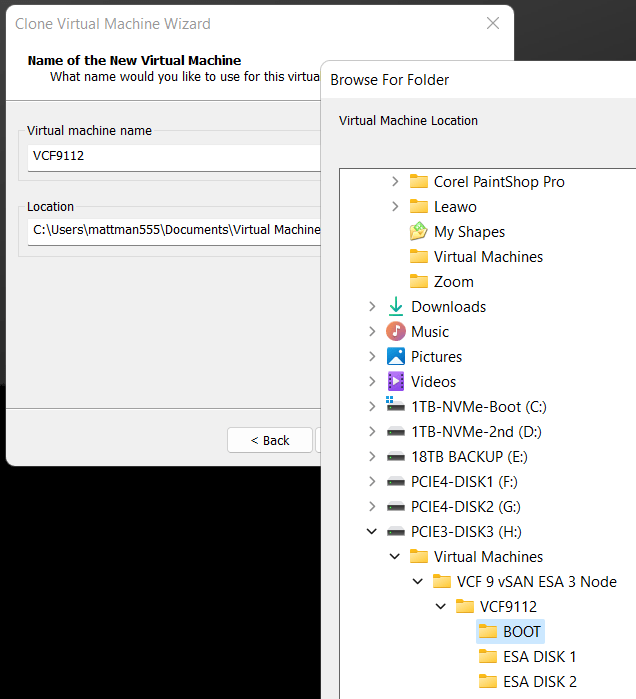

Input a name for the VM

MOST IMPORTANT – Make sure you select the correct disk and folder for the boot disk. In Fig-1 below, I’m deploying my second ESX host’s boot disk, so I chose its BOOT folder.

Click on finish > The VM is created > click on close

(Fig-1)
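The clone steps can also be scripted with `vmrun`, which ships with Workstation. This is a sketch under assumptions: the `<root>/<host>/BOOT/<host>.vmx` folder layout, the template path, and the host name VCF9113 are hypothetical, and it assumes a Bash-compatible shell on the Windows host that passes paths vmrun accepts. It clones the template's current state (no `-snapshot` option), mirroring the choice in the GUI steps; the key point still applies: each clone's `.vmx`, and therefore its boot disk, must land in that host's BOOT folder. `VMRUN` is a hypothetical override for dry runs.

```shell
#!/bin/sh
# Hypothetical helper: full-clone the template once per host with vmrun,
# putting each clone's .vmx (and so its boot disk) in <boot_root>/<host>/BOOT.
clone_hosts() {
  if [ "$#" -lt 3 ]; then
    echo "usage: clone_hosts <template.vmx> <boot_root> <host>..." >&2
    return 1
  fi
  local template="$1" boot_root="$2" vmrun="${VMRUN:-vmrun}" host dest
  shift 2
  for host in "$@"; do
    dest="$boot_root/$host/BOOT"
    mkdir -p "$dest" || return 1
    # "full" = independent full clone; -cloneName sets the VM's display name
    "$vmrun" -T ws clone "$template" "$dest/$host.vmx" full "-cloneName=$host" || return 1
  done
}
```

Usage (paths hypothetical): `clone_hosts "D:/VMs/VCF9 ESX Template/VCF9 ESX Template.vmx" "D:/VMs" VCF9111 VCF9112 VCF9113`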

Adding the vSAN Disks

Since we are using unique vSAN disk folders and locations we need to add our disks manually.

For each nested ESX host, I right-click the VM > Settings

Click Add > choose Hard Disk > Next > NVMe > Create a new virtual disk

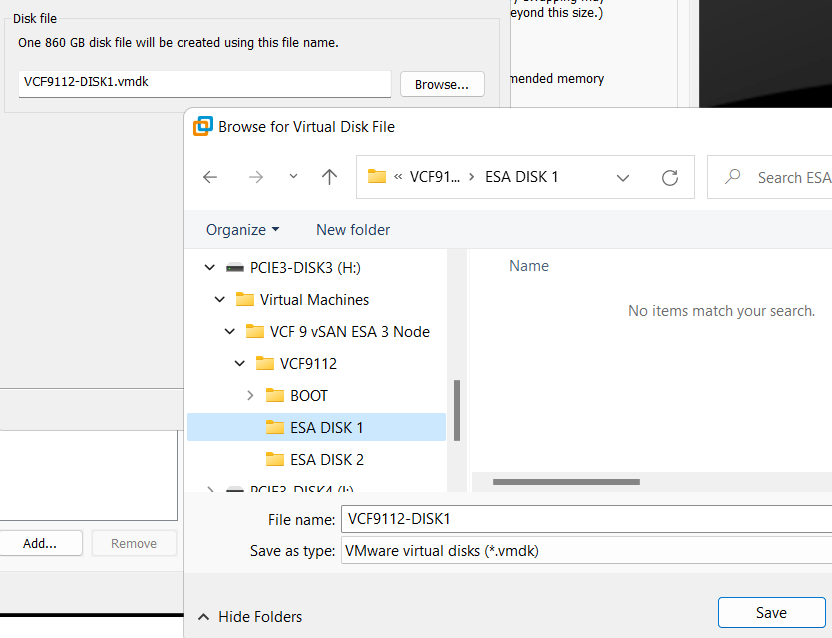

Type in the size (860GB) > Store as a single file > Next

Rename the disk filename to reflect the nested vSAN ESA disk number

Choose the correct folder > Save

Repeat for the next 3 disks, placing each one in the correct folder

When I’m done, I have created 4 x 860GB disks for each host, all stored as single files, each in its own folder on its designated physical disk.

(Fig-2, below) I’m creating the first vSAN ESA disk named VCF9112-DISK1.vmdk
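Because each host's four vSAN disks are spread across two physical disks, it can help to print the intended file paths before clicking through the dialogs. This is a minimal sketch, assuming a `<root>/<host>/ESA DISK <n>/` folder layout (hypothetical) and the `<host>-DISK<n>.vmdk` naming used for VCF9112-DISK1.vmdk above; `esa_disk_path` and `esa_disk_plan` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: print where a host's vSAN ESA disk should live.
# ESA DISK 1-2 go on physical disk 1 (root1), ESA DISK 3-4 on physical disk 2 (root2).
esa_disk_path() {
  local root1="$1" root2="$2" host="$3" n="$4" root
  case "$n" in
    1|2) root="$root1" ;;
    3|4) root="$root2" ;;
    *) echo "disk number must be 1-4: $n" >&2; return 1 ;;
  esac
  printf '%s/%s/ESA DISK %s/%s-DISK%s.vmdk\n' "$root" "$host" "$n" "$host" "$n"
}

# Print the full plan (4 disks per host) for every host given
esa_disk_plan() {
  local root1="$1" root2="$2" host n
  shift 2
  for host in "$@"; do
    for n in 1 2 3 4; do
      esa_disk_path "$root1" "$root2" "$host" "$n"
    done
  done
}
```

Usage (roots hypothetical): `esa_disk_plan D:/VMs E:/VMs VCF9111 VCF9112 VCF9113` prints the 12 planned disk paths.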

That’s it!

Workstation Templates save me a bunch of time when creating these 3 ESX Hosts. Next we’ll cover Windows Core Services and Routing.

VMware Workstation Gen 9: Part 1 Goals, Requirements, and a bit of planning

It’s time to build my VMware Workstation-based home lab with VCF 9. In a recent blog post, I documented my upgrade journey from VMware Workstation 17 to 25H2. In this installment, we’ll go deeper into the goals, requirements, and overall planning for this new environment. As you read through this series, you may notice that I refer to VCF 9.0.1 simply as VCF 9 or VCF for brevity.

Important Notes:

- VMware Workstation Gen 9 series is still a work in progress. Some aspects of the design and deployment may change as the lab evolves, so readers should consider this a living build. I recommend waiting until the series is complete before attempting to replicate the environment in your own lab.

- There are some parts of this series where I am unable to assist users. In lieu of direct assistance, I provide resources and advice to help users through these areas: the VCF Offline Depot and licensing your environment. As Broadcom/VMware employees, we are not granted the same access as users. I have an internal process to access resources, and that process would not be helpful to users.

Overall Goals

- Build a nested minimal VCF 9.0.1 environment based on VMware Workstation 25H2 running on Windows 11 Pro.

- Both Workload and Management Domains will run on the same set of nested ESX Hosts.

- Using the VCF Installer I’ll initially deploy the VCF 9 Management Domain Components as a Simple Model.

- Initial components include: VCSA, VCF Operations, VCF Collector, NSX Manager, Fleet Manager, and SDDC Manager all running on the 3 x Nested ESX Hosts.

- Workstation Nested VMs are:

  - 3 x ESX 9.0.1 Hosts

  - 1 x VCF Installer

  - 1 x VCF Offline Depot Appliance

  - 1 x Windows 2022 Server (Core Services)

- Core Services supplied via Windows Server: AD, DNS, NTP, RAS, and DHCP.

- Networking: Private to Workstation, support VLANs, and support MTU of 9000. Routing and internet access supplied by the Windows Server VM.

- Should be able to run minimal workload VMs on the nested ESX hosts.

Hardware BOM

If you are interested in the hardware I’m running to create this environment please see my Build of Materials (BOM) page.

Additionally, check out the FAQ page for more information.

Deployment Items

To deploy the VCF Simple model I’ll need to make sure I have my ESX 9.0.1 Hosts configured properly. With a simple deployment we’ll deploy the 7 required appliances running on the Nested ESX hosts. Additionally, directly on Workstation we’ll be running the AD server, VCF Offline Depot tool, and the VCF Installer appliance.

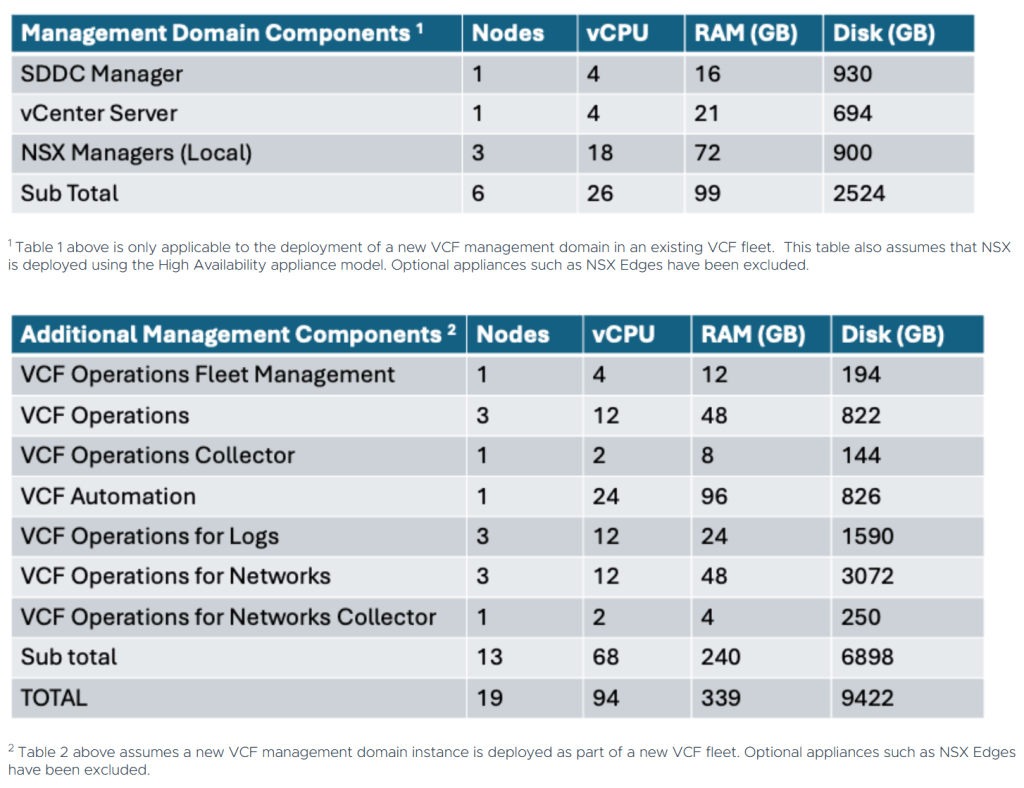

Using the chart below, I can get an idea of how many cores and how much RAM and disk will be needed. The one item that stands out to me is the component with the highest core count: VCF Automation at 24 cores. This is important, as I’ll need to make sure my nested ESX servers match or exceed 24 cores; if not, VCF Automation will not be able to deploy. Additionally, I’ll need to make sure I have enough RAM and disk space left for workload VMs.

Workstation Items

My overall plan is to build out a Windows Server, 3 x ESX 9 hosts, VCF Installer, and the VCF Depot Appliance. Each one of these will be deployed directly onto Workstation. Once the VCF Installer is deployed it will take care of deploying and setting up the necessary VMs.

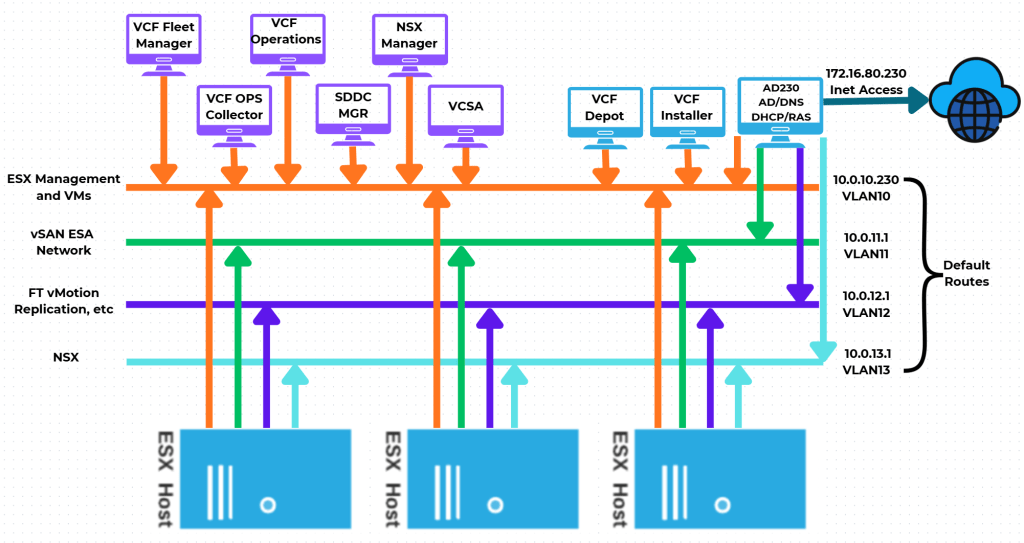

NOTE: In the network layout below, hosts that are blue in color are running directly on Workstation, and those in purple will be running on the nested ESX hosts.

Network Layout

One of the main network requirements for VCF is supporting VLAN networks. My Gen8 Workstation deployment did not use VLAN networks. Workstation can pass tagged VLAN packets via LAN Segments. LAN Segments are configured in each VM’s Workstation settings, not via the Virtual Network Editor. We’ll cover their creation soon.

In the next part of this series I’ll show how I used Workstation Templates to create my VMs and align them to the underlying hardware.

Resources: