4 Books in 4 Weeks – Book 1 Storage Area Networks for Dummies

In 2004 I bought the 1st Edition of Storage Area Networks for Dummies (now in its 2nd Edition) and read 100 pages or so. Life got in the way, and now, in 2011, I’ve decided to finish it. I know what you’re thinking: “Why read a Dummies book?” Back in the day this book had the information I was looking for, and it helped me on my first virtualization design. Today some of the information is outdated; however, it still covers a lot of the SAN fundamentals and concepts behind today’s SANs.

An Easy Read and my own personal Dummies Tip…

Going through the book I kept in mind that this is a Dummies book and it’s meant to be an easy read. It was an easy read and a great refresher on SAN technologies. There was quite a bit of outdated material (I expected this), and it was interesting to see how technology has progressed since 2003. Example – in 2003 FCoIP was referred to as FCIP or iFCP. The book is laid out in 5 parts ranging from SAN 101 through Management & Configuration. One thing I dislike about technical books is when an author spells out an acronym once, never returns to it, and then uses the acronym over and over. I couldn’t tell you how many times I’ve had to go back to find out the meaning of an acronym. This book overcomes this issue, but it does it so thoroughly that the reminders become unnecessary. For example, they mention FC-AL (see Cliff Notes below), and talk about how FC-AL (for more information about Fibre Channel Arbitrated Loop, please see my cliff notes under the section “Some General Cliff Notes” below) is an old technology. Only a fool would use FC-AL (Tip: if you’d like to know more about FC-AL see my cliff notes below). Hopefully you get my point. It was nice to have the reference, but mentioning it too often (it seemed like 40 times) got a bit annoying. Here’s a Dummies Tip: Authors reading this blog, please find a happy medium, do it, and don’t blame your editor for taking it out. I mean, after all, it’s your book, right?

Something Unexpected…

While I was reading the book, several vendors presented their products at my current employer. They mentioned a lot of the terms I list below. So yes, this book still has value, and for a person who wants to learn the basics I would recommend they read the 2nd edition and then move on to “higher education”. I noted lots of errors in the book, especially around the math or what appeared to be simple cut/paste issues. I did go to the www.dummies.com site to see if they had published corrections, but I was unable to find any.

Some General Cliff Notes…

Fibre Channel protocol is spelled fibRE not Fiber – http://en.wikipedia.org/wiki/Fibre_Channel << My spell checker really hates fibre :)

Single Mode Fiber Cable – Smaller diameter means a more direct path for the beam, usually yellow, for long distances (up to 10 km), usually uses a higher powered laser

Multi Mode Fiber Cable – Larger diameter means a less direct path for the beam, usually orange, for shorter distances (<500 m, normally 10 to 20 m), can use an LED or vertical-cavity surface-emitting lasers (VCSELs)

Common fiber connectors – LC – Most common, SC – Older Larger connector, ST – Older BNC Twist on style http://en.wikipedia.org/wiki/Fiber_connector

FC-AL – Fibre Channel Arbitrated Loop protocol, used with a SAN hub. RARE, replaced by SAN switches. All devices share the loop’s bandwidth, so no single device can exceed the loop’s max speed, and the more devices the more congestion occurs. MAX of 127 ports per loop; a common use might be SAN-based tape.

FC-SW – Fibre Channel Switched protocol, used with a SAN switch; more efficient than hubs, devices can cross-communicate with each other, 1000’s of devices can be connected, and each device is assigned a WWN (World Wide Name)

Modular class SAN – Uses controllers which are separate from the disk shelves

Monolithic class SAN – Uses disks that are assembled inside the array frame; these disks are connected to many internal controllers through lots of cache memory

Storage Bus Architecture Array – Only one thing can happen on the array at a time (like a hub)

Storage Switch Architecture Array – Multiple things can be going on at the same time with less of an impact on I/O performance.

LUN – Logical Unit Number; usually represents a RAID set, presenting all the smaller physical drives as one logical disk to your server.

RAID – Depending on who you ask, it can mean Redundant Array of Inexpensive Disks or Redundant Array of Independent Disks. Funny thing: the book mentions RAID 4 is no longer in use. Au contraire, NetApp uses it!

LUNs – Logical Unit Numbers represent the storage space formed by a RAID set. A LUN may contain part of that space or all of it.

Fiber Optic Cable – When fiber optic cables are used within a storage network they are referred to as Fibre Channel cables, spelled “fibre”. This helps to distinguish them from other fiber-based cabling, such as telecommunications.

ISL (Inter-switch link) – the term used to describe the connection between two switches in a fabric

Fabric Protocol – A SAN fabric may include Routing and conversation between switches, Listing Services, and Security

WWN (World Wide Name) – Devices in the SAN fabric are addressed by their World Wide Name. A WWN consists of 16 hex digits, which make up a 64-bit address.
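To make the "16 hex digits = 64 bits" arithmetic concrete, here is a minimal Python sketch. The function names and the example address are made up for illustration, and the colon-separated notation is just one common way WWNs get written down.

```python
import re

def parse_wwn(text: str) -> int:
    """Convert a WWN written as 16 hex digits (optionally colon-separated) to an integer."""
    digits = text.replace(":", "")
    if not re.fullmatch(r"[0-9A-Fa-f]{16}", digits):
        raise ValueError(f"expected 16 hex digits, got {text!r}")
    return int(digits, 16)

def format_wwn(value: int) -> str:
    """Render a 64-bit integer as eight colon-separated bytes."""
    if not 0 <= value < 2**64:
        raise ValueError("a WWN must fit in 64 bits")
    raw = f"{value:016x}"
    return ":".join(raw[i:i + 2] for i in range(0, 16, 2))

# Hypothetical address, not a real device
example = "50:06:01:60:3b:a0:12:34"
wwn = parse_wwn(example)
print(wwn.bit_length() <= 64)  # each hex digit carries 4 bits, so 16 digits = 64 bits
print(format_wwn(wwn))
```

Storing the WWN as an integer keeps comparisons simple, while the formatter gives back the human-readable form.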

Three Layers to a SAN Design AKA the Basic SAN topology – Host, SAN, and Storage

DAS – Direct Attached Storage AKA Local Host Storage

Point to Point – Host to disk Storage via a Fibre Cable (Require dedicated Storage Ports)

Arbitrated Loop Topology – Most likely you can find these devices on ebay, they might even pay you to take them off their hands. Basic designs around FC-AL hubs are cascading, fault-tolerant loops and your basic hub loops.

Switch Fabric Topology – Most prevalent for today’s fibre networks. Switch types include smaller modular (usually single failure) and larger director class (very redundant) switches.

Basic Switch Fabric Topologies – Dual Switch, Loop of Switches, Meshed Fabric, Star, Core-Edge

Zoning – A method used to segregate or separate devices connected to a switch fabric via switch-based security. A zone is in many ways similar to a VLAN on an IP switch, and zones can span multiple switches. Zoning is typically used to separate storage used by different operating systems. If a Windows server could see all the storage, it might write a signature to it; if that space belonged to a UNIX server, this could make it unusable. Other uses could be zoning storage by QA, Test, Dev, and Production networks. Zoning comes in two forms – Soft (by WWN) or Hard (by physical switch port).
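As a rough illustration of soft zoning, the sketch below models zones as sets of WWNs and checks whether two devices share a zone. The zone names, WWNs, and the `can_communicate` helper are all hypothetical; real switches enforce this in the fabric, not in a script.

```python
from typing import Dict, Set

# Hypothetical zone set: zone name -> member WWNs (soft zoning)
ZONES: Dict[str, Set[str]] = {
    "windows_prod": {"10:00:00:00:c9:00:00:01", "50:06:01:60:00:00:00:0a"},
    "unix_prod": {"10:00:00:00:c9:00:00:02", "50:06:01:60:00:00:00:0b"},
}

def can_communicate(wwn_a: str, wwn_b: str, zones: Dict[str, Set[str]]) -> bool:
    """Two devices may talk only if at least one zone contains both of them."""
    return any(wwn_a in members and wwn_b in members for members in zones.values())

windows_hba = "10:00:00:00:c9:00:00:01"
windows_storage_port = "50:06:01:60:00:00:00:0a"
unix_storage_port = "50:06:01:60:00:00:00:0b"

print(can_communicate(windows_hba, windows_storage_port, ZONES))  # both sit in windows_prod
print(can_communicate(windows_hba, unix_storage_port, ZONES))     # no shared zone
```

With this layout the Windows host never sees the UNIX storage port, which is the signature-writing scenario zoning is meant to prevent.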

Quick Summary…

It’s a good starting place for those interested in SAN technologies. This book still has some value in today’s world, BUT if you choose it then I suggest you read the latest edition…

Thanks for reading my post… I’m off to read my 2nd book of 4: VMware ESX and ESXi in the Enterprise, 2nd Edition, by Edward Haletky

GNS3 Network IOS and more Emulator

Thank you to my friend Teed for the tip below on this great little program… VERY Cool!

This website looked cool so I bookmarked it a couple months ago. I haven’t gone through the website much yet, but some of the pages helped me with some AAA config on a switch a while back. I liked the sound of (FREE) and 100 hands-on labs etc. using their GNS3 config.

http://www.freeccnaworkbook.com/

Blurb:

The Free CCNA Workbook provides over 100 hands on labs utilizing GNS3 (Graphic Network Simulator v3) to provide you a realistic hands on CCNA training experience at configuring Cisco devices. GNS3 in and of its self is a GUI for the core application, known as dynamips. Dynamips in a nut shell is a Cisco Router hardware emulator that executes REAL Cisco IOS images and allows you to virtualize multiple routers on a single desktop for educational purposes. By utilizing GNS3 and the free CCNA labs provided to you absolutely free at Free CCNA workbook dot com; you can prepare you for the CCNA certification exam without investing any of your hard earned money into Cisco hardware.

Blurb:

What is GNS3 ?

GNS3 is a graphical network simulator that allows simulation of complex networks.

To allow complete simulations, GNS3 is strongly linked with:

- Dynamips, the core program that allows Cisco IOS emulation.

- Dynagen, a text-based front-end for Dynamips.

- Qemu, a generic and open source machine emulator and virtualizer.

GNS3 is an excellent complementary tool to real labs for network engineers, administrators and people wanting to pass certifications such as CCNA, CCNP, CCIP, CCIE, JNCIA, JNCIS, JNCIE.

It can also be used to experiment features of Cisco IOS, Juniper JunOS or to check configurations that need to be deployed later on real routers.

This project is an open source, a free program that may be used on multiple operating systems, including Windows, Linux, and MacOS X.

Features overview

- The design of high quality and complex network topologies.

- Emulation of many Cisco IOS router platforms, IPS, PIX and ASA firewalls, JunOS.

- Simulation of simple Ethernet, ATM and Frame Relay switches.

- The connection of the simulated network to the real world!

- Packet capture using Wireshark.

Important notice: users have to provide their own IOS/IPS/PIX/ASA/JunOS to use with GNS3

4 Books in 4 Weeks

Over the next month or so I plan to read 4 technical books and write up a review / cliff notes. I chose the books below based on current needs as a VMware Senior Engineer. However, one was chosen as a personal goal (okay, it’s unfinished business). Another reason for reading (besides the obvious – staying current) is that the training budget over the past years has been non-existent, and I felt it was time for me to move my education forward by reading up.

Here are the FANTASTIC 4 –

Book 1 Storage Area Networks for Dummies (2003) 380 pages by Christopher Poelker and Alex Nikitin (both of Hitachi Data Systems) – Okay, this is the unfinished business I was talking about. Back in 2004 I got my first VMware position. I was asked to help re-design and deploy a failing nationwide project based on VMware 2.5. Back then we were using a pair of DL380s with 8GB of RAM connected to an EMC SAN. I had a pretty good idea about SANs but I needed a general guide, hence this book. I read the first hundred pages and fell off track. However, the project went on and was a big success.

Book 2 VMware ESX and ESXi in the Enterprise 2nd Edition 541 pages by Edward Haletky – I read VMware ESX Server (3.X) in the Enterprise in late 2007 and I must say it was one of the better books of its time. This is why I chose to read the updated version covering ESXi and vSphere.

Book 3 VMware vSphere 4.1 HA and DRS Technical Deepdive 215 Pages by Duncan Epping and Frank Denneman – If you are in certain VMware social circles (Twitter) you know this is one of the “must” read books of its time. Though they are currently writing an update to it, I felt it best to read the original. My hope is to get a better understanding of HA and DRS for some upcoming challenges I’m facing at work.

Book 4 VMware vSphere Design 340 Pages by Forbes Guthrie, Maish Saidel-Keesing and Scott Lowe – Of these authors I have met fellow vExpert Scott Lowe and I must say he’s the nicest person you could ever meet. Not only does he author many books, work a full-time job, and donate his time/talents to VMUGs, but he’s also a father too. By reading this book I hope to gain a better understanding of design processes around vSphere.

So those are the 4 books I plan to read…. Wish me luck, I’m off to start reading Book 1 Storage Area Networks for Dummies!

The future is only ESXi

Here is a great document that I found on ESXi, good reference…

http://communities.vmware.com/docs/DOC-11113

ESXi vs Full ESX VERSION 14  Created on: Oct 31, 2009 3:07 AM by AndreTheGiant – Last Modified: Jul 25, 2010 12:50 AM by AndreTheGiant

The future is only ESXi

With the new release of vSphere 4.1 there are some changes and news:

- the terminology has changed and ESXi is now called the ESXi Hypervisor Architecture

- the “old” ESX (or full ESX, legacy ESX, or ESX with the service console) is available in this release for the last time; from the next version ONLY ESXi will be available.

VMware suggests migrating to ESXi, the preferred hypervisor architecture from VMware. ESXi is recommended for all deployments of the new vSphere 4.1 release. For planning purposes, it is important to know that vSphere 4.1 is the last vSphere release to support both ESX and ESXi hypervisor architectures; all future releases of vSphere will only support the ESXi architecture.

On how to migrate see: http://www.vmware.com/products/vsphere/esxi-and-esx/

–

ESX vs ESXi

ESX and ESXi official comparison:

http://kb.vmware.com/kb/1006543 – VMware ESX and ESXi 3.5 Comparison

http://kb.vmware.com/kb/1015000 – VMware ESX and ESXi 4.0 Comparison

Probably the big difference is that ESXi has a POSIX Management Appliance that runs within the vmkernel and ESX has a GNU/Linux Management Appliance that runs within a VM.

ESX vs. ESXi which is better?

http://communities.vmware.com/blogs/vmmeup/2009/04/07/esx-vs-esxi-which-is-better

Dilemma: buy vSphere with ESXi4 or with ESX4?

http://www.vknowledge.nl/2009/01/15/to-esxi-or-not-to-esxi-thats-the-question/

ESXi vs. ESX: A comparison of features

http://blogs.vmware.com/esxi/2009/06/esxi-vs-esx-a-comparison-of-features.html

Note that the HCL can differ between ESX and ESXi:

ESX vs ESXi on the HCL

–

ESXi limitations

- No official interactive console (there is only an “unsupported” hidden console: http://www.virtualizationadmin.com/articles-tutorials/vmware-esx-articles/general/how-to-access-the-vmware-esxi-hidden-console.html) – With 4.1, Tech Support Mode is now fully supported

- ESXi 4.0 has no officially supported SSH access (there is only an “unsupported” access: http://www.yellow-bricks.com/2008/08/10/howto-esxi-and-ssh/) – Changed in 4.1, where SSH is a service

- No /etc/ssh/sshd_config file for SSH non-root access (but it is still possible: http://www.yellow-bricks.com/2008/08/14/esxi-ssh-and-non-root-users/)

- No support for some third-party backup programs (see also Backup solutions for VMware ESXi) – Resolved with the latest versions of those programs

- No support for some third-party programs

- ESXi 4.0 doesn’t have Active Directory authentication for “local” users – Implemented in 4.1

- No full crontab for scheduling jobs and scripts locally (but there is a root crontab in /var/spool/cron/crontabs/root)

- No hot-add feature for virtual disks in ESXi 4.0, only in ESXi 3.5 (or in ESXi Advanced with a hot-add license) – Resolved with the first updates

- No SNMP “get” polling

- No virtual Serial or Parallel port

- No USB disk in the “console”

- No support for mount ext2/ext3/ntfs/cifs filesystem in the console

- No fully functional esxtop

- No fully functional esxcfg-mpath

- No vscsiStats tool

- ESXi 4.0 has only experimental support for boot from SAN – Resolved with 4.1

- ESXi 4.0 has limited PXE install or scriptable installation (but seems that there is a solution: http://www.vmware.com/pdf/vsp_4_pxe_boot_esxi.pdf and http://www.mikedipetrillo.com/mikedvirtualization/2008/11/howto-pxe-boot-esxi.html) – Resolved with ESXi 4.1

- Normally with a standalone ESXi host you’ll get 1 hour of performance data, with the graph refreshing every 20 seconds (you can also extend the performance data to 36 hours with a simple hack – http://www.vm-help.com/esx/esx3i/extending_performance_data.php)
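To put the performance-data bullet above in numbers, here is a small Python sketch of how many data points each retention window implies. It assumes one sample per 20-second interval, which is my reading of the bullet rather than a documented figure, and `samples_retained` is a made-up helper name.

```python
def samples_retained(window_hours: int, interval_seconds: int = 20) -> int:
    """Number of data points kept for a retention window at a fixed sample interval."""
    return window_hours * 3600 // interval_seconds

print(samples_retained(1))   # default one-hour window
print(samples_retained(36))  # extended 36-hour window
```

Under that assumption the default window holds 180 samples, and the extended window holds 36 times as many.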

–

Free ESXi (or VMware vSphere Hypervisor 4.1) limitations

- ESXi 4.x free cannot be connected to a vCenter Server (it requires a vCenter agent license)

- VCB does not work (it requires a VCB license)

- A lot of third-party backup programs do not work with free ESXi (see also: Backup solutions for VMware ESXi)

- RCLI and VMware Infrastructure toolkit are limited to read-only access for ESXi free

- Free ESXi does not support SNMP

- Free ESXi does not support Active Directory integration at this time

- Free ESXi does not support Jumbo Frame – http://kb.vmware.com/kb/1012454

- Free ESXi EULA has some interesting restrictions including enforced read only mode for v4 and later versions of v3.5.

See also: What’s the difference between free ESXi and licensed ESXi?

VMware ESX/ESXi EULA – http://www.vmware.com/download/eula/esx_esxi_eula.html

Re: EULA restrictions for free ESXi 4

–

ESXi advantages

- Fast to install / reinstall

- Can be installed on a SD flash card or USB key (there is also an embedded version that is pre-installed) – ESXi installation – Flash memory vs Hard disk

- Easy to configure (there is a simple configuration menu)

- Small footprint = fast and easy to patch + (maybe) more secure

- Extremely thin = fast installation + faster boot

- Does not use a vmdk for console filesystems (as ESX 4.0 does)

- There is a tool to dump the ESXi configuration

- Nearly “plug and play” (for example with the embedded version and the Host Profile feature)

VM Check Alignment Tool

**Update 09/2016: This 2011 version of the alignment tool wasn’t quite accurate. It was based solely on sector alignment for EMC storage, and I believe the author made several adjustments based on user feedback and comments. The current version seems to do a better job, but you don’t need any tools to check alignment; just read this blog I wrote, as it’s so simple to check.**

Original Post below —

A work colleague led me to this program the other day.

This tool couldn’t be simpler to use. Download the EXE, place it on your VM, and click Check Alignment.

It’s a real quick way to ensure you’re aligned…

TIP: Don’t assume Windows 2008 is aligned out of the box, as you can see here this VM needs to be aligned… It’s out of alignment because of the deployment/unified server image process used to push the OS to the VM. READ this blog for more information

2008 R2

2003

Unfortunately, this tool is no longer available for download.
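For reference, the basic idea behind an alignment check can be sketched in a few lines of Python. This is not the tool's actual logic; it simply assumes you already know the partition's starting offset in bytes (on Windows, `wmic partition get Name, StartingOffset` is one way to read it) and that the boundary your storage cares about is 4 KB or 64 KB, which varies by array.

```python
def is_aligned(starting_offset_bytes: int, boundary_bytes: int = 4096) -> bool:
    """A partition is aligned when its starting offset is a whole multiple of the boundary."""
    if boundary_bytes <= 0:
        raise ValueError("boundary must be positive")
    return starting_offset_bytes % boundary_bytes == 0

# Two offsets commonly seen in practice
legacy_offset = 63 * 512     # 32,256 bytes: the classic 63-sector start (e.g. Windows 2003)
modern_offset = 1024 * 1024  # 1,048,576 bytes: the 1 MB start of a fresh Windows 2008 install

for label, offset in [("legacy", legacy_offset), ("modern", modern_offset)]:
    print(label, offset, is_aligned(offset), is_aligned(offset, 64 * 1024))
```

An image-based deployment can carry the legacy offset onto a newer OS, which is why the starting offset is worth checking rather than assuming.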

Staying Current with VMware Technology

One of the common questions I’m asked is: how do I stay current with virtualization technology?

The answer is: staying current takes time and work. Here are my tips for staying current…

1. Join your Local User Group, show up, and get to know “Who are the People in your Neighborhood”

- In Phoenix we have one of the best VMware User Groups in the world. 800+ strong, and members come from all over the state

- There are MANY vendors from all types of technologies (Storage, Backups, Networking, Server hardware, etc)

- Users and vendors alike present information in a technical fashion

- The Phoenix VMUG regional meeting draws about 300 attendees from all types of industries

- Use these meetings to find out Who’s Who in the virtualization community and then start asking LOTS of questions

2. Blog Sites – Read these sites frequently…

- Duncan Epping’s Blog – Yellow Bricks – http://www.yellow-bricks.com/ << TOP Rated VMware Blog

- Vaughan Stewart – The Virtual Storage Guy – http://blogs.netapp.com/virtualstorageguy/ << Mr. VMware for NetApp

- Chad Sakac’s Blog – Virtual Geek – http://virtualgeek.typepad.com/virtual_geek/ <<< Mr. VMware for EMC (Has an army called Chad Army)

- Gabe Van Zanten’s Blog – Gabe’s Virtual World – http://www.gabesvirtualworld.com/

- Mike Laverick’s Blog – RTFM Ed. – http://www.rtfm-ed.co.uk/

- Scott Lowe’s Blog – ScottLowe.org – http://blog.scottlowe.org/ << Great author of many books and an EMC vSpecialist

- Jason Boche – VMware Virtualization Evangelist – http://www.boche.net/blog/ << Old VMUG Minneapolis Leader, now works for Dell

- Local guy(Arizona) – Adam Baum – http://itvirtuality.wordpress.com/ <<< works for VCE and knows Cisco UCS

3. RSS Feeds

- Setup RSS Feeds to your Favorite Blogs sites – AND read them – Put your Smart Phone to use…

- VMware has done a great job with Planetv12n. It is a blog consolidation of top bloggers

4. PodCasts

- John Troyer with VMware has done a great job putting together a weekly podcast around VMware based topics — http://www.talkshoe.com/talkshoe/web/talkCast.jsp?masterId=19367

- One thing I like about TalkShoe is all the previous pod casts are there for you to review.

5. LinkedIN

- Join a group, build your network, and start communicating

- This is a great way to track those people you meet

6. Twitter

- Twitter Really? Yes Really…. Companies like VMware use twitter to get information out to customers

- Find your favorite companies, find out their twitter feeds and follow them.

- If used properly Twitter is a great resource for valuable information

7. YouTube

- YouTube Really? Yes Really… Like Twitter YouTube is a great resource for quality information.

- Do simple searches for information or products; you’ll be surprised how much information you’ll find

- Companies like VMware have specific YouTube Channels and they frequently post Product and How To information

Here is my last link… This link contains most of the VMware Bloggers, Twitter Accounts, and RSS feeds in one spot.. http://vlp.vsphere-land.com/ << Priceless

All of this might seem a bit overbearing, and it can be at first. My recommendation is — start out small, read a few blogs, view some online content, ask questions, and repeat..

Before you know it you’ll be on the right track to staying current with virtualization.

What are some of the ways you stay current? Please post up!

Slow Windows 2008 VM Video Performance when viewing through an ESX console

Today one of my colleagues mentioned he was experiencing poor video performance with a Windows 2008 VM. When he accessed the VM via the vCenter Server console the mouse was performing poorly.

We did a bit of research and found the article below… it fixed our issue in a jiffy.

Note: This post/notes are simply for my reference and I don’t recommend you apply this to your environment…

Troubleshooting SVGA drivers installed with VMware Tools on Windows 7 and Windows 2008 R2 running on ESX 4.0

Details

- You receive a black screen on the virtual machine when using Windows 7 or Windows 2008 R2 as a guest operating system on ESX 4.0.

- You experience slow mouse performance on Windows 2008 R2 virtual machine.

Solution

This issue can occur due to the XPDM (SVGA) driver provided with VMware Tools. This is a legacy Windows driver and is not supported on Windows 7 and Windows 2008 R2 guest operating systems.

To work around this issue, replace the Display Adapter default-installed driver within the virtual machine with the driver contained in the wddm_video folder. In vCenter Server 4.1 (for Windows 2008 R2 operating systems), the wddm_video folder is in C:\Program Files\Common Files\VMware\Drivers.

To move a copy of this driver from the vCenter Server to the virtual machine:

- From the virtual machine, open a command-line connection to the c$ share on the vCenter Server.

- Navigate to C:\Program Files\Common Files\VMware\Drivers and copy the driver to a location on the virtual machine (C:\, for example).

- Within the virtual machine, right-click Computer > Manage > Diagnostics > Device Manager, then expand the Display Adapters selection.

- Right-click the Driver that is currently installed and choose Update Driver Software. Browse to the folder that you copied from vCenter Server, select it, then follow the wizard.

- When the wizard completes, reboot the virtual machine.

To resolve this issue, update to ESX 4.0 Update 1. A new WDDM driver is installed with the updated VMware Tools and is compatible with Windows 7 and Windows 2008 R2.

If you are able to update to ESX 4.0 Update 1, but receive a black screen on the virtual machine after changing to the new driver, click Edit Settings and change the video settings to 32MB.

If you cannot update to ESX 4.0 Update 1:

- Deselect the driver included with ESX 4.0.

- When you install VMware Tools, select Custom or Modify in the VMware Tools installation wizard

- Deselect the SVGA (XPDM) driver.

You can also remove the VMware Tools SVGA driver from the Device Manager after installing VMware Tools.

Note: To install the drivers successfully, ensure that the virtual machine hardware version is 7.

Test Lab – Day 5 Expanding the IOMega ix12-300r

Recently I installed an IOMega ix12-300r for our ESX test lab and it’s doing quite well.

However, I wanted to push our Iomega to about 1 Gb/s of sustained NFS traffic out of the available 2 Gb/s.

To do this I needed to expand our 2-drive storage pool to 4 drives.

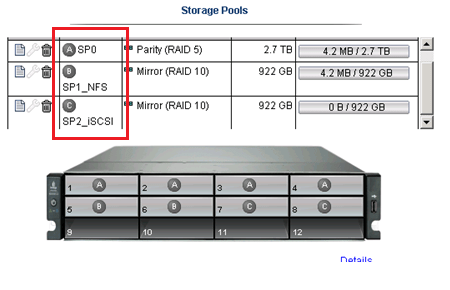

From a previous post we created 3 storage pools as seen below.

Storage Pool 0 (SP0) 4 Drives for basic file shares (CIFS)

Storage Pool 1 (SP1_NFS) 2 drives for ESX NFS Shares only

Storage Pool 2 (SP2_iSCSI) 2 drives dedicated for ESX iSCSI only

In this post I’m going to delete the unused SP2_iSCSI and add those drives to SP1_NFS

Note: This procedure is simply the pattern I used in my environment. I’m not stating this is the right way but simply the way it was done. I don’t recommend you use this pattern or use it for any type of validation. These are simply my notes, for my personal records, and nothing more.

Under settings select storage pools

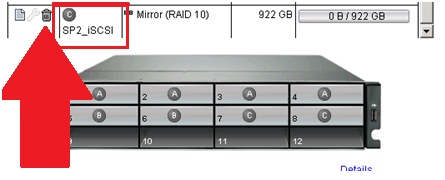

Select the trash can icon to delete the storage pool.

It prompted me to confirm and it deleted the storage pool.

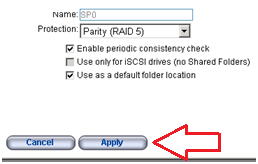

Next I chose the Edit icon on SP1_NFS, selected the drives I wanted, chose RAID 5, and pressed Apply.

From there it started to expand the 2-disk RAID 1 to a 4-disk RAID 5 storage pool.

Screenshot from the IOMega ix12 while it is being expanded…

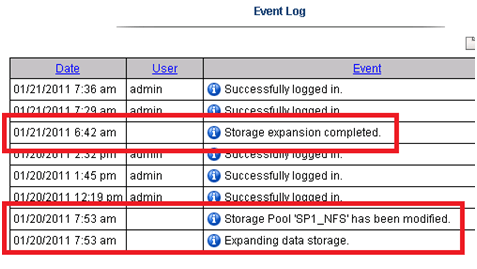

I then went to the Dashboard and under status I can view its progress…

ALL this with NO downtime to ESX; in fact, I’m writing this post from a VM as the expansion is happening.

It took about 11 Hours to rebuild the RAID set.

Tip: Use the event log under settings to determine how long the rebuild took.

The next day I checked in on ESX and it was reporting the updated store size.
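As a rough guide to how much space a change like this buys, here is a minimal Python sketch of usable capacity for the two layouts involved. The drive size is hypothetical (the post doesn't state it), the helper name is made up, and filesystem or NAS overhead is ignored.

```python
def usable_capacity(level: str, drive_count: int, drive_size_tb: float) -> float:
    """Usable capacity in TB for two simple RAID layouts, ignoring overhead."""
    if level == "RAID1":
        if drive_count != 2:
            raise ValueError("this sketch models RAID 1 as a two-drive mirror")
        return drive_size_tb  # one drive's worth; the other holds the mirror copy
    if level == "RAID5":
        if drive_count < 3:
            raise ValueError("RAID 5 needs at least three drives")
        return (drive_count - 1) * drive_size_tb  # one drive's worth goes to parity
    raise ValueError(f"unsupported level: {level}")

DRIVE_SIZE_TB = 1.0  # hypothetical drive size, for illustration only

before = usable_capacity("RAID1", 2, DRIVE_SIZE_TB)
after = usable_capacity("RAID5", 4, DRIVE_SIZE_TB)
print(before, after, after / before)
```

Whatever the real drive size, going from a 2-disk mirror to a 4-disk RAID 5 set roughly triples the usable space, since the ratio doesn't depend on the size of the drives.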

Summary…

To be able to expand your storage pool that houses your ESXi test environment with no down time is extremely beneficial and a very cool feature.

Once again IOMega is living up to its tag line – IOmega Kicks NAS!

Tomorrow we’ll see how it performs when we push a higher load to it.

Test Lab – Day 4 Xsigo Redundancy testing with ESXi

Today I tested Xsigo redundancy capabilities within the ESXi test environment.

So far I have built up an environment with 4 x ESXi 4.1 hosts, each with a Single VM, and 2 Xsigo VP780’s.

Each VM is pretty much idle for this test, however tomorrow I plan to introduce some heavier IP and NFS traffic and re-run the tests below.

I used a Laptop and the ESXi console in tech support mode to capture the results.

Keep in mind this deployment is a SINGLE site scenario.

This means both Xsigo are considered at the same site and each ESXi host is connected to the A & B Xsigo.

Note: This test procedure is simply the pattern I used to test my environment. I’m not stating this is the right way to test an environment but simply the way it was done. I don’t recommend you use this pattern to test your systems or use it for validation. These are simply my notes, for my personal records, and nothing more.

Reminder:

XNA, XNB are Xsigo Network on Xsigo Device A or B and are meant for IP Network Traffic.

XSA, XSB are Xsigo Storage or NFS on Xsigo Device A or B and are meant for NFS Data Traffic.

Test 1 – LIVE I/O Card Replacement for Bay 10 for IP Networking

Summary –

Xsigo A sent a message to Xsigo support stating the I/O Module had an issue. Xsigo support contacted me and mailed out the replacement module.

The affected module controls the IP network traffic (VM, Management, vMotion).

Usually, an I/O Module going out is bad news. However, this is a POC (Proof of Concept) so I used this “blip” to our advantage and captured the test results.

Device – Xsigo A

Is the module to be affected currently active? Yes

Pre-Procedure –

Validate by Xsigo CLI – ‘show vnics’ to see if vnics are in the up state – OKAY

Ensure ESX Hosts vNICs are in Active mode and not standby – OKAY

Ensure ESX Hosts network configuration is setup for XNA and XNB in Active Mode – OKAY

Procedure –

Follow replacement procedure supplied with I/O Replacement Module

Basic Steps supplied by Xsigo –

- Press the Eject button for 5 seconds to gracefully shut down the I/O card

- Wait for the LED to go solid blue

- Remove card

- Insert new card

- Wait for the I/O card to come online; the LED will go from blue to yellow/green

- The Xsigo VP780 will update the card as needed (firmware and attached vNICs)

- Once the card is online you’re ready to go

Expected results –

All active IP traffic for ESXi (including VM’s) will continue to pass through XNB

All active IP traffic for ESXi (including VM’s) might see a quick drop depending on which XN# is active

vCenter Server should show XNA as unavailable until new I/O Module is online

The I/O Module should take about 5 Minutes to come online

How I will quantify results –

All active IP traffic for ESXi (including VM’s) will continue to pass through XNB

- Active PING to ESXi Host (Management Network, VM’s) and other devices to ensure they stay up

All active IP traffic for ESXi (including VM’s) might see a quick drop depending on which XN# is active

- Active PING to ESXi Host (Management Network, VM’s)

vCenter Server should show XNA as unavailable until new I/O Module is online

- In vCenter Server under Network Configuration check to see if XNA goes down and back to active

The I/O Module should take about 5 Minutes to come online

- I will monitor the I/O Module to see how long it takes to come online
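The ping-based checks above boil down to counting dropped replies. Here is a minimal Python sketch of that tally; it assumes the ping outcomes have already been captured as a list of True/False values (the sample data is made up), and it is not how the results below were actually recorded.

```python
from typing import Iterable, Tuple

def summarize_pings(replies: Iterable[bool]) -> Tuple[int, int, int]:
    """Return (sent, lost, longest run of consecutive losses) for a series of ping outcomes."""
    sent = lost = longest = current = 0
    for ok in replies:
        sent += 1
        if ok:
            current = 0  # a reply ends any outage in progress
        else:
            lost += 1
            current += 1
            longest = max(longest, current)
    return sent, lost, longest

# Hypothetical capture: True = reply received, False = request timed out
capture = [True, True, False, True, True, True]
sent, lost, longest = summarize_pings(capture)
print(f"sent={sent} lost={lost} longest_outage={longest}")
```

Tracking the longest run of losses separates a single dropped ping during failover from a sustained outage.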

Actual Results –

Pings –

| From Device | Destination Device | Type | Result During | Result coming online / After |
|---|---|---|---|---|
| External Laptop | Windows 7 VM | VM | No Ping Loss | No Ping Loss |
| External Laptop | vCenter Server | VM | One Ping Loss | No Ping Loss |
| External Laptop | ESX Host 1 | ESX | One Ping Loss | One Ping Loss |
| External Laptop | ESX Host 2 | ESX | One Ping Loss | One Ping Loss |
| External Laptop | ESX Host 3 | ESX | No Loss | One Ping Loss |
| External Laptop | ESX Host 4 | ESX | No Loss | No Loss |
| ESX Host | IOMega Storage | NFS | No Loss | No Loss |

From vCenter Server –

XNA status showing down during module removal on all ESX Hosts

vCenter Server triggered the ‘Network uplink redundancy lost’ – Alarm

I/O Module Online –

The I/O Module took about 4 minutes to come online.

Test 1 Summary –

All results are as expected. There was only very minor ping loss, which for us is nothing to be worried about

Test 2 – Remove fibre 10gig Links on Bay 10 for IP Networking

Summary –

This test will simulate fibre connectivity going down for the IP network traffic.

I will simulate the outage by disconnecting the fibre connection from Xsigo A, measure/record the results, return the environment to normal, and then repeat for Xsigo B.

Device – Xsigo A and B

Is this device currently active? Yes

Pre-Procedure –

Validate by Xsigo CLI – ‘show vnics’ to see if vnics are in up state

- Xsigo A and B are reporting both I/O Modules are functional

Ensure ESX Host vNICs are in Active mode and not standby

- vCenter server is reporting all communication is normal

Procedure –

Remove the fibre connection from I/O Module in Bay 10 – Xsigo A

Measure results via Ping and vCenter Server

Replace the cable, ensure system is stable, and repeat for Xsigo B device

Expected results –

All active IP traffic for ESXi (including VM’s) will continue to pass through the redundant XN# adapter

All active IP traffic for ESXi (including VM’s) might see a quick drop if its traffic is flowing through the affected adapter.

vCenter Server should show XN# as unavailable until fibre is reconnected

How I will quantify results –

All active IP traffic for ESXi (including VM’s) will continue to pass through XNB

- Active PING to the ESXi Hosts (Management Network, VM’s) and other devices to ensure they stay up

All active IP traffic for ESXi (including VM’s) might see a quick drop depending on which XN# is active

- Active PING to ESXi Host (Management Network, VM’s)

vCenter Server should show XNA as unavailable until fibre is reconnected

- In vCenter Server under Network Configuration check to see if XNA goes down and back to active
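The ping checks above can be written down in the same wording the result tables use. Here is a minimal Python sketch, assuming each ping is captured as True (reply) or False (timeout). The function names and the sample reply lists are made up for illustration and are not taken from the actual test run.

```python
from typing import Sequence

# Wording used in the result tables for small loss counts.
LABELS = {0: "No Ping Loss", 1: "One Ping Loss", 2: "Two Ping Loss"}


def loss_label(replies: Sequence[bool]) -> str:
    """Turn a sequence of ping replies (True = reply) into a table label."""
    lost = sum(1 for ok in replies if not ok)
    # Fall back to a numeric label for counts the tables never needed.
    return LABELS.get(lost, f"{lost} Ping Loss")


def table_row(source: str, destination: str, kind: str,
              during: Sequence[bool], after: Sequence[bool]) -> str:
    """Format one result row: loss during the outage, then loss on recovery."""
    return (f"| {source} | {destination} | {kind} | "
            f"{loss_label(during)} | {loss_label(after)} |")


# Hypothetical sample: one dropped ping during the outage, none afterwards.
row = table_row("External Laptop", "ESX Host 1", "ESX",
                during=[True, False, True], after=[True, True, True])
print(row)
# | External Laptop | ESX Host 1 | ESX | One Ping Loss | No Ping Loss |
```

Keeping the "during" and "after" replies in separate lists mirrors the two result columns, so a drop on failover and a drop on failback are never mixed together.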

Actual Results –

Xsigo A Results…

Pings –

| From Device | Destination Device | Type | Result During | Result coming online / After |

| External Laptop | Windows 7 VM | VM | No Ping Loss | No Ping Loss |

| External Laptop | vCenter Server | VM | No Ping Loss | One Ping Loss |

| External Laptop | ESX Host 1 | ESX | One Ping Loss | No Ping Loss |

| External Laptop | ESX Host 2 | ESX | No Ping Loss | One Ping Loss |

| External Laptop | ESX Host 3 | ESX | No Ping Loss | One Ping Loss |

| External Laptop | ESX Host 4 | ESX | One Ping Loss | One Ping Loss |

| ESX Host | IOMega Storage | NFS | No Ping Loss | No Ping Loss |

From vCenter Server –

XNA status showing down during module removal on all ESX Hosts

vCenter Server triggered the ‘Network uplink redundancy lost’ – Alarm

Xsigo B Results…

Pings –

| From Device | Destination Device | Type | Result During | Result coming online / After |

| External Laptop | Windows 7 VM | VM | One Ping Loss | One Ping Loss |

| External Laptop | vCenter Server | VM | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 1 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 2 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 3 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 4 | ESX | No Ping Loss | One Ping Loss |

| ESX Host | IOMega Storage | NFS | No Ping Loss | No Ping Loss |

From vCenter Server –

XNB status showing down during module removal on all ESX Hosts

vCenter Server triggered the ‘Network uplink redundancy lost’ – Alarm

Test 2 Summary –

All results are as expected. There was only very minor ping loss, which is nothing for us to worry about.
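To put a number on "very minor", the Xsigo A table from Test 2 can be tallied with a few lines of Python. This is only a sketch. The rows are copied from the table above, while the function and variable names are my own invention.

```python
from collections import Counter

# (destination, result during, result after), copied from the
# Test 2 "Xsigo A Results" ping table.
XSIGO_A_TEST2 = [
    ("Windows 7 VM", "No Ping Loss", "No Ping Loss"),
    ("vCenter Server", "No Ping Loss", "One Ping Loss"),
    ("ESX Host 1", "One Ping Loss", "No Ping Loss"),
    ("ESX Host 2", "No Ping Loss", "One Ping Loss"),
    ("ESX Host 3", "No Ping Loss", "One Ping Loss"),
    ("ESX Host 4", "One Ping Loss", "One Ping Loss"),
    ("IOMega Storage", "No Ping Loss", "No Ping Loss"),
]


def tally(rows):
    """Count how often each label appears in the 'during' and 'after' columns."""
    during = Counter(row[1] for row in rows)
    after = Counter(row[2] for row in rows)
    return during, after


def affected(rows):
    """List destinations that lost at least one ping in either phase."""
    return [dest for dest, during, after in rows
            if during != "No Ping Loss" or after != "No Ping Loss"]


during, after = tally(XSIGO_A_TEST2)
print(during["One Ping Loss"], after["One Ping Loss"])  # 2 4
print(affected(XSIGO_A_TEST2))
```

For this table, two destinations dropped a single ping during the pull and four dropped one when the link came back, and no destination lost more than one ping in either phase.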

Test 3 – Remove fibre 10g Links to NFS

Summary –

This test will simulate fibre connectivity going down for the NFS network.

I will simulate the outage by disconnecting the fibre connection from Xsigo A, measure/record the results, return the environment to normal, and then repeat for Xsigo B.

Device – Xsigo A and B

Is this device currently active? Yes

Pre-Procedure –

Validate by Xsigo CLI – ‘show vnics’ to see if vnics are in up state

- Xsigo A and B are reporting both I/O Modules are functional

Ensure ESX Host vNICs are in Active mode and not standby

- vCenter server is reporting all communication is normal

Procedure –

Remove the fibre connection from I/O Module in Bay 11 – Xsigo A

Measure results via Ping, vCenter Server, and check for any VM GUI hesitation.

Replace the cable, ensure system is stable, and repeat for Xsigo B device

Expected results –

All active NFS traffic for ESXi (including VM’s) will continue to pass through the redundant XS# adapter

All active NFS traffic for ESXi (including VM’s) might see a quick drop if its traffic is flowing through the affected adapter.

vCenter Server should show XS# as unavailable until fibre is reconnected

I don’t expect ESXi to take any of the NFS datastores offline

How I will quantify results –

All active NFS traffic for ESXi (including VM’s) will continue to pass through XSB

- Active PING to ESXi Host (Management Network, VM’s) and other devices to ensure they stay up

All active NFS traffic for ESXi (including VM’s) might see a quick drop depending on which XS# is active

- Active PING to ESXi Host (Storage, Management Network, VM’s)

vCenter Server should show XS# as unavailable until fibre is reconnected

- In vCenter Server under Network Configuration check to see if XS# goes down and back to active

I don’t expect ESXi to take any of the NFS datastores offline

- In vCenter Server under storage, I will determine if the store goes offline

Actual Results –

Xsigo A Results…

Pings –

| From Device | Destination Device | Type | Result During | Result coming online / After |

| External Laptop | Windows 7 VM | VM | No Ping Loss | No Ping Loss |

| External Laptop | vCenter Server | VM | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 1 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 2 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 3 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 4 | ESX | No Ping Loss | No Ping Loss |

| ESX Host | IOMega Storage | NFS | No Ping Loss | Two Ping Loss |

From vCenter Server –

XSA & XSB status showing down during fibre removal on all ESX Hosts

vCenter Server triggered the ‘Network uplink redundancy lost’ – Alarm

No VM GUI Hesitation reported

Xsigo B Results…

Pings –

| From Device | Destination Device | Type | Result During | Result coming online / After |

| External Laptop | Windows 7 VM | VM | No Ping Loss | No Ping Loss |

| External Laptop | vCenter Server | VM | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 1 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 2 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 3 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 4 | ESX | No Ping Loss | No Ping Loss |

| ESX Host | IOMega Storage | NFS | No Ping Loss | No Ping Loss |

From vCenter Server –

XNB status showing down during module removal on all ESX Hosts

vCenter Server triggered the ‘Network uplink redundancy lost’ – Alarm

No VM GUI Hesitation reported

Test 3 Summary –

All results are as expected. There was only very minor ping loss, which is nothing for us to worry about.

Test 4 – Remove InfiniBand cables from the ESXi HBA

Summary –

During this test, I will remove all the InfiniBand cables (4 of them) from the ESXi HBA.

I will disconnect the InfiniBand connection to Xsigo A first, measure/record the results, return the environment to normal, and then repeat for Xsigo B.

Pre-Procedure –

Validate by Xsigo CLI – ‘show vnics’ to see if vnics are in up state

- Xsigo A and B are reporting both I/O Modules are functional

Ensure ESX Host vNICs are in Active mode and not standby

- vCenter server is reporting all communication is normal

Procedure –

Remove the InfiniBand cable from each ESXi Host attaching to Xsigo A

Measure results via Ping, vCenter Server, and check for any VM GUI hesitation.

Replace the cables, ensure system is stable, and repeat for Xsigo B device

Expected results –

All active traffic (IP or NFS) for ESXi (including VM’s) will continue to pass through the redundant XNB or XSB accordingly.

All active traffic (IP or NFS) for ESXi (including VM’s) might see a quick drop if its traffic is flowing through the affected adapter.

vCenter Server should show XNA and XSA as unavailable until cable is reconnected

I don’t expect ESXi to take any of the NFS datastores offline

How I will quantify results –

All active traffic (IP or NFS) for ESXi (including VM’s) will continue to pass through the redundant XNB or XSB accordingly.

- Active PING to ESXi Host (Management Network, VM’s) and other devices to ensure they stay up

All active traffic (IP or NFS) for ESXi (including VM’s) might see a quick drop if its traffic is flowing through the affected adapter.

- Active PING to ESXi Host (Storage, Management Network, VM’s)

vCenter Server should show XNA and XSA as unavailable until cable is reconnected

- In vCenter Server under Network Configuration check to see if XS# goes down and back to active

I don’t expect ESXi to take any of the NFS datastores offline

- In vCenter Server under storage, I will determine if the store goes offline

Actual Results –

Xsigo A Results…

Pings –

| From Device | Destination Device | Type | Result During | Result coming online / After |

| External Laptop | Windows 7 VM | VM | No Ping Loss | Two Ping Loss |

| External Laptop | vCenter Server | VM | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 1 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 2 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 3 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 4 | ESX | No Ping Loss | No Ping Loss |

| ESX Host | IOMega Storage | NFS | No Ping Loss | No Ping Loss |

From vCenter Server –

XSA & XNA status showing down during fibre removal on all ESX Hosts

vCenter Server triggered the ‘Network uplink redundancy lost’ – Alarm

No VM GUI Hesitation reported

NFS Storage did not go offline

Xsigo B Results…

Pings –

| From Device | Destination Device | Type | Result During | Result coming online / After |

| External Laptop | Windows 7 VM | VM | No Ping Loss | No Ping Loss |

| External Laptop | vCenter Server | VM | One Ping Loss | One Ping Loss |

| External Laptop | ESX Host 1 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 2 | ESX | No Ping Loss | One Ping Loss |

| External Laptop | ESX Host 3 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 4 | ESX | One Ping Loss | No Ping Loss |

| ESX Host | IOMega Storage | NFS | No Ping Loss | No Ping Loss |

From vCenter Server –

XNB & XSB status showing down during module removal on all ESX Hosts

vCenter Server triggered the ‘Network uplink redundancy lost’ – Alarm

NFS Storage did not go offline

Test 4 Summary –

All results are as expected. There was only very minor ping loss, which is nothing for us to worry about.

Test 5 – Pull Power on active Xsigo vp780

Summary –

During this test, I will remove all the power cords from Xsigo A.

I will disconnect the power cords from Xsigo A first, measure/record the results, return the environment to normal, and then repeat for Xsigo B.

Pre-Procedure –

Validate by Xsigo CLI – ‘show vnics’ to see if vnics are in up state

- Xsigo A and B are reporting both I/O Modules are functional

Ensure ESX Host vNICs are in Active mode and not standby

- vCenter server is reporting all communication is normal

Procedure –

Remove power cables from Xsigo A

Measure results via Ping, vCenter Server, and check for any VM GUI hesitation.

Replace the cables, ensure system is stable, and repeat for Xsigo B device

Expected results –

All active traffic (IP or NFS) for ESXi (including VM’s) will continue to pass through the redundant XNB or XSB accordingly.

All active traffic (IP or NFS) for ESXi (including VM’s) might see a quick drop if its traffic is flowing through the affected adapter.

vCenter Server should show XNA and XSA as unavailable until cable is reconnected

I don’t expect ESXi to take any of the NFS datastores offline

How I will quantify results –

All active traffic (IP or NFS) for ESXi (including VM’s) will continue to pass through the redundant XNB or XSB accordingly.

- Active PING to ESXi Host (Management Network, VM’s) and other devices to ensure they stay up

All active traffic (IP or NFS) for ESXi (including VM’s) might see a quick drop if its traffic is flowing through the affected adapter.

- Active PING to ESXi Host (Storage, Management Network, VM’s)

vCenter Server should show XNA and XSA as unavailable until cable is reconnected

- In vCenter Server under Network Configuration check to see if XS# goes down and back to active

I don’t expect ESXi to take any of the NFS datastores offline

- In vCenter Server under storage, I will determine if the store goes offline

Actual Results –

Xsigo A Results…

Pings –

| From Device | Destination Device | Type | Result During | Result coming online / After |

| External Laptop | Windows 7 VM | VM | No Ping Loss | No Ping Loss |

| External Laptop | vCenter Server | VM | One Ping Loss | One Ping Loss |

| External Laptop | ESX Host 1 | ESX | One Ping Loss | One Ping Loss |

| External Laptop | ESX Host 2 | ESX | One Ping Loss | One Ping Loss |

| External Laptop | ESX Host 3 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 4 | ESX | No Ping Loss | One Ping Loss |

| ESX Host | IOMega Storage | NFS | No Ping Loss | No Ping Loss |

From vCenter Server –

XSA & XNA status showing down during the removal on all ESX Hosts

vCenter Server triggered the ‘Network uplink redundancy lost’ – Alarm

No VM GUI Hesitation reported

NFS Storage did not go offline

Xsigo B Results…

Pings –

| From Device | Destination Device | Type | Result During | Result coming online / After |

| External Laptop | Windows 7 VM | VM | One Ping Loss | No Ping Loss |

| External Laptop | vCenter Server | VM | One Ping Loss | One Ping Loss |

| External Laptop | ESX Host 1 | ESX | No Ping Loss | No Ping Loss |

| External Laptop | ESX Host 2 | ESX | No Ping Loss | One Ping Loss |

| External Laptop | ESX Host 3 | ESX | One Ping Loss | One Ping Loss |

| External Laptop | ESX Host 4 | ESX | One Ping Loss | No Ping Loss |

| ESX Host | IOMega Storage | NFS | One Ping Loss | No Ping Loss |

From vCenter Server –

XNB & XSB status showing down during module removal on all ESX Hosts

vCenter Server triggered the ‘Network uplink redundancy lost’ – Alarm

No VM GUI Hesitation reported

NFS Storage did not go offline

Test 5 Summary –

All results are as expected. There was only very minor ping loss, which is nothing for us to worry about.

It took about 10 minutes for the Xsigo to come up and online, from the point I pulled the power cords to the point ESXi reported the vnics were online.
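A recovery time like this could also be pulled from a timestamped status log instead of a stopwatch. The Python sketch below assumes a list of (timestamp, is_up) samples in time order. The sample times are made up and the function name is hypothetical, so treat it as one possible approach rather than how I measured it.

```python
from datetime import datetime, timedelta
from typing import Iterable, Optional, Tuple


def recovery_time(samples: Iterable[Tuple[datetime, bool]]) -> Optional[timedelta]:
    """Return the time from the first 'down' sample to the next 'up' sample.

    Samples must be in time order. Returns None if the device never went
    down, or never came back within the samples given.
    """
    down_at = None
    for stamp, is_up in samples:
        if not is_up and down_at is None:
            down_at = stamp            # first sample where the vnics were down
        elif is_up and down_at is not None:
            return stamp - down_at     # first sample where they are back up
    return None


# Made-up samples: power pulled at 10:01, vnics reported online again at 10:11.
start = datetime(2011, 1, 1, 10, 0, 0)
samples = [
    (start, True),
    (start + timedelta(minutes=1), False),
    (start + timedelta(minutes=5), False),
    (start + timedelta(minutes=11), True),
]
print(recovery_time(samples))  # 0:10:00
```

The result is only as precise as the sampling interval, so a check every few seconds would be needed to say much more than "about 10 minutes".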

Overall Thoughts…

Under very low load the Xsigo performed as expected with ESXi. So far the redundancy testing is going well.

Tomorrow I plan to place a pretty hefty load on the Xsigo and IOMega to see how they will perform under the same conditions.

I’m looking forward to seeing if the Xsigo can perform just as well under load.

Trivia Question…

How do you know if someone has rebooted and watched an Xsigo boot?

This very cool logo comes up on the bootup screen! Now that’s Old School and very cool!

Great Post on ESXi 4.1

I was exploring HA / LDvmotion setup today and came across this great post on ESXi 4.1.

It contains a lot of great information on ESXi 4.1.

I know a lot of you have questions about ESXi 4.1 and I thought I would share it out…

Here’s the link to the post…

http://blog.mvaughn.us/2010/07/13/vsphere-4-1/

The entire blog was really well done and I really liked the links at the end…

- KB Article: 1022842 – Changes to DRS in vSphere 4.1

- KB Article: 1022290 – USB support for ESX/ESXi 4.1

- KB Article: 1022263 – Deploying ESXi 4.1 using the Scripted Install feature

- KB Article: 1021953 – I/O Statistics in vSphere 4.1

- KB Article: 1022851 – Changes to vMotion in vSphere 4.1

- KB Article: 1022104 – Upgrading to ESX 4.1 and vCenter Server 4.1 best practices

- KB Article: 1023118 – Changes to VMware Support Options in vSphere 4.1

- KB Article: 1021970 – Overview of Active Directory integration in ESX 4.1 and ESXi 4.1

- KB Article: 1021769 – Configuring IPv6 with ESX and ESXi 4.1

- KB Article: 1022844 – Changes to Fault Tolerance in vSphere 4.1

- KB Article: 1023990 – VMware ESX and ESXi 4.1 Comparison

- KB Article: 1022289 – Changing the number of virtual CPUs per virtual socket in ESX/ESXi 4.1