Home Lab GEN V: The Quest for More Cores! Design Considerations

** NOV-2020 Update: I’ve updated my Home Lab to Gen 7; please go to my BLOG Series for more information on this update. **

** MAR-2020 Update: Though I had good luck with the HP 593742-001 NC523SFP DUAL PORT SFP+ 10Gb card in my Gen 4 Home Lab, I found it faulty when running in my Gen 5 Home Lab. It could be that I was using a PCIe x4 slot in Gen 4, or it could be that the card runs too hot to touch. This card has since been removed from the VMware HCL, HP has advisories out about it, and after some poking around I found there seem to be lots of issues with it. **

Original Post Below:

I have decided to update my Home Lab to Generation V. In doing this, I am going to follow the best practices laid out in my ‘Home Lab Generations’ and ‘VMware Home Labs: a Definitive guide’. As you read through the “Home Lab Generations” page, you should notice a theme around planning each generation and documenting its outcomes and unplanned items. In this blog post, I am going to start laying out the Design Considerations, which include the initial use case/goals and the resources needed for GEN V.

First off, let’s answer why I am updating my home lab. Over the past 4+ Home Lab generations I had deemed that CPUs with 4 physical cores and up to 32GB RAM would meet the demands of my use cases, and in most cases they did. However, I recently started having resource constraints when I wanted to use multiple VMware products, which forced me to do a bit of shuffling to run the software I wanted. This is not the fault of VMware; there are just so many products with resource demands, and my current home lab was undersized. Additionally, the fan noise from the InfiniBand switch and other components was just too loud.

First – Here are my initial use cases and goals:

- Be able to run a vSphere 6.x and vSAN environment

- Reuse as much as possible from the Gen IV Home Lab to keep costs down

- Choose products that bring value to the goals and are cost effective; being on the VMware HCL is a plus but not necessary for a home lab

- Move networking (vSAN / FT) from 40Gb InfiniBand to a 10GbE switch

- Have enough CPU cores and RAM to be able to support multiple VMware products (ESXi, VCSA, vSAN, vRO, vRA, NSX, LogInsight)

- Be able to fit the environment into 3 ESXi hosts

- The environment should run well, but doesn’t have to be a production level environment

Second – Evaluate Software, Hardware, and VM requirements:

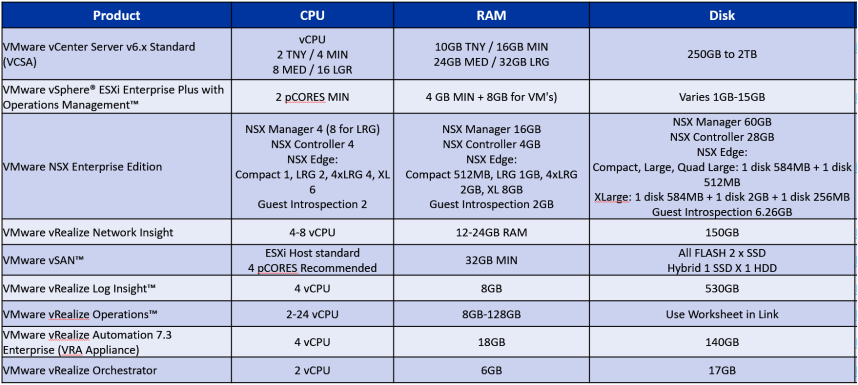

Before I run off and start buying items, I need to look at the hardware requirements of the software. Using the table from my ‘HOME LABS: A DEFINITIVE GUIDE’, I can start to figure out how much CPU, RAM, and disk space I’ll need. Through experience working with these products, I already know my dual-port 10GbE network per host is adequate to support them. It’s the other items I’m concerned with in this build.

Using this information I can quickly see I need the following across all my hosts:

- CPU: ~32 cores, of which 30 are vCores and 4 are pCores

- RAM: ~95GB RAM, of which 64GB is used by VMs and 32GB by ESXi + vSAN

- Disk: ~1.3 TB of disk space

- For vROPS I used this sizer: http://vropssizer.vmware.com/sizing-wizard/choose-installation

Lastly, I figure I run between 20 and 30 VMs for testing. These could be Windows, Linux, etc., and can be oversubscribed. Sizing for 30 VMs:

- CPU: 30 x 2 vCPU = ~60 vCPU

- RAM: 30 x 8GB = ~240GB

- Disk: 30 x 30GB = ~900GB

Total resource needs for the Cluster:

- CPUs: 92 cores

- RAM: 335GB

- Disk: 2.2TB

Third – Home Lab Design Considerations

As you can see from the totals above, my existing Gen IV Home Lab would not be able to keep up. Keep in mind that the CPU/RAM totals assume a 1:1 ratio and don’t account for consolidation; for a home lab I should be able to reduce these numbers quite a bit. Next, I review my Home Lab Design Considerations. These, plus the information from step two, will help me decide which hardware to select.
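To put a rough number on that consolidation, the 1:1 totals can be divided by the physical capacity of the planned hosts. This Python sketch assumes three hosts with 128GB RAM each and 8-core Xeon E5-2640 v2 CPUs (the parts chosen later in this post); how many sockets are populated per host is an assumption, so both one and two are shown. The `consolidation_ratio` helper is a hypothetical name.

```python
def consolidation_ratio(demand: float, capacity: float) -> float:
    """How many units of demand each unit of physical capacity must carry."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    return demand / capacity


HOSTS = 3
CORES_PER_CPU = 8       # Xeon E5-2640 v2
RAM_PER_HOST_GB = 128

VCPU_DEMAND = 92        # 1:1 totals from step two
RAM_DEMAND_GB = 335

for sockets in (1, 2):  # assumption: one or two CPUs populated per host
    physical_cores = HOSTS * sockets * CORES_PER_CPU
    cpu_ratio = consolidation_ratio(VCPU_DEMAND, physical_cores)
    print(f"{sockets} socket(s): {physical_cores} cores, vCPU:pCore = {cpu_ratio:.2f}:1")

ram_ratio = consolidation_ratio(RAM_DEMAND_GB, HOSTS * RAM_PER_HOST_GB)
print(f"RAM demand vs. installed: {ram_ratio:.2f}")
```

With two sockets per host the CPU demand works out to just under 2 vCPU per physical core (about 3.83:1 with one socket), while the 335GB RAM demand already fits inside the 384GB installed even at 1:1.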

Home Lab Design Considerations:

| Design Considerations | Description |
| --- | --- |
| Initial Cost | How much does the home lab solution cost to build out? This is always top of mind for me, and I do a lot of cost comparisons, research, and evaluation. For this build I found that reusing what I have plus purchasing a few more items kept my cost lower and delivered more value than buying new or even used hardware. |
| Noise | When the home lab is running, how much noise will it produce, and are the noise levels appropriate for your use case? In my design I’m looking to reduce main fan noise. My lab is in my home office, so it needs to be whisper quiet. |
| Heat / Power Consumption | Does the home lab produce too much heat for the intended location? Heat/power is always a balancing act. I want something that will not heat up my room and has enough cores to do the job, but doesn’t consume so much power that I don’t want to turn it on. |
| Monthly Operational Cost | Based on power (watts) and the average cost of electricity in the USA, what is the estimated cost of running 24x7 for 30 days? My home lab power needs are as follows: |
| Footprint Space and Flexibility | How much space does the solution take up? Based on the type of product you choose, how flexible is the solution when hardware or other changes are needed to expand? What I’m really looking for are 3 x tall tower PC cases with maximum flexibility: where the power supplies are located, how many drives the case can hold, support for many different motherboards, and vertical/horizontal slots for host cards. |
| Bleeding Edge VMware Products | Software products are constantly adding new requirements for home labs (for example, 10GbE networks or more HDD/SSD). How does the solution align to bleeding-edge products without a major overhaul? If I build this system beefy enough, I should be in a position to run just about any software that comes my way. |
| Hands on Software | Measures viability from the ESXi layer through the entire stack of products. My new system should be designed to accommodate the software stack mentioned in step two. |
| Hands on Hardware | Considers how effectively the hardware solution maps to real-world technologies. Choosing a system that allows the most flexibility is key to being future-looking. |
| ESXi / vSAN HCL Support | How does the hardware align to the hardware compatibility guides? Not top of mind for a home lab, as I’m not looking for VMware to support it. However, the closer I can get to the HCL, the better off I will be. |
| HCI (Hyper-Converged Infrastructure) | How well does the solution adapt to HCI (vSAN)? I should ensure that the JBOD disk controller and NIC each have a PCIe x8 slot or better, and that I can fit many drives into my case. |
| Refresh Cost | Financially, what would it take to refresh, replace, or update the hardware solution? Consider how adaptable the solution is to changing hardware and software demands. I want to choose products that are cost effective and that I can reuse down the road. This should put my lab in a position to keep costs down. |
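The Monthly Operational Cost consideration boils down to one formula: watts ÷ 1000 × hours in the month × price per kWh. A minimal Python sketch follows; the 300W draw and $0.13/kWh rate in the example call are placeholder values for illustration only, not measurements from this lab or an official U.S. average.

```python
HOURS_PER_MONTH = 24 * 30  # running 24x7 for 30 days


def monthly_cost(watts: float, price_per_kwh: float, hours: float = HOURS_PER_MONTH) -> float:
    """Estimated electricity cost in dollars for a constant load."""
    kwh = watts / 1000 * hours
    return kwh * price_per_kwh


# Placeholder inputs: a 300W average draw at $0.13 per kWh.
print(f"${monthly_cost(300, 0.13):.2f} per month")  # $28.08 per month
```

For a multi-host lab, pass the combined draw of all hosts and switches, or sum the per-host results.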

Fourth – Choosing Hardware

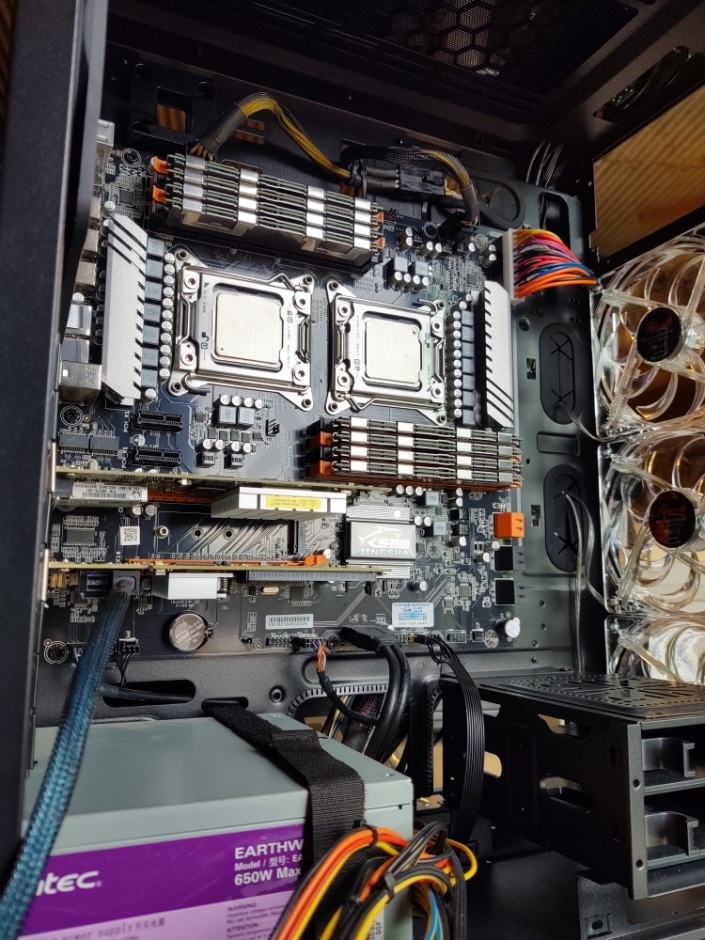

Based on my estimations above, I’m going to need a very flexible case, a dual-CPU mobo, lots of RAM, and good network connectivity.

Here is what each Host will have:

- Rosewill RISE Case

- JINGSHA EATX X79 Dual CPU motherboard (worked with ESXi 6.7; not tested with 7.0)

- 128GB DDR3 ECC RAM

- 4 x 200GB SAS SSD

- 4 x 600GB SAS HDD

- 1 x IBM 5210 JBOD disk controller

- 1 x HP 593742-001 NC523SFP DUAL PORT SFP+ 10Gb card (later found faulty with ESXi), connected to a MikroTik 10GbE CRS309 switch
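As a rough check against the ~2.2TB disk estimate from step two, the per-host drive counts above can be turned into usable vSAN capacity. This Python sketch assumes a hybrid vSAN layout in which the 200GB SSDs act only as cache (so they add no capacity), the 600GB HDDs form the capacity tier, and objects use RAID-1 mirroring with failures to tolerate = 1 (two copies of each object). It ignores slack space and on-disk overhead, so real usable space will be lower.

```python
HOSTS = 3
HDD_PER_HOST = 4
HDD_SIZE_GB = 600
# The 4 x 200GB SSDs per host are cache in a hybrid layout and add no capacity.
FTT = 1  # RAID-1 mirroring keeps FTT + 1 copies of each object


def raw_capacity_gb(hosts: int, disks_per_host: int, disk_gb: int) -> int:
    """Total raw capacity-tier space across the cluster."""
    return hosts * disks_per_host * disk_gb


def mirrored_usable_gb(raw_gb: float, ftt: int) -> float:
    """Approximate usable space under RAID-1 mirroring (no slack or overhead)."""
    return raw_gb / (ftt + 1)


raw = raw_capacity_gb(HOSTS, HDD_PER_HOST, HDD_SIZE_GB)
usable = mirrored_usable_gb(raw, FTT)
print(f"raw capacity tier: {raw} GB, usable before slack: {usable:.0f} GB")
print(f"fits the ~2,200 GB estimate: {usable >= 2200}")
```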

Here are the resources I’ll need to build out my 3 hosts:

- To meet the initial use case/goals, I will be investing quite a bit into this total refresh.

- Here are some of the initial GEN V resource choices (Still in the works and not all proven out)

- Purchase Items:

  - Mobo: JINGSHA EATX X79 Dual CPU motherboard LGA 2011 Supports Xeon v2 processor ($86 Alibaba)

  - Mobo Stands: 4mm Nylon Plastic Pillar (Amazon $8)

  - RAM: 128GB DDR3 ECC (Ebay $110)

  - CPU: Xeon E5-2640 v2 8 Cores / 16 HT (Ebay $30)

  - CPU Cooler: DEEPCOOL GAMMAXX 400 (Amazon $19)

  - Video: ASUS Neon PCIe 1x with DMS-59 Splitter (Ebay $15)

  - Video Riser: PCI-E 1x to 16x Riser Adapter (Amazon $4)

  - DISK: 600GB SSD (Ebay $80 for 10 Drives)

  - Power Supply Adapter: Dual 8(4+4) Pin Male for Motherboard Power Adapter Cable (Amazon $11)

  - Power Supply Extension Cable: StarTech.com 8in 24 Pin ATX 2.01 Power Extension Cable (Amazon $9)

  - CableCreation Internal Mini SAS SFF-8643 to (4) 29pin SFF-8482 (Amazon $18)

  - Case: Rosewill RISE Glow EATX (Newegg $54)

- Existing Items I’ll move over from the old 3 Hosts:

  - Power supplies

  - 200GB SAS SSD

  - 600GB SAS HDD

  - 2TB SATA HDD

  - 64GB USB Thumb Drive

  - IBM 5210 JBOD Disk Controller

  - CableCreation Internal Mini SAS SFF-8643 to (4) 29pin SFF-8482 connectors with SATA Power, 1M

  - HP 593742-001 NC523SFP DUAL PORT SFP+ 10Gb SERVER ADAPTER W/ HIGH PROFILE BRACKET

  - HP 684517-001 Twinax SFP 10gbe 0.5m DAC Cable Assembly


The total cost for me to upgrade each server using purchased and existing items came out to ~$425 US each. If you built this configuration without existing items, the cost would be around $850 US. Clearly, reusing my existing hardware and taking a step back to older Xeon CPUs and DDR3 RAM saved quite a few dollars.
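The per-host figures can be checked with a quick tally. This Python sketch simply adds the purchase prices listed above as written; that sum ($444) lands near, but not exactly on, the ~$425 quoted, since the post does not break down how the ~$425 was reached and some entries (such as the $80 lot of 10 drives) are not strictly per-host purchases. The savings calculation uses the post's own ~$425 and ~$850 estimates across three hosts.

```python
# Purchase prices (USD) as listed in the post; the drive lot covers 10 drives.
purchases = {
    "JINGSHA X79 dual-CPU motherboard": 86,
    "4mm nylon motherboard stands": 8,
    "128GB DDR3 ECC RAM": 110,
    "Xeon E5-2640 v2": 30,
    "DEEPCOOL GAMMAXX 400 cooler": 19,
    "ASUS Neon PCIe x1 video card": 15,
    "PCIe x1 to x16 riser": 4,
    "600GB drives (lot of 10)": 80,
    "8-pin motherboard power adapter": 11,
    "24-pin ATX extension cable": 9,
    "Mini SAS SFF-8643 to SFF-8482 cable": 18,
    "Rosewill RISE Glow case": 54,
}

HOSTS = 3
COST_WITH_REUSE = 425     # post's per-host estimate using existing parts
COST_WITHOUT_REUSE = 850  # post's per-host estimate without existing parts

listed_total = sum(purchases.values())
savings_per_host = COST_WITHOUT_REUSE - COST_WITH_REUSE

print(f"listed purchase prices: ${listed_total}")
print(f"savings: ${savings_per_host} per host, ${savings_per_host * HOSTS} for {HOSTS} hosts")
```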

My next steps are to finalize my orders and start the assembly process. I’ll post updates on my progress soon.

For now, here are a few pre-deployment pics ~Enjoy!

If you like my ‘no-nonsense’ videos and blogs that get straight to the point… then post a comment or let me know… Else, I’ll start posting really boring content!

January 27, 2020 at 9:16 am

Why not get a few Dell R710s? Only downside is that they’re cheap and memory is cheap too. It’s all used stuff and I’ve had a few bad memory sticks, but overall it rocks. I have 2 R710s: one with X5690s, which are power-hungry, and a backup machine with slower E56xx CPUs. Works great.

January 27, 2020 at 3:53 pm

Thanks for the reply, and I do appreciate your viewpoints. I started my home lab based on white box servers and have built them up over the years. I’ve blogged about many things that are important to me and my home lab use case – flexibility, heat, and noise, for example. I tend to avoid the “server” class in general, as these machines tend to be loud, power hungry, and hot, can require non-commodity parts, have a rigid footprint, and a refresh/update could mean buying another. This doesn’t mean they won’t work well, but for what I need, white box builds fit my needs well. I do hope this helps to clarify your question.

January 27, 2020 at 4:06 pm

oh, that makes perfect sense. I started out with a whitebox which is now pretty outdated. I recently jumped into using the older server class machines. Overall I think the issues you mention I haven’t seen all that much. I do think they are more robust and I absolutely love the ipmi for it. Pricewise I think I spent a LOT less than what I would for a similar performance white box machine. I run one of them 24×7 and other than higher power usage and slightly more heat I don’t see any other downsides. Buying 16G ram sticks for 20 bucks each can get you one hell of a setup. I run 140G of ram in my main machine and the backup has 96G of ram. Dual powersupply… well, I guess it’s what you like the most. I might be sol if esxi 7 comes out and they no longer support the cpu’s, but by then I will jump on one of the alternatives that are currently coming out or just stick w 6.7 for a while. I installed xcp-ng on my old whitebox and it’s impressive.

January 27, 2020 at 9:31 am

I used to run 3 HuananZhi X79 boards with 128GB RAM and a single-socket Xeon 2689v1. I recently upgraded to a single-socket Jingsha X99 board, but with DDR3. It is based on the C612 chipset and works with certain v3 Xeons (I got a Xeon 2673v3 for U$S90 shipped, a 12c/24t 105W CPU with an all-core turbo of 2.7GHz). I paired it with 256GB DDR3-1600 RAM, and it works great (X79 boards are a little bit finicky with quad-rank modules).

So I went from 3 Xeons E5 2689v1 128GB to 2 Xeons E5 2673v3 256GB. Lower power consumption, more RAM, newer and faster CPUs (In my experience my home lab doesn’t require much CPU, but loves RAM. I run NSX-T, NSX-V, vRO, vRA, Director, Horizon, Operations…)

I would always recommend spending more on RAM than on CPU for a home lab environment. There are some dual socket boards with 16 DIMMs.

January 27, 2020 at 3:59 pm

Thank you for the comment and info! I agree RAM is clearly the leading shortage in a lot of cases. However, my previous lab was based on 4 cores, and in some cases OVA installs required more cores than I could provide, so they just wouldn’t install. With my new lab I’ll be able to support current and future install requirements.

February 19, 2021 at 2:06 pm

Hi

I’m thinking of getting a HuananZhi X79 with an E5-2670 for home lab use. I intend to run vSphere (6.5 or 7) on this platform. Based on your experience with this mobo and vSphere (I suppose), did you run into any issues or incompatibilities running ESXi on this platform?

February 20, 2021 at 4:35 pm

Thank you for the comment. I bought the board in my posts because I wanted something new rather than something used on eBay; my thought was that I would get more reliability than with used eBay boards. The board in this post did allow me to install ESXi 6.7, and at one point I did have vSAN Hybrid installed. I ran these boards for weeks on ESXi 6.7 with folding@home VMs. Folding@home really taxes the CPU and worked these boards really hard. They did not fail, and they seemed to work well. There were a couple of reasons why I went with a different board: mostly, the 16x PCIe slots only worked with video cards, the space behind the two PCIe 1x slots only allows for small cards, and there is no onboard video. I cover all these points in my wrap-up video, so let me know if you have any other questions – https://www.youtube.com/watch?v=sZ2ZplzlyW0&list=PLiWivaJb025Zq9ySz8KTY3P647Qucdg0N&index=8

February 21, 2021 at 11:50 am

Thank you for the reply.

I will buy a different mobo (http://www.huananzhi.com/html/1/184/185/532.html), but I guess it’s going to work with vSphere 6.7 too. My main concern is the drivers, as it is an uncommon build.

February 22, 2021 at 8:40 am

My strong suggestion is to keep away from motherboards (mobos) with Realtek NICs. Realtek NICs have not been supported since the 6.x days, and the old drivers out there do not work with vSphere 7. Find a mobo with a NIC that is on the VMware HCL, or you are going to have issues if you try to install vSphere 7.

February 17, 2020 at 6:03 am

[…] More information on the overall components can be found here: https://vmexplorer.com/2020/01/27/home-lab-gen-v-the-quest-for-more-cores-part-i-design-consideratio… […]


June 17, 2020 at 1:27 pm

thank you for sharing.

what about this motherboard for ESXi 7?

https://www.alibaba.com/product-detail/World-premiere-lga2011-v3-v4-socket_62451408040.html?spm=a2700.galleryofferlist.0.0.176f732dmsVzW1

June 17, 2020 at 1:30 pm

I have no idea on that motherboard for ESXi 7. There’s been a lot of changes with ESXi 7 and most home labs I’ve seen that work okay are “more” closely aligned to the vSphere HCL list.

June 17, 2020 at 1:44 pm

I read that the dual X99 motherboard is not that good from an overclocking perspective.

June 25, 2020 at 8:37 am

I wouldn’t know; I’ve done zero reading on that board.

November 29, 2020 at 3:56 am

Hey Matt, I’m really liking your article. I’m always looking into these motherboards. For the memory, does the type matter? Server memory or not? Or does any memory fit the motherboard? What type of memory is in yours?

December 1, 2020 at 8:34 am

Hey Kristof, thank you for the question. Please note, I have moved on from these boards. But for the Jingsha board I used “HYNIX HMT42GR7BMR4C-G7 16gb (1x16gb) Pc3-8500r 1066mhz Cl7 Quad Rank X4 Registered Ecc Ddr3 Sdram 240-pin Rdimm Memory Module For Server”. These modules easily transfer into the SuperMicro motherboard I am currently running. For more information on my current build, see here – https://vmexplorer.com/home-labs-a-definitive-guide/

December 1, 2020 at 8:58 am

Hey Matt, thanks for your reply. Yes, I’ve seen the new article only after my comment. Any specific reason why you went away from those boards?

December 1, 2020 at 9:07 am

I had issues adapting them to vSphere 7. The first paragraph of this blog post sums it up: https://vmexplorer.com/2020/11/03/updating-vmware-homelab-gen-5-to-gen-7/
