VSAN – The Migration from FreeNAS

Well folks, here is my long-awaited blog post on moving my home lab from FreeNAS to VMware VSAN.

Here are the steps I took to migrate my Home Lab GEN II with FreeNAS to Home Lab GEN III with VSAN.

Note –

- I am not focusing on ESXi setup; this post concentrates on the steps to set up VSAN.

- My home lab is in no way on the VMware HCL. If you are building something like this for production, use the VSAN HCL as your reference.

The Plan –

- Meet the Requirements

- Back Up VMs

- Update and Prepare Hardware

- Distribute Existing hardware to VSAN ESXi Hosts

- Install ESXi on all Hosts

- Setup VSAN

The Steps –

Meet the Requirements

- Minimum of three hosts

- Each host has a minimum of one SSD and one HDD

- The hosts must be managed by vCenter Server 5.5 and configured as a Virtual SAN cluster

- Minimum of 6GB RAM per host

- Each host has a pass-through RAID controller as specified in the HCL. The RAID controller must be able to present disks directly to the host without a RAID configuration.

- 1Gb NIC minimum. I’ll be running 2 x 1Gb NICs; however, 10Gb and jumbo frames are recommended.

- A VSAN VMkernel port configured on every host participating in the cluster.

- All disks that will be allocated to VSAN should be clear of any data.
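Before tearing anything down, it helped me to think of the list above as a checklist I could run against each box. Here is a minimal sketch of that idea in Python. The `Host` record and its field names are hypothetical (not a VMware API), the values come from my end-result host list further down, and `passthrough_controller` is assumed True because my disks run in AHCI mode. The vCenter, VMkernel port, and "disks are clean" items are not modeled here.

```python
from dataclasses import dataclass

# Hypothetical host record; field names are illustrative, not a VMware API.
@dataclass
class Host:
    name: str
    ram_gb: int
    ssd_count: int
    hdd_count: int
    nic_gbps: float
    passthrough_controller: bool  # disks presented directly (e.g. AHCI), no RAID volume

MIN_HOSTS = 3
MIN_RAM_GB = 6
MIN_NIC_GBPS = 1

def check_requirements(hosts: list[Host]) -> list[str]:
    """Return human-readable problems; an empty list means the checklist passes."""
    problems = []
    if len(hosts) < MIN_HOSTS:
        problems.append(f"need at least {MIN_HOSTS} hosts, found {len(hosts)}")
    for h in hosts:
        if h.ssd_count < 1:
            problems.append(f"{h.name}: no SSD")
        if h.hdd_count < 1:
            problems.append(f"{h.name}: no HDD")
        if h.ram_gb < MIN_RAM_GB:
            problems.append(f"{h.name}: {h.ram_gb}GB RAM is below {MIN_RAM_GB}GB")
        if h.nic_gbps < MIN_NIC_GBPS:
            problems.append(f"{h.name}: NIC slower than {MIN_NIC_GBPS}Gb")
        if not h.passthrough_controller:
            problems.append(f"{h.name}: controller cannot pass disks through")
    return problems

# Values taken from the end-result host list (RAM, 1 SSD, 2 HDDs, 1Gb NICs).
lab = [
    Host("Host 1", 24, 1, 2, 1, True),
    Host("Host 2", 32, 1, 2, 1, True),
    Host("Host 3", 32, 1, 2, 1, True),
]
print(check_requirements(lab) or "all checks passed")
```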

Back Up Existing VMs

- No secret here around backups. I used vCenter Server OVF export to a local disk to back up all my critical VMs.

Update and Prepare Hardware

- Update all motherboard (Mobo) BIOS and disk firmware

- Remove all HDDs / SSDs from the FreeNAS SAN

- Remove any data from the HDDs / SSDs. Either of these tools does the job:

- Windows tool – “MiniTool Partition Wizard”

- Boot ISO – GParted

Distribute Existing hardware to VSAN ESXi Hosts

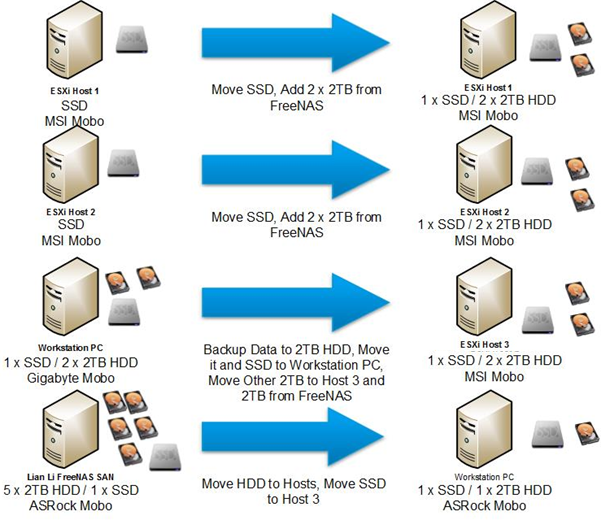

- Current Lab – 1 x VMware Workstation PC, 2 x ESXi hosts booting from USB (Host 1 and 2), 1 x FreeNAS SAN

- Desired Lab – 3 x ESXi hosts with VSAN and 1 x Workstation PC

End Results after Moves

- All Hosts ESXi 5.5U1 with VSAN enabled

- Host 1 – MSI 7676, i7-3770, 24GB RAM, Boot 160GB HDD, VSAN disks (2 x 2TB HDD SATA II, 1 x 60GB SSD SATA III), 5 x pNICs

- Host 2 – MSI 7676, i7-2600, 32 GB RAM, Boot 160GB HDD, VSAN disks (2 x 2TB HDD SATA II, 1 x 90 GB SSD SATA III), 5 x pNICs

- Host 3 – MSI 7676, i7-2600, 32 GB RAM, Boot 160GB HDD, VSAN disks (2 x 2TB HDD SATA II, 1 x 90 GB SSD SATA III), 5 x pNICs

- Note – I have ditched my Gigabyte z68xp-UD3 Mobo and bought another MSI 7676 board. I started this VSAN conversion with the Gigabyte board, and it began giving me fits again, just as it had in the past. There are many web posts about bugs with this board. I am simply done with it and have moved to a Mobo that is working reliably for me.
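To put numbers on the disk layout above, here is a small capacity sketch. It treats 2TB as 2000GB and relies on the fact that in this hybrid VSAN 5.5 setup the SSDs act as cache and do not add to datastore capacity, so raw capacity comes from the HDDs alone (and with a mirrored FTT=1 policy, usable space is roughly half of raw). The dictionary layout and function name are just my own illustration.

```python
# Disk inventory per host, copied from the end-result list (sizes in GB; 2TB taken as 2000GB).
hosts = {
    "Host 1": {"ssd_gb": [60], "hdd_gb": [2000, 2000]},
    "Host 2": {"ssd_gb": [90], "hdd_gb": [2000, 2000]},
    "Host 3": {"ssd_gb": [90], "hdd_gb": [2000, 2000]},
}

def summarize(inventory):
    """Return raw HDD capacity, total flash, and each host's flash as a % of its HDD capacity."""
    raw_gb = sum(sum(d["hdd_gb"]) for d in inventory.values())
    flash_gb = sum(sum(d["ssd_gb"]) for d in inventory.values())
    ratios = {
        name: round(100 * sum(d["ssd_gb"]) / sum(d["hdd_gb"]), 2)
        for name, d in inventory.items()
    }
    return raw_gb, flash_gb, ratios

raw, flash, ratios = summarize(hosts)
print(raw, flash, ratios)  # 12000 240 {'Host 1': 1.5, 'Host 2': 2.25, 'Host 3': 2.25}
```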

Install ESXi on all Hosts

Starting with Host 1:

- Prior to install, ensure all data has been removed and all disks show up in the BIOS in AHCI mode

- Install ESXi to the local boot HD

- After the install, ESXi hung at boot on ‘Starting up Services – Running usbarbitrator start’

- Solution – stop the usbarbitrator service

- http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1023976

- Using the direct console, set up the ESXi base IP address and DNS, disable IPv6, and enable the ESXi Shell and SSH

- Using the VI Client, set up the basic ESXi networking and vSwitch

- Using the VI Client, I restored the vCSA and my AD server from OVF and powered them on

- Once booted, I logged into the vCSA via the Web Client

- I built out the datacenter and added Host 1

- Created a cluster with only EVC enabled, to support my mix of Intel CPUs

- Cleaned up any old DNS settings and ensured the DNS records for all ESXi hosts are correct

- From the Web Client, validated that 2 x HDD and 1 x SSD are present in the host

- Installed ESXi on Hosts 2 and 3, followed most of these steps, and added them to the cluster

Setup VSAN

- Log on to the Web Client

- Ensure the following on all hosts:

- Networking is set up and all functions are working

- NTP is working

- All expected HDDs for VSAN are reporting in to ESXi

- Create a vSwitch for VSAN and attach networking to it

- I attached 2 x 1Gb NICs, which should be enough for my load

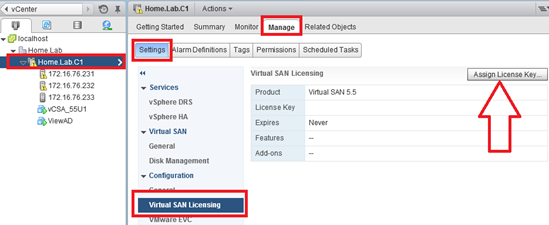
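For a rough sense of what "enough" means here, a link's theoretical ceiling in MB/s is its Gb/s rate × 1000 / 8. This is a minimal sketch that ignores protocol overhead. Whether VSAN traffic can actually use both 1Gb NICs at the same time is an assumption you should verify for your version (a reader questions it in the comments), which is why the two-NIC row is labeled "if both were usable".

```python
def line_rate_mb_per_s(gbit_per_s: float) -> float:
    """Theoretical payload ceiling in MB/s (decimal units), ignoring protocol overhead."""
    return gbit_per_s * 1000 / 8

for label, gbps in [("1Gb", 1), ("2 x 1Gb (if both were usable)", 2), ("10Gb", 10)]:
    print(f"{label}: {line_rate_mb_per_s(gbps):.0f} MB/s")
```

On a 1Gb link that works out to about 125 MB/s before overhead, which is one reason 10Gb is the recommendation.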

Assign the VSAN License Key

- Click on the Cluster > Manage > Settings > Virtual SAN Licensing > Assign License Key

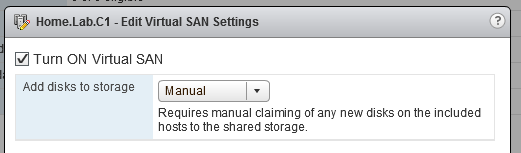

Enable VSAN

- Under Virtual SAN, click General, then Edit

- Choose ‘Turn on Virtual SAN’

- Set ‘Add disks to storage’ to Manual

Note – For a system on the HCL, chances are the Automatic setting will work without issue. However, my system is not on any VMware HCL, and I want to control which drives are added to my disk group.

Add Disks to VSAN

- Under Virtual SAN, click ‘Disk Management’

- Click the icon with the check boxes on it

- Finally, add the disks you want in your disk group

- Allow VSAN to complete its tasks; you can check its progress under ‘Tasks’

- Once complete, ensure all disks report in as healthy.
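Since I claim disks manually, it is worth sanity-checking a planned layout against the VSAN 5.5 disk group rules as I understand them: exactly one SSD per disk group, one to seven HDDs per group, and at most five disk groups per host. A minimal sketch; the tuple format is my own shorthand, not anything the Web Client exposes.

```python
MAX_HDDS_PER_GROUP = 7
MAX_GROUPS_PER_HOST = 5

def validate_disk_groups(groups):
    """groups: list of (ssd_count, hdd_count) tuples for one host, one tuple per disk group."""
    errors = []
    if len(groups) > MAX_GROUPS_PER_HOST:
        errors.append(f"{len(groups)} disk groups exceeds {MAX_GROUPS_PER_HOST}")
    for i, (ssds, hdds) in enumerate(groups, start=1):
        if ssds != 1:
            errors.append(f"group {i}: needs exactly 1 SSD, has {ssds}")
        if not 1 <= hdds <= MAX_HDDS_PER_GROUP:
            errors.append(f"group {i}: needs 1-{MAX_HDDS_PER_GROUP} HDDs, has {hdds}")
    return errors

# Each host in this lab gets one disk group: 1 SSD + 2 HDDs.
print(validate_disk_groups([(1, 2)]))  # []
```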

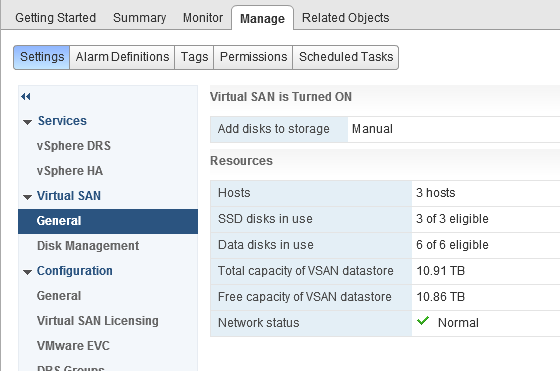

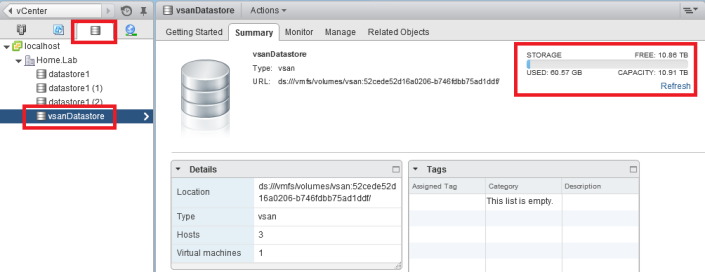

Ensure the VSAN General tab reports correctly:

- 3 Hosts

- 3 of 3 SSD’s

- 6 of 6 Data disks

- Check that the datastore is online
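The expected counts above fall straight out of the disk inventory, so a quick cross-check can catch a disk that never got claimed. A minimal sketch, assuming you read the numbers off the General tab by hand; the inventory dictionary and the "observed" values are illustrative, not output from any VMware tool.

```python
# Illustrative inventory mirroring this lab: (ssd_count, hdd_count) claimed per host.
claimed = {"Host 1": (1, 2), "Host 2": (1, 2), "Host 3": (1, 2)}

def expected_general_tab(inventory):
    """Counts the General tab should show if every planned disk was claimed."""
    return {
        "hosts": len(inventory),
        "ssds": sum(ssd for ssd, _ in inventory.values()),
        "data_disks": sum(hdd for _, hdd in inventory.values()),
    }

def compare(expected, observed):
    """List every counter where the General tab disagrees with the inventory."""
    return [f"{key}: expected {expected[key]}, saw {observed.get(key)}"
            for key in expected if observed.get(key) != expected[key]]

exp = expected_general_tab(claimed)
print(exp)  # {'hosts': 3, 'ssds': 3, 'data_disks': 6}
# Example of a missing HDD showing up in the comparison:
print(compare(exp, {"hosts": 3, "ssds": 3, "data_disks": 5}))  # ['data_disks: expected 6, saw 5']
```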

Summary –

Migrating from FreeNAS to VSAN was a relatively simple process. I moved, prepared, and installed the hardware, and the product came right up. My only issue was a faulty Gigabyte Mobo, which I resolved by replacing it. I’ll post more as I continue to work with VSAN. If you are interested in more detail around VSAN, I would recommend the following book.

September 24, 2014 at 5:56 pm

[…] Crookston) VMware VSAN meets EZLAB (Virtual JAD) My VSAN home lab configuration (Virtualize Tips) VSAN – The Migration from FreeNAS (VMexplorer) Home Lab – Part 4.1: VSAN Home Build (vWilmo) Home Lab – Part 4.2: VSAN […]

June 18, 2016 at 1:29 pm

How do you find the performance of VSAN, given that you are using consumer disks? How does it compare to FreeNAS?

I’m about to purchase myself some kit for a home lab and still can’t decide between:

1) Two ESXi servers and an iSCSI FreeNAS server

OR:

2) 3 ESXi servers using VSAN only

I did a bit of testing with VSAN on my single server at home and was “only” getting about 150-200MB/s write speeds with Samsung SM863 enterprise SSDs in the cache and capacity tiers. I don’t think that was great? Turning off the checksum feature in the VSAN policy helped, but I still only saw about 300MB/s tops.

Interested to hear your thoughts!

July 7, 2016 at 3:25 pm

In my home lab experience, VSAN outperformed FreeNAS dramatically. If you are running VSAN 6.2, look in the vCenter Server Web Client under Monitoring and/or Performance; you should see many ways to monitor and test its performance. Based on your post, it’s hard for me to tell what your issue could be. However, if you haven’t yet, I would recommend following the VSAN Design Guide. It’s a no-nonsense approach to VSAN design and I’d highly recommend it. http://www.vmware.com/files/pdf/products/vsan/virtual-san-6.2-design-and-sizing-guide.pdf

July 7, 2016 at 11:29 pm

Thanks for the reply! I am leaning towards VSAN 6.2 rather than FreeNAS (I’ve read too many bad things about iSCSI/ZFS and fragmentation), but I want to go the All Flash route with VSAN, and I think 10Gb is recommended (or required?) for that. The servers I want to buy have 10Gb NICs in them, but I have yet to find a practical (i.e. quiet!) 10Gb switch. How do you find the performance using 1Gb NICs with your setup?

If I remember correctly, you can’t set up Virtual SAN traffic to go over multiple 1Gb NICs like you can with (say) iSCSI (MPIO).

August 6, 2016 at 7:25 am

I’m running lower load levels currently. However, I’m planning to install an InfiniBand switch, which will give me 20Gb/s of bandwidth per HBA. Blog post coming soon on this…

August 6, 2016 at 1:14 pm

I eagerly await your post on this, as I am currently looking into using InfiniBand for my backend storage network and vSphere 6.

July 1, 2017 at 10:47 am

Hey Matt,

Thank you for sharing this article on how to build a 3-Node vSAN home lab solution.

I had tried to build a single-node vSAN ESXi 5.5 solution after William Lam (http://www.virtuallyghetto.com) put out a blog post on how to bootstrap a vCSA, but it proved unreliable, so I ditched it.

I have 3 identical low-power Supermicro Atom 2750 systems and was thinking of setting them up similar to the way you have done. But I am curious how, in your experience, you handle reliably powering the cluster nodes down and up, given vSAN’s dependency on the vCSA being always available.

Reading the VMware docs, I found that reliably shutting down a vSAN cluster requires a 4-node cluster, but I am still somewhat confused as to how vSAN handles powering down the vCSA.

Cheers,

MP

July 6, 2017 at 11:48 am

I’m not quite sure I fully understand your question, but I think you want to know how to fully shut down the environment. With a 3-node cluster and a policy of FTT1, you can shut down a vSAN cluster without issue. Here is how I do it. Validate that no components are resyncing, then shut down all VMs in an order that works for your environment. For me, I shut down my VMs, then my AD/DNS VMs, and then vCenter Server. From there I simply use the thick client to put the hosts in maintenance mode (mmode) with no data evacuation and shut them down. To power on, I power on my servers, use the thick client to take the hosts out of mmode, power on my AD/DNS VMs, and then power on vCenter Server. I then go into vCenter Server, validate the cluster’s health, and power on the rest of the VMs. You could also follow this procedure: https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2142676
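The shutdown and power-on order above, written out as a plain checklist. This is only a sketch of the sequence described in this reply: it prints the steps and makes no vSphere API calls, and the step wording is mine, not an official VMware procedure.

```python
# Runbook sketch of the order described above; it only prints steps, nothing is automated.
SHUTDOWN = [
    "Validate that no vSAN components are resyncing",
    "Shut down workload VMs",
    "Shut down AD/DNS VMs",
    "Shut down vCenter Server",
    "Thick client: enter maintenance mode with no data evacuation on every host",
    "Power off hosts",
]

STARTUP = [
    "Power on hosts",
    "Thick client: exit maintenance mode on every host",
    "Power on AD/DNS VMs",
    "Power on vCenter Server",
    "vCenter: validate cluster health",
    "Power on remaining VMs",
]

def print_runbook(title, steps):
    """Print numbered steps under a title."""
    print(title)
    for n, step in enumerate(steps, start=1):
        print(f"  {n}. {step}")

print_runbook("Shutdown", SHUTDOWN)
print_runbook("Startup", STARTUP)
```

Keeping AD/DNS ahead of vCenter on the way up matters because vCenter relies on name resolution when it starts.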

Now, why would you need 4 nodes for FTT1 when 3 nodes are enough? You “might” want 4 nodes if you want to take down 1 node and still support FTT1. 4 nodes enable you to support the FTT1 policy and have enough capacity to reboot one node at a time.
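A minimal sketch of the host-count arithmetic behind that answer, using the commonly documented rule that a mirrored (RAID-1) policy tolerating n host failures needs 2n + 1 hosts. The `maintenance_headroom` flag is my own illustration of the "one spare node" point above.

```python
def min_hosts(ftt: int, maintenance_headroom: bool = False) -> int:
    """Hosts needed for a mirrored vSAN policy that tolerates `ftt` host failures.

    Uses the 2n + 1 rule (replicas plus witness components on separate hosts).
    One extra host lets a node be taken down while the policy stays satisfied.
    """
    hosts = 2 * ftt + 1
    return hosts + 1 if maintenance_headroom else hosts

print(min_hosts(1))        # 3
print(min_hosts(1, True))  # 4
print(min_hosts(2))        # 5
```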

Is VSAN dependent on vCenter Server? Can I configure VSAN if vCenter is down?

VSAN is not dependent on vCenter. It can be configured from the console using “esxcli” and can even be configured and used before vCenter is up and running. If vCenter is down, you only lose the GUI until it returns. In newer versions such as ESXi 6.5, the Host Client even has options to monitor vSAN.

I Hope this helps…

July 11, 2017 at 8:15 pm

Matt, thank you so much for clearing up the confusion I had from a bad experience: I tried to set up an experimental single-node vSAN, it failed, and I lost all my VMs. Luckily I had OVF backups of my DC, NAS, and vCenter.

I now get it. The trick is to store the core management VMs on a separate disk and use the vSAN datastore purely for rip-and-replace lab VMs (in my case), so that if there is a problem with the vSAN datastore, I will only lose the lab VMs.

I will give it a try and report back.
