Home Lab – VMware ESXi 5.1 with iSCSI and freeNAS

Recently I updated my home lab with a freeNAS server (post here). In this post, I will cover my iSCSI setup with freeNAS and ESXi 5.1.

Keep this in mind when reading – this post is about my home lab. My home lab is not a high-performance production environment; its intent is to allow me to test and validate virtualization software. You might question some of the choices I have made here, but keep in mind I’ve made them because they fit my environment and its intent.

Overall Hardware…

Click on these links for more information on my lab setup…

- ESXi Hosts – 2 x ESXi 5.1, Core i7, USB Boot, 32GB RAM, 5 x NICs

- freeNAS SAN – freeNAS 8.3.0, 5 x 2TB SATA III, 8GB RAM, Zotac M880G-ITX Mobo

- Networking – Netgear GSM7324 with several VLANs and routing set up

Here are the overall goals…

- Set up an iSCSI connection from my ESXi Hosts to my freeNAS server

- Use the SYBA Dual NIC to make balanced connections to my freeNAS server

- Enable Balancing or teaming where I can

- Support a CIFS Connection

Here is basic setup…

freeNAS Settings

Create 3 networks on separate VLANs – 1 for CIFS and 2 for iSCSI (with this layout there is no need for freeNAS teaming)

CIFS

The CIFS settings are simple. I followed the freeNAS guide and set up a CIFS share.

iSCSI

Create 2 x iSCSI LUNs, 500GB each

Set up the basic iSCSI settings under “Services > iSCSI”

- I used this doc to help with the iSCSI setup

- The only exception is – Enable both of the iSCSI network adapters in the “Portals” area

ESXi Settings

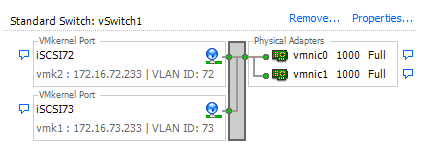

Set up your iSCSI vSwitch and attach two dedicated NICs

Set up two VMkernel Ports for iSCSI connections

Ensure that the first VMkernel Port group (iSCSI72) uses ONLY vmnic0 as its active uplink, and that the second (iSCSI73) uses ONLY the other dedicated NIC
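The one-uplink-per-port-group rule can be sanity-checked with a small sketch like the one below. It is only a model of the teaming layout and does not talk to ESXi. The `vmnic1` name for the second uplink is my assumption (the post only names `vmnic0`), and `check_port_binding` is a hypothetical helper written for this illustration.

```python
def check_port_binding(teaming):
    """Return a list of problems found in an iSCSI port-binding layout.

    `teaming` maps a port group name to a dict with "active" and
    "standby" lists of uplink names.
    """
    problems = []
    owner = {}  # uplink name -> port group that already uses it as active
    for pg, policy in teaming.items():
        active = policy.get("active", [])
        standby = policy.get("standby", [])
        if len(active) != 1:
            problems.append(
                f"{pg}: expected exactly 1 active uplink, found {len(active)}"
            )
        if standby:
            problems.append(f"{pg}: standby uplinks should be empty, found {standby}")
        for nic in active:
            if nic in owner:
                problems.append(f"{pg}: {nic} is already active on {owner[nic]}")
            else:
                owner[nic] = pg
    return problems


layout = {
    "iSCSI72": {"active": ["vmnic0"], "standby": []},
    "iSCSI73": {"active": ["vmnic1"], "standby": []},  # vmnic1 is an assumed name
}
print(check_port_binding(layout) or "layout looks OK")
```

If both port groups listed both NICs as active, the function would report two problems per group, which is the situation the step above is meant to avoid.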

Enable the iSCSI LUNs by following the standard VMware instructions

Note – Ensure you bind BOTH iSCSI VMkernel Ports to the software iSCSI adapter
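With two iSCSI VLANs it is easy to end up with a VMkernel port and a freeNAS portal on different subnets (a reader reports exactly this in the comments). A minimal sketch of that check, using Python’s standard `ipaddress` module, is below. All addresses are hypothetical examples, not the ones from my lab, and `same_subnet` is a helper made up for this illustration.

```python
import ipaddress


def same_subnet(vmk_cidr, portal_ip):
    """True if the portal address falls inside the VMkernel port's subnet."""
    network = ipaddress.ip_interface(vmk_cidr).network
    return ipaddress.ip_address(portal_ip) in network


# Port group -> (VMkernel address with prefix, freeNAS portal address).
# Every address here is a made-up example.
pairs = {
    "iSCSI72": ("192.168.72.11/24", "192.168.72.5"),
    "iSCSI73": ("192.168.73.11/24", "192.168.73.5"),
}

for name, (vmk, portal) in pairs.items():
    status = "ok" if same_subnet(vmk, portal) else "DIFFERENT SUBNET"
    print(f"{name}: {vmk} -> {portal}: {status}")
```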

Once you have your connectivity working, it’s time to set up round robin for path management.

Right click on one of the LUNs and choose ‘Manage Paths…’

Change the path selection on both LUNs to ‘Round Robin’

Tip – If you change your iSCSI settings later, re-check your path selection, as it may revert to the default
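To picture what Round Robin does with two paths, here is a rough simulation. It assumes the policy sends a fixed number of I/Os down one path before moving to the next; as far as I know the ESXi default is 1000 I/Os, but treat that number as an assumption. The path names are hypothetical, and this is a toy model, not VMware’s implementation.

```python
from itertools import cycle


def distribute_io(paths, io_count, ios_per_path=1000):
    """Count how many I/Os land on each path under a simple round robin.

    The policy stays on one path for `ios_per_path` I/Os, then moves on.
    """
    counts = {path: 0 for path in paths}
    rotation = cycle(paths)
    current = next(rotation)
    sent_on_current = 0
    for _ in range(io_count):
        if sent_on_current == ios_per_path:
            current = next(rotation)
            sent_on_current = 0
        counts[current] += 1
        sent_on_current += 1
    return counts


# Hypothetical path names for one LUN reached over two VMkernel ports.
paths = ["vmhba33:C0:T0:L0", "vmhba33:C1:T0:L0"]
print(distribute_io(paths, 4000))
```

Over a long run the two paths carry about the same number of I/Os, which is the balancing behaviour you can see in the freeNAS or vCenter monitoring graphs.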

Notes and other Thoughts…

Browser Cache Issues – I had issues with freeNAS not updating information in its web interface, even after reboots of the NAS and my PC. I moved to Firefox and all issues went away. I then cleared my cache in IE and the issues were gone there too.

Jumbo Frames – Can I use Jumbo Frames with the SYBA Dual NIC SY-PEX24028? The short answer is NO; I was unable to get them to work in ESXi 5.1. SYBA Tech support stated that the maximum jumbo frame size for this card is 7168 and that it supports Windows operating systems only. I could get ESXi to accept a 4096 frame size but nothing larger. However, with that size enabled none of the LUNs would connect; once I moved the frame size back to 1500 everything worked perfectly. I beat this up pretty hard, adjusting all types of ESXi, networking, and freeNAS settings, but in the end I decided the 7% boost that Jumbo frames offer wasn’t worth the time or effort.
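For a rough feel of what a larger frame size buys on the wire, the sketch below compares TCP payload efficiency at a few MTU values. It counts only fixed per-frame overhead (Ethernet preamble, header, FCS, inter-frame gap, plus plain 20-byte IP and TCP headers) and ignores VLAN tags, TCP options, iSCSI headers, and CPU or interrupt load, so it is back-of-the-envelope arithmetic rather than a measurement, and it is not meant to reproduce the 7% figure above.

```python
# Per-frame bytes on the wire that are not part of the MTU:
# preamble + start delimiter (8), Ethernet header (14), FCS (4), inter-frame gap (12).
ETH_OVERHEAD = 8 + 14 + 4 + 12
# Bytes inside the MTU used by IPv4 and TCP headers without options.
IP_TCP_HEADERS = 20 + 20


def wire_efficiency(mtu):
    """Fraction of on-wire bytes that carry TCP payload for a full-size frame."""
    return (mtu - IP_TCP_HEADERS) / (mtu + ETH_OVERHEAD)


baseline = wire_efficiency(1500)
for mtu in (1500, 4096, 7168, 9000):
    gain = wire_efficiency(mtu) / baseline - 1
    print(f"MTU {mtu}: efficiency {wire_efficiency(mtu):.3f}, gain vs 1500 {gain:+.1%}")
```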

Summary…

These settings enable my 2 ESXi Hosts to balance their connections to my iSCSI LUNs hosted by the freeNAS server without the use of freeNAS network teaming or aggregation. It is by far the simplest setup, and the out-of-the-box performance is good.

My advice is – go simple with these settings for your home lab and save your time to beat up more important issues like “how do I shut down Windows 8” :)

I hope you found this post useful and if you have further questions or comments feel free to post up or reach out to me.

February 12, 2013 at 1:04 pm

All work and no play – Thanks for the lab!

March 19, 2013 at 2:50 pm

Were there any special settings you needed to set on the vSphere (v5.1) side to get the iSCSI devices to appear? I’m having an issue with FreeNAS 8.3.0 where the iSCSI target is found, but no devices appear. I’d appreciate any input you can provide. Thanks.

April 8, 2013 at 3:41 pm

Rob – Sorry about the delay, I just saw your post. The only thing I did was follow the steps listed in my post, and this guide – http://www.vmware.com/files/pdf/techpaper/vmware-multipathing-configuration-software-iSCSI-port-binding.pdf

April 8, 2013 at 3:45 pm

Ay. It was a noob mistake. Initiator and target weren’t on the same subnet.

April 11, 2013 at 2:49 am

No worries, glad to hear you got it solved!

June 30, 2013 at 3:25 pm

Excellent post; however, I was wanting to know if you could write a little more on this topic? I’d be very thankful if you could elaborate a little bit more. Kudos!

July 22, 2013 at 4:11 pm

Thanks for your comments… I’m happy to write more, what topics did you have in mind?

July 22, 2013 at 2:25 pm

Thank you for your excellent job. I’ll try to do the same in the next few days.

Can you confirm simultaneous access by both ESXi hosts? I’m wondering whether it is possible without some special configuration.

Thanks again. Pierre

July 22, 2013 at 4:15 pm

Hey Pierre – I’m not sure what you mean by “special configuration”; however, the config is pretty simple. Set up basic iSCSI on FreeNAS with multiple (NOT Link-Agg) NICs. Set up ESXi’s native Round Robin for iSCSI and point it to those NICs. So yes, it will balance the load if you set it up correctly. You can see this in FreeNAS or vCenter Server monitoring. Thanks!

July 23, 2013 at 4:14 am

Thanks mattman5, I wasn’t clear: “normally” (according to Microsoft) one iSCSI target should be accessed by one and only one initiator, because the FAT can be corrupted if more than one computer accesses and modifies its content during concurrent operations. So my question was: can I have multiple iSCSI initiators targeting one LUN without any data (FAT) corruption problem?

September 6, 2013 at 11:36 pm

I’m not using my freeNAS SAN with FAT-style LUNs. My iSCSI LUNs are formatted with VMFS, which allows multiple initiators to communicate with the LUNs.

September 3, 2013 at 5:10 am

Did you run any performance tests on your environment? I’m missing something in the configuration; I’m almost sure it’s a config issue because it works fine on other hosts. Let me explain what my lab has:

Windows 7 Client: Core i7 3820, 16GB RAM, 128GB SSD, 2TB+64GB SSD cache, 1xIntel NIC 1Gbps

ESXi Server 1: Core i5 650, 8GB RAM, 160GB local HDD, 3x1Gbps Intel NICs

ESXi Server 2: Athlon II X3 455, 8GB RAM, 160GB local HDD, 3x1Gbps Intel NICs

FreeNAS Server: Pentium G2030, 8GB RAM, 4x1TB 7200RPM RAIDZ1, 2x1Gbps Realtek NICs

The RAIDZ1 has a 2TB CIFS share, and a 600GB zvol for iSCSI (2 uplinks, 2x1Gbps).

CIFS Performance with Windows: 92MB/s Read average

iSCSI Performance with Windows (1 uplink, 1Gbps): 102MB/s average

iSCSI Performance with ESXi 5.1, 1 path (1Gbps): 35MB/s

iSCSI Performance with ESXi 5.1, 2 paths Round Robin (2x1Gbps): 50MB/s

dd on local: 260MB/s

There is something wrong between ESXi and the FreeNAS server; the performance is awful.

I have ruled out the NIC model (Intel vs Realtek), because the Windows client is working near the gigabit maximum.

Any ideas??

September 6, 2013 at 11:36 pm

Thanks for the great question. Let’s take this offline; I will send you an email…

May 10, 2014 at 10:53 pm

Hi,

I followed your setup: a different VLAN per iSCSI path. In vSphere, looking at the iSCSI initiator, I see two IP addresses under iSCSI Server Location (which is great), but the target names are the same (iqn.2011-03.com.lv-ny-san-03:tgt01). Would that cause a problem?

Thank you

March 8, 2019 at 3:28 pm

Thank you for sharing. I have a Gigabyte C1037 motherboard lying around and wanted to use it as FreeNAS storage for my 3-host ESX lab, to expose iSCSI and NFS. Maybe even CIFS.

I would like to use the 2 onboard Realtek NICs. BTW – this motherboard only has 2 NICs. But if I use both NICs for storage traffic, how do I configure the management network? I know FreeNAS does support VLANs, and I also have a managed switch with trunked ports carrying my ESX VM traffic on different VLANs.

Could do with your input on the above.

Thank you!

May 1, 2019 at 2:08 pm

I haven’t played with iSCSI in years (it’s been all vSAN), but I would assume you’d just need to enable “management” on one of the pNICs. Another option, since ESXi management traffic is pretty low, is to just use 1GbE USB pNICs: maybe one for management and one for vMotion. Of course, these are not supported by VMware, but I know lots of home labs that do this.
